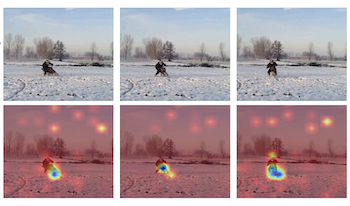

With the explosive growth of video, image, and other data, using computers to classify and analyze human actions automatically and efficiently has become increasingly important. Action recognition, the problem of perceiving and understanding the behavioral state of objects in a dynamic scene, is a fundamental task in computer vision. However, videos containing multiple objects or shot from irregular angles pose a significant challenge for existing action recognition algorithms. To address these problems, the authors propose a novel deep-learning-based method called SlowFast-Convolutional Block Attention Module (SlowFast-CBAM). Specifically, the training dataset is preprocessed using the YOLOX network, and individual frames of the action videos are fed separately into the slow and fast pathways. CBAM is then incorporated into both pathways to highlight features and dynamics in the surrounding environment. Subsequently, the authors establish a relationship between the convolutional attention mechanism and the SlowFast network, allowing the model to focus on the distinguishing features of objects and behaviors that appear before and after different actions, thereby enabling action detection and performer recognition. Experimental results demonstrate that this approach better emphasizes the features of action performers, leading to more accurate action labeling and improved action recognition accuracy.
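
The split of frames between the two pathways can be illustrated with a minimal sketch. It assumes the usual SlowFast convention in which the slow pathway samples one frame every `tau` frames and the fast pathway samples `alpha` times more densely; the function name `slowfast_indices` and the defaults `tau=16`, `alpha=8` are illustrative assumptions and are not stated in the abstract.

```python
def slowfast_indices(num_frames, tau=16, alpha=8):
    """Return (slow, fast) frame indices for a clip of num_frames frames.

    tau   -- temporal stride of the slow pathway (assumed value)
    alpha -- frame-rate ratio between fast and slow pathways (assumed value)
    """
    if tau % alpha != 0:
        raise ValueError("tau must be divisible by alpha")
    fast_stride = tau // alpha
    # Slow pathway: few frames, large stride.
    slow = list(range(0, num_frames, tau))
    # Fast pathway: alpha times more frames, small stride.
    fast = list(range(0, num_frames, fast_stride))
    return slow, fast


slow, fast = slowfast_indices(64)
print(len(slow), len(fast))
```

In this sketch both index lists would be drawn from the same preprocessed clip, so the two pathways see the same action at different temporal resolutions.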

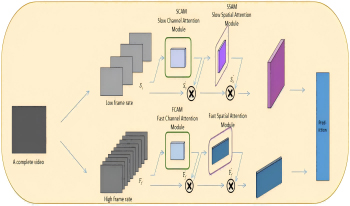

Pre-trained vision-language models, exemplified by CLIP, have exhibited promising zero-shot capabilities across various downstream tasks. Trained on image-text pairs, CLIP is naturally extendable to video-based action recognition, due to the similarity between processing images and video frames. To leverage this inherent synergy, numerous efforts have been directed towards adapting CLIP for action recognition tasks in videos. However, the specific methodologies for this adaptation remain an open question. Common approaches include prompt tuning and fine-tuning with or without extra model components on video-based action recognition tasks. Nonetheless, such adaptations may compromise the generalizability of the original CLIP framework and also necessitate the acquisition of new training data, thereby undermining its inherent zero-shot capabilities. In this study, we propose zero-shot action recognition (ZAR) by adapting the CLIP pre-trained model without the need for additional training datasets. Our approach leverages the entropy minimization technique, utilizing the current video test sample and augmenting it with varying frame rates. We encourage the model to make consistent decisions, and use this consistency to dynamically update a prompt learner during inference. Experimental results demonstrate that our ZAR method achieves state-of-the-art zero-shot performance on the Kinetics-600, HMDB51, and UCF101 datasets.
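
The consistency objective described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes each frame-rate view of the test video has already been scored against the class prompts, and it computes the entropy of the class distribution averaged over the views, which is the quantity a prompt learner could be updated to minimize. The helper names `sample_frame_indices` and `marginal_entropy` are hypothetical.

```python
import math


def sample_frame_indices(num_frames, stride, clip_len):
    """Frame indices for one view of the video at a given temporal stride."""
    return [min(i * stride, num_frames - 1) for i in range(clip_len)]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def marginal_entropy(view_logits):
    """Entropy of the class distribution averaged over all views.

    view_logits -- one list of class logits per augmented view
    """
    probs = [softmax(logits) for logits in view_logits]
    n_views, n_classes = len(probs), len(probs[0])
    mean = [sum(p[c] for p in probs) / n_views for c in range(n_classes)]
    return -sum(p * math.log(p) for p in mean if p > 0.0)


# Views that agree on a class give lower entropy than views that disagree.
agree = marginal_entropy([[5.0, 0.0, 0.0], [4.0, 0.0, 0.0]])
disagree = marginal_entropy([[5.0, 0.0, 0.0], [0.0, 5.0, 0.0]])
print(agree < disagree)
```

Minimizing this value rewards predictions that are both confident and consistent across the frame-rate views, and it needs no labels or extra training data.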

To improve workout efficiency and provide body-movement suggestions to users in a “smart gym” environment, we propose using a depth camera to capture a user’s body parts and mounting multiple inertial sensors on those body parts, and then generating deadlift behavior models with a recurrent neural network structure. The contribution of this paper is threefold: 1) the multimodal sensing signals obtained from multiple devices are fused to generate the deadlift behavior classifiers; 2) the recurrent neural network structure analyzes the synchronized skeletal and inertial sensing data; and 3) a Vaplab dataset is generated to evaluate the deadlift behavior recognition capability of the proposed method.
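
One simple way to fuse synchronized skeletal and inertial data is sketched below. The abstract does not say how the two streams are aligned, so this example assumes nearest-timestamp matching followed by feature concatenation; the function names and the `(timestamp, features)` layout are hypothetical.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the timestamp closest to t (timestamps sorted ascending)."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbour is closer to t.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def fuse(skeleton, imu):
    """Concatenate each skeleton frame with the nearest-in-time IMU sample.

    skeleton, imu -- lists of (timestamp, feature_list), sorted by timestamp
    """
    imu_times = [t for t, _ in imu]
    fused = []
    for t, joints in skeleton:
        _, inertial = imu[nearest_index(imu_times, t)]
        fused.append(joints + inertial)
    return fused


skeleton = [(0.0, [1.0]), (0.1, [2.0])]
imu = [(0.0, [9.0]), (0.04, [8.0]), (0.09, [7.0]), (0.2, [6.0])]
print(fuse(skeleton, imu))
```

Each fused vector would then form one time step of the input sequence passed to the recurrent network.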