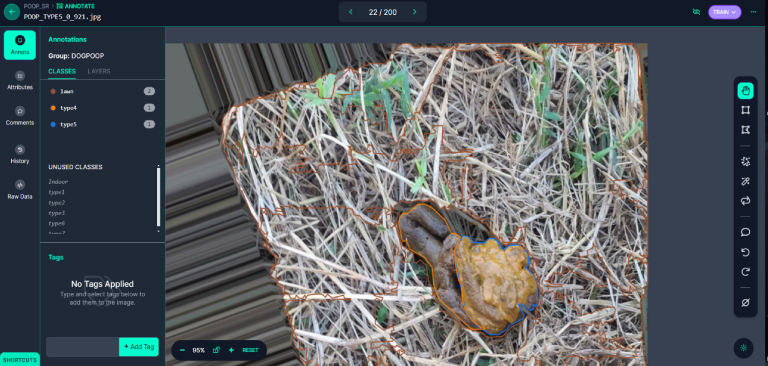

As pets now outnumber newborns in households, demand for pet medical care and attention has surged, placing a significant burden on pet owners. To address this, our experiment uses image recognition technology to make a preliminary assessment of dogs' health from their stool, offering a rapid and economical health assessment method. Through a collaboration, we collected 2613 stool photos, augmented them to a total of 6079 images, and analyzed them using LabVIEW and the YOLOv8 segmentation model. The model achieved a precision of 86.805%, a recall of 74.672%, and an mAP50 of 83.354%, indicating a high recognition rate in determining the condition of dog stools. With advancing technology and the proliferation of mobile devices, the aim of this experiment is to develop an application that lets pet owners assess their pets' health anytime and manage it more conveniently. The experiment also aims to expand the database through cloud computing, optimize the model, and establish a global interactive pet health community. These developments could drive innovation in pet medical care and provide practical health management tools for pet families, potentially offering substantial help to more pet owners in the future.
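
Precision, recall, and mAP50 are usually computed by ranking predictions by confidence, counting a prediction as a true positive when its best-overlapping ground-truth object has an IoU of at least 0.5 and has not already been matched, and taking AP as the area under the resulting precision-recall curve. The sketch below illustrates this for one class on one image. It is a simplification under stated assumptions, not the evaluation code used in this work: it uses axis-aligned boxes instead of the segmentation masks that YOLOv8-seg produces (a mask IoU would replace `box_iou`), it uses all-point interpolation (library implementations may interpolate differently), and the function names are hypothetical. mAP50 would be the mean of this AP over classes, accumulated across all images.

```python
from typing import List, Tuple

# (x1, y1, x2, y2): top-left and bottom-right corners
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def precision_recall_ap(preds: List[Tuple[Box, float]], gts: List[Box],
                        iou_thr: float = 0.5) -> Tuple[float, float, float]:
    """Hypothetical single-class, single-image precision, recall and AP.

    preds holds (box, confidence) pairs. Precision and recall are returned
    for the case where every prediction is kept (lowest confidence cutoff).
    """
    ranked = sorted(preds, key=lambda p: p[1], reverse=True)
    matched = [False] * len(gts)
    precisions, recalls = [], []
    tp = 0
    for k, (box, _conf) in enumerate(ranked, start=1):
        # Find the ground-truth object this prediction overlaps most
        ious = [box_iou(box, gt) for gt in gts]
        best_j = max(range(len(gts)), key=ious.__getitem__, default=-1)
        # True positive only if overlap is high enough and the object is unclaimed
        if best_j >= 0 and ious[best_j] >= iou_thr and not matched[best_j]:
            matched[best_j] = True
            tp += 1
        precisions.append(tp / k)
        recalls.append(tp / len(gts) if gts else 0.0)

    # All-point interpolation: area under the monotone precision envelope
    ap, prev_r = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_r) * max(precisions[i:])
        prev_r = r
    if not ranked:
        return 0.0, 0.0, 0.0
    return precisions[-1], recalls[-1], ap
```

With two ground-truth objects and three predictions (two correct, one spurious ranked in between), precision ends at 2/3, recall at 1.0, and AP at 5/6, which shows how a false positive ranked above a true positive lowers AP even when recall is perfect.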

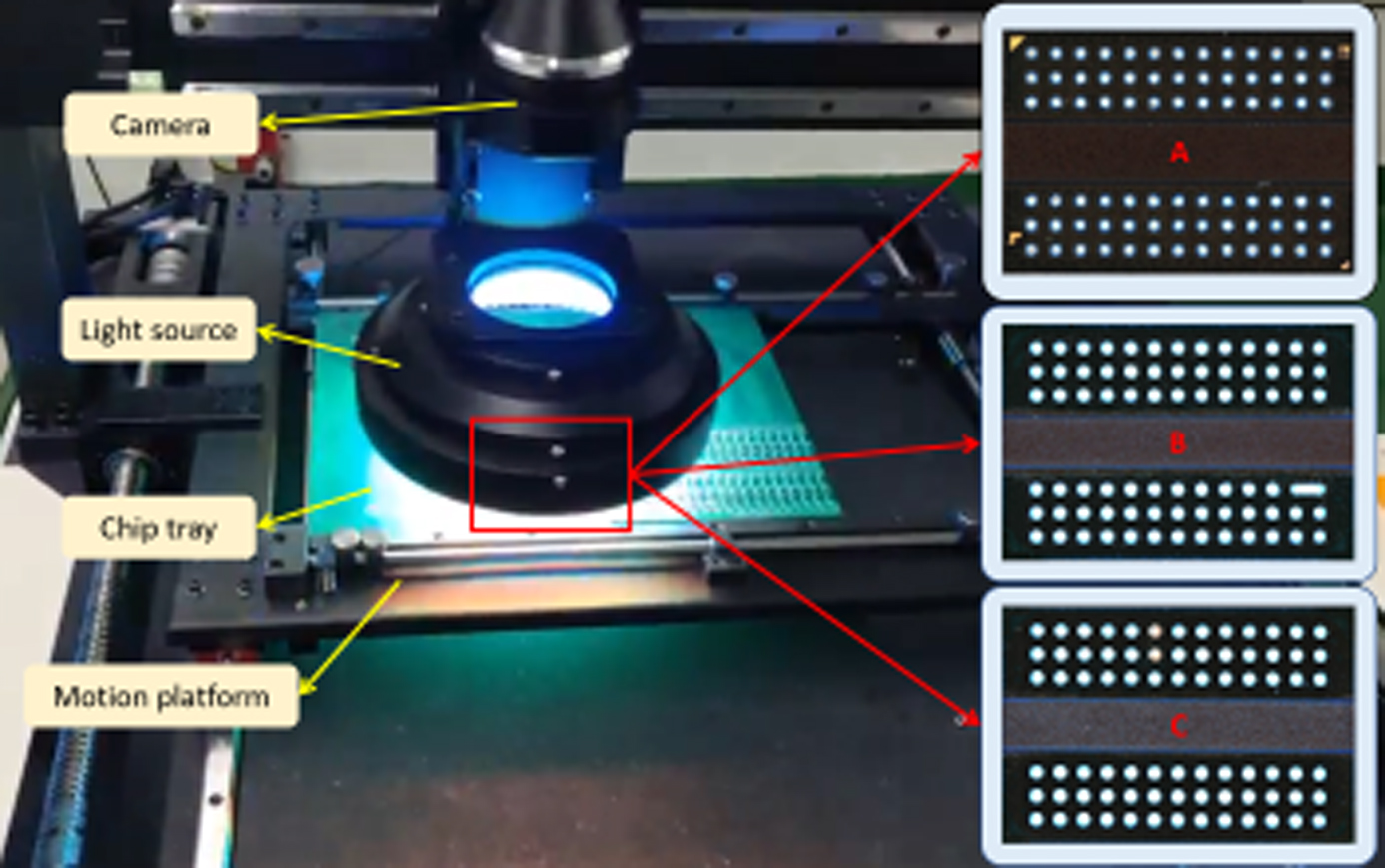

Current methods for detecting solder ball defects in ball grid array (BGA) packaged chips suffer from slow detection speed, low efficiency, and poor accuracy. To address these issues, we designed a detection algorithm for BGA solder ball defects that exploits the specific characteristics of these defects and the advantages of deep learning. Building on the YOLOv8 network model, we made three adaptive improvements. First, we introduced an adaptive weighted downsampling method to boost detection accuracy and make the model more lightweight. Second, we proposed an efficient multi-scale convolution method to improve image feature extraction. Finally, to improve convergence speed and regression accuracy, we replaced the Complete Intersection over Union (CIoU) loss function with Minimum Points Distance Intersection over Union (MPDIoU). In a series of controlled experiments, the enhanced model showed significant improvements over the original network: a 1.7% increase in mean average precision, a 1.5% increase in precision, a 0.9% increase in recall, 4.3 M fewer parameters, and 0.4 G fewer floating-point operations (GFLOPs). In comparative experiments, our algorithm also demonstrated better overall performance than other networks, effectively achieving the goal of solder ball defect detection.
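
For reference, MPDIoU as we understand its original formulation penalizes the squared distance between the top-left corners (`d1`) and between the bottom-right corners (`d2`) of the predicted and ground-truth boxes, normalized by the squared diagonal of the input image: `MPDIoU = IoU - d1^2 / (w^2 + h^2) - d2^2 / (w^2 + h^2)`, with loss `1 - MPDIoU`. The sketch below computes this for a single box pair using plain floats. It is not the authors' implementation; a training version would operate on batched tensors so gradients can flow, and the name `mpdiou_loss` is hypothetical.

```python
from typing import Tuple

# (x1, y1, x2, y2): top-left and bottom-right corners in pixels
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def mpdiou_loss(pred: Box, gt: Box, img_w: float, img_h: float) -> float:
    """Hypothetical scalar MPDIoU loss: 1 - (IoU - d1^2/norm - d2^2/norm)."""
    # Squared distance between the top-left corners
    d1_sq = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    # Squared distance between the bottom-right corners
    d2_sq = (pred[2] - gt[2]) ** 2 + (pred[3] - gt[3]) ** 2
    # Normalize by the squared diagonal of the input image
    norm = img_w ** 2 + img_h ** 2
    mpdiou = box_iou(pred, gt) - d1_sq / norm - d2_sq / norm
    return 1.0 - mpdiou
```

Because the corner distances keep growing as the boxes move apart, the loss still changes when IoU is zero (for example, two disjoint 10-pixel boxes 20 pixels apart in a 100 x 100 image give a loss of 1.04), which is one reason corner-distance terms can help regression converge.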

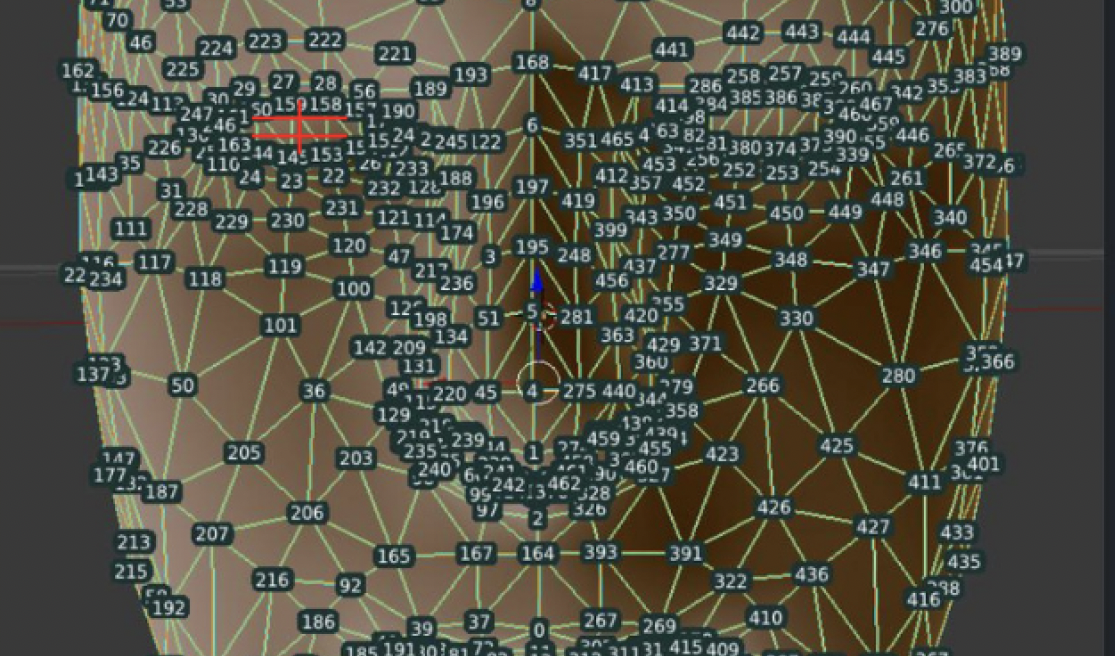

The Driver Monitoring System (DMS) presented in this work aims to enhance road safety by continuously monitoring a driver's behavior and emotional state during vehicle operation. The system uses computer vision and machine learning techniques to analyze the driver's face and actions, providing real-time alerts to mitigate potential hazards. Its primary components are gaze detection, emotion analysis, and phone usage detection. The system tracks the driver's eye movements to detect drowsiness and distraction from blink patterns and eye-closure durations. It employs deep learning models to analyze the driver's facial expressions and extract the dominant emotional state; when emotional distress is detected, the system offers calming verbal prompts to help the driver stay composed. Detected phone usage triggers visual and auditory alerts to discourage distracted driving. Together, these features form a comprehensive driver monitoring solution that helps prevent accidents caused by drowsiness, distraction, and emotional instability. The system's effectiveness is demonstrated through real-time test scenarios, and its potential impact on road safety is discussed.
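
The abstract does not say how blink patterns and eye-closure durations are measured. One common approach, assumed here rather than taken from this work, is the eye aspect ratio (EAR) of Soukupová and Čech (2016), computed from six eye landmarks supplied by a face-landmark detector: the ratio drops toward zero as the eyelids close. In the sketch below, a closure lasting only a few frames counts as a blink, while one exceeding a time limit is flagged as drowsiness. The threshold values and the `DrowsinessMonitor` class are hypothetical illustrations.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six landmarks p1..p6: p1/p4 are the eye corners,
    (p2, p6) and (p3, p5) are upper/lower eyelid pairs."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


class DrowsinessMonitor:
    """Hypothetical per-frame tracker of blinks and prolonged eye closure."""

    def __init__(self, ear_threshold: float = 0.2, fps: float = 30.0,
                 drowsy_seconds: float = 1.5) -> None:
        # Assumed threshold; would need tuning per camera and driver
        self.ear_threshold = ear_threshold
        # Consecutive closed frames that count as drowsiness
        self.drowsy_frames = max(1, round(fps * drowsy_seconds))
        self.closed_frames = 0
        self.blinks = 0

    def update(self, ear: float) -> str:
        """Feed one frame's EAR; returns 'open', 'closed', or 'drowsy'."""
        if ear < self.ear_threshold:
            self.closed_frames += 1
            if self.closed_frames >= self.drowsy_frames:
                return "drowsy"
            return "closed"
        # Eye reopened: a short closure is a blink, a long one is not
        if 0 < self.closed_frames < self.drowsy_frames:
            self.blinks += 1
        self.closed_frames = 0
        return "open"
```

Working in frame counts keeps the per-frame update constant-time; converting the time limit through `fps` is what ties the closure duration to the camera's frame rate, so the same monitor can flag both frequent blinking (via `blinks` over a time window) and sustained closure.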