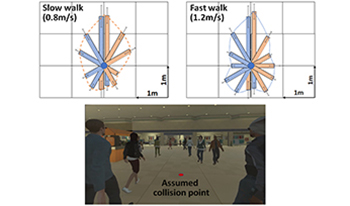

Avoiding person-to-person collisions is critical for patients with visual field loss. Any intervention that claims to improve the safety of these patients should demonstrate its efficacy empirically. To design a VR mobility testing platform that presents multiple pedestrians, the distinction between colliding and non-colliding pedestrians must be clearly defined. We measured the collision envelopes (CE; an egocentric boundary that separates collision from non-collision) of nine normally sighted subjects and found that the CE changes with the approaching pedestrian's bearing angle and speed. For person-to-person collision events in the VR mobility testing platform, non-colliding pedestrians should therefore not invade the CE.
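To make the colliding/non-colliding distinction concrete, the sketch below classifies an approaching pedestrian by computing the closest point of approach under a constant-velocity assumption and comparing the predicted miss distance with a CE radius looked up from bearing angle and speed. The constant-velocity model, the coordinate frame (walker at the origin, heading along +y), the use of relative speed, and the `radius_fn` lookup are all assumptions for illustration. The measured CE need not be circular, and the radius in the usage lines is a placeholder, not a value from the study.

```python
import math
from typing import Callable, Tuple

Vec = Tuple[float, float]


def closest_approach(rel_pos: Vec, rel_vel: Vec) -> Tuple[float, float]:
    """Time and distance of closest approach, assuming both walkers keep
    constant velocity. rel_pos / rel_vel are the pedestrian's position and
    velocity relative to the walker (metres, metres per second)."""
    px, py = rel_pos
    vx, vy = rel_vel
    v2 = vx * vx + vy * vy
    if v2 == 0.0:  # no relative motion: the gap never changes
        return 0.0, math.hypot(px, py)
    # Minimise |p + v t| over t >= 0; a receding pedestrian gives t = 0.
    t = max(0.0, -(px * vx + py * vy) / v2)
    return t, math.hypot(px + vx * t, py + vy * t)


def is_colliding(rel_pos: Vec, rel_vel: Vec,
                 radius_fn: Callable[[float, float], float]) -> bool:
    """Hypothetical classifier: the pedestrian counts as 'colliding' if its
    predicted miss distance falls inside the CE radius for its bearing and
    relative speed. radius_fn stands in for a measured envelope."""
    # Bearing in degrees: 0 = straight ahead (+y), positive = to the right (+x).
    bearing = math.degrees(math.atan2(rel_pos[0], rel_pos[1]))
    speed = math.hypot(*rel_vel)
    _, miss = closest_approach(rel_pos, rel_vel)
    return miss < radius_fn(bearing, speed)


if __name__ == "__main__":
    placeholder_ce = lambda bearing, speed: 1.0  # NOT a measured value
    # Pedestrian 10 m ahead and 2 m to the right, approaching at 1 m/s.
    print(closest_approach((2.0, 10.0), (0.0, -1.0)))              # (10.0, 2.0)
    print(is_colliding((2.0, 10.0), (0.0, -1.0), placeholder_ce))  # False
```

In a testing platform, `radius_fn` would be replaced by the measured envelope (for example, interpolated over bearing and speed), and non-colliding pedestrians would be scripted so that their miss distance stays outside it.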

Background: The current gold standard for clinical training relies on actual linear accelerator (LINAC) machines, recordings of actual procedures, video clips, and word of mouth. These practices lack immersion, convenience, and flexibility; they are not always interactive and may not represent actual use-case scenarios. Immersive experiences in which trainees interact with LINAC machines in a safe, controlled virtual environment are therefore highly desirable. Real-world radiation treatment training often requires a radiation bunker and actual equipment, which are not always accessible in the clinical setting. Oncologists' time is extremely precious, and radiation bunkers are hard to come by because of heavy demand in specialty clinics. Moreover, the scarcity of LINACs, due to their multi-million-dollar capital cost, makes access even harder. To address these logistical, economic, and resource issues, virtual reality (VR) radiation treatment training offers a solution that will also improve clinical outcomes by preparing oncologists in a virtual environment anywhere and anytime. We introduce a VR space in which radiation trainees can practice and refine their radiation treatment skills anywhere and anytime, and which also serves as an immersive space for collaborative learning and knowledge sharing.
Methods: Trainees were invited to the VR space, completed a pre-assessment questionnaire, were guided through a series of videos and digital content, and then completed a randomized post-assessment questionnaire.
Findings: Trainees (n=32) reported significant improvement in their learning. A number of trainees were new to VR technology and also new to Radiation Oncology. In future work, we can compare this approach with traditional training methods.
Interpretation: Our research revealed that incorporating virtual reality (VR) as a tool for elucidating Radiation Oncology concepts resulted in an immediate and notable enhancement in trainees' proficiency. Moreover, those who were educated through VR demonstrated a more profound understanding of Radiation Oncology, including a wider array of potential treatment strategies, all within a user-friendly setting.
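The abstract reports a pre/post improvement but does not state which statistical test was used. One common way to check such a within-subject change is a paired t statistic on per-trainee score differences; the sketch below computes it with the standard library only. It is an illustrative assumption, not the study's analysis, and the scores in the usage lines are made up.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def paired_t(pre: Sequence[float], post: Sequence[float]) -> float:
    """Paired t statistic for post - pre differences (df = n - 1).
    Assumes one pre and one post score per trainee, in the same order."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need matched pre/post scores for at least 2 trainees")
    diffs = [b - a for a, b in zip(pre, post)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean(diffs) / (sd / math.sqrt(len(diffs)))


if __name__ == "__main__":
    # Hypothetical questionnaire scores (out of 10), not study data.
    pre = [4, 5, 6, 3, 5]
    post = [6, 7, 7, 5, 8]
    print(round(paired_t(pre, post), 3))
```

The statistic would then be compared against a t distribution with n - 1 degrees of freedom; `scipy.stats.ttest_rel` computes the same statistic together with its p-value.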

There is a need to prepare for emergencies such as active shooter events. Because we cannot predict when emergencies will occur, emergency response training drills and exercises are necessary. There has been progress in understanding human behavior, unpredictability, human motion synthesis, crowd dynamics, and their relationships with active shooter events, but challenges remain. This paper presents an immersive security personnel training module for active shooter events in an indoor building. We have created an experimental platform for conducting active shooter training drills that gives a fully immersive feel of the situation and allows users to perform virtual evacuation drills. The security personnel training module incorporates four sub-modules: 1) Situational Assessment Module, 2) Individual Officer Intervention Module, 3) Team Response Module, and 4) Rescue Task Force Module. We developed the immersive virtual reality training module using an Oculus headset to support course-of-action planning, visualization, and situational awareness for active shooter events, as shown in Fig. 1. In the module, security personnel first gather information about the emergency situation inside the building. The dispatched officer verifies the active shooter situation in the building. Security personnel should then find a safe zone in the building and secure the people in that area, and should also determine the number and location of persons in possible jeopardy. Upon completing the initial assessment, the first security officer on scene shall advise communications and request resources as deemed necessary. This assessment determines whether to take immediate action alone, act with another officer, or wait until additional resources are available. After gathering the information, the officer must report it to their supervising officer through a communication device.
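The assessment sequence described above can be encoded so that the simulator checks whether a trainee performed the steps in order. The sketch below is hypothetical: the step names, the in-order subsequence check, and the idea of scoring a trainee's action log are illustrative assumptions rather than the module's actual implementation. The criteria for choosing among the three response options are left out because the text does not specify them.

```python
from enum import Enum, auto
from typing import Iterable, Optional


class Step(Enum):
    """Initial-assessment steps, in the order given in the text."""
    VERIFY_SITUATION = auto()
    SECURE_SAFE_ZONE = auto()
    LOCATE_PERSONS_AT_RISK = auto()
    ADVISE_COMMUNICATIONS = auto()  # includes requesting resources
    DECIDE_RESPONSE = auto()        # act alone, with another officer, or wait
    REPORT_TO_OFFICER = auto()


REQUIRED_ORDER = list(Step)  # Enum iteration preserves definition order


def first_missed_step(log: Iterable[Step]) -> Optional[Step]:
    """Return the first required step the trainee did not complete in order,
    or None if the log contains every step as an in-order subsequence.
    Extra or repeated actions in between are tolerated."""
    expected = 0
    for action in log:
        if expected < len(REQUIRED_ORDER) and action == REQUIRED_ORDER[expected]:
            expected += 1
    return REQUIRED_ORDER[expected] if expected < len(REQUIRED_ORDER) else None
```

For example, a log that jumps from verification straight to advising communications would be flagged at `Step.SECURE_SAFE_ZONE`.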

The method of loci (memory palace technique) is a learning strategy that uses visualizations of spatial environments to enhance memory. One particularly popular use of the method of loci is language learning, where it can support long-term retention of vocabulary by letting users associate locations and other spatial information with particular words or concepts, thereby using spatial memory to assist memory typically associated with language. Augmented reality (AR) and virtual reality (VR) have shown potential to provide even better memory enhancement because of their superior visualization capabilities. However, a direct comparison of the two technologies in terms of language-learning enhancement has not yet been investigated. In this presentation, we report the results of a study designed to compare AR and VR when using the method of loci to learn vocabulary in a second language.
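As a rough illustration of how a memory palace might be represented in an AR or VR study, the sketch below assigns vocabulary pairs to an ordered route of loci and scores location-cued recall. The `Locus` structure, the coordinates, the example words, and the exact-match scoring rule are hypothetical choices for illustration, not the study's design.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Pair = Tuple[str, str]  # (second-language word, native-language meaning)


@dataclass(frozen=True)
class Locus:
    name: str
    position: Tuple[float, float, float]  # scene coordinates (hypothetical)


def build_palace(route: List[Locus], vocabulary: List[Pair]) -> Dict[str, Pair]:
    """Place one vocabulary pair at each locus, following the route order."""
    if len(vocabulary) > len(route):
        raise ValueError("not enough loci for the vocabulary list")
    return {locus.name: pair for locus, pair in zip(route, vocabulary)}


def cued_recall_score(palace: Dict[str, Pair], responses: Dict[str, str]) -> float:
    """Fraction of loci for which the learner recalled the second-language
    word when cued with the location (case-insensitive exact match)."""
    correct = sum(
        1 for name, (word, _meaning) in palace.items()
        if responses.get(name, "").strip().lower() == word.lower()
    )
    return correct / len(palace) if palace else 0.0


if __name__ == "__main__":
    route = [Locus("front door", (0.0, 0.0, 0.0)), Locus("kitchen table", (2.0, 0.0, 1.0))]
    palace = build_palace(route, [("perro", "dog"), ("mesa", "table")])
    print(cued_recall_score(palace, {"front door": "Perro", "kitchen table": "silla"}))  # 0.5
```

The same palace structure could be rendered as anchored objects in either AR or VR, which keeps the vocabulary-to-location mapping identical across the two conditions being compared.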

Visualization explores the quantitative content of data with human intuition and plays an integral part in the data mining process. For big data, various analysis methods and approaches are used to find inherent patterns and relationships. However, human-in-the-loop intervention is sometimes needed to find new connections and relationships that existing algorithms cannot provide. Immersive virtual reality (VR) provides a “sense of presence” and enables the discovery of new connections and relationships through visual inspection. The goal of this work is to investigate the merging of immersive VR and data science for advanced visualization. VR and immersive visualization involve an interplay between novel technology and human perception to generate insight into both. We propose using immersive VR to explore the higher dimensionality and abstraction associated with big data. VR can use an abstract representation of high-dimensional data in support of advanced scientific visualization. This paper demonstrates the data visualization tool with a real-time feed of Baltimore crime data in immersive, non-immersive, and mobile environments. We have combined virtual reality interaction techniques and 3D geographical information representation to enhance the visualization of situational impacts, as shown in Figure 1. The data visualization tool is developed using the Unity gaming engine. We present bar charts that users manipulate with the Oculus controllers, combining the bar chart visualization with a zooming feature that lets users view details more effectively. The Oculus headset allows users to navigate and experience the environment with full immersion, and the Oculus Touch controllers give haptic feedback when users handle objects such as a laser pointer.
We are interested in extending our VR data visualization tools to enable collaborative, multi-user data exploration and to study the impact of VR on collaborative analytics tasks in comparison with traditional 2D visualizations. The benefits of our proposed work include a data visualization tool for immersive visualization and visual analysis. We also suggest key features that immersive analytics can provide for situational awareness and human-in-the-loop decision making.
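The bar-chart view with zooming can be thought of as two small steps: aggregate incoming crime records into per-category counts scaled to a maximum bar height, then scale the chart by a clamped zoom factor driven by the controller. The sketch below assumes each record is a dictionary with a category field; the field name `description`, the height units, and the zoom limits are hypothetical, and the actual tool is built in Unity rather than Python.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping


def bar_heights(records: Iterable[Mapping[str, str]], key: str = "description",
                max_height: float = 2.0) -> Dict[str, float]:
    """Count records per category and scale so the tallest bar is
    max_height (scene units). Records missing the key are skipped."""
    counts = Counter(r[key] for r in records if key in r)
    if not counts:
        return {}
    peak = max(counts.values())
    return {category: max_height * n / peak for category, n in counts.items()}


def zoom(heights: Mapping[str, float], factor: float,
         min_factor: float = 0.5, max_factor: float = 4.0) -> Dict[str, float]:
    """Apply a controller-driven zoom, clamped so the chart stays usable."""
    f = min(max(factor, min_factor), max_factor)
    return {category: h * f for category, h in heights.items()}
```

With a real-time feed, `bar_heights` would be recomputed as new records arrive, while the zoom factor is updated from controller input each frame.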

Realistic writing and sketching on virtual surfaces in virtual reality (VR) enhance the potential uses of VR in production and art creation. To achieve a writing experience similar to that in the real world, the VR system needs to be sensitive to the pressure of the user's stylus on the virtual plane, to create the effect of different pen strokes and improve the user experience. Typical 6 degree-of-freedom (DoF) VR controllers do not measure force or pressure on surfaces because they are held in mid-air. We propose a new method, VirtualForce, that calculates the force based on the difference between the user's physical hand position and their hand avatar in VR. Our method does not require any specialized hardware. Furthermore, we explore the potential of our method to improve the creation of VR art, and we present several ways in which VirtualForce can greatly enhance the accuracy of drawing and writing on virtual surfaces in virtual reality.
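The core idea, deriving force from the gap between the tracked hand and its surface-constrained avatar, can be sketched as follows. The text does not give the force model; this sketch assumes a linear spring (force proportional to how far the physical hand has passed through the virtual plane), and the stiffness value and the force-to-stroke-width mapping are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def virtual_force(hand: Vec3, plane_point: Vec3, plane_normal: Vec3,
                  stiffness: float) -> Tuple[float, Vec3]:
    """Return (force, avatar_position). The normal points out of the surface,
    toward the user. If the physical hand has pushed through the plane, the
    avatar is held on the plane and the force grows with the hand-avatar gap
    (linear-spring assumption)."""
    n_len = math.sqrt(_dot(plane_normal, plane_normal))
    n = tuple(c / n_len for c in plane_normal)
    offset = tuple(h - p for h, p in zip(hand, plane_point))
    depth = -_dot(offset, n)  # > 0 when the hand is behind the surface
    if depth <= 0.0:
        return 0.0, hand  # not touching: the avatar follows the hand
    avatar = tuple(h + depth * c for h, c in zip(hand, n))
    return stiffness * depth, avatar  # |hand - avatar| == depth


def stroke_width(force: float, max_force: float = 10.0,
                 min_width: float = 1.0, max_width: float = 5.0) -> float:
    """Map force to a pen-stroke width, saturating at max_force."""
    t = min(max(force / max_force, 0.0), 1.0)
    return min_width + (max_width - min_width) * t
```

Under these assumptions, a hand tracked 1 cm behind a horizontal canvas with a stiffness of 500 N/m yields about 5 N, which this mapping turns into a mid-width stroke.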

Active shooter events are not emergencies that can be reasonably anticipated. However, these events occur more often than is commonly assumed, and there is a dire need for effective evacuation plans that can increase the likelihood of saving lives and reducing casualties in an active shooting incident. This has raised major concerns about the lack of tools that allow robust predictions of realistic human movement and the limited understanding of occupant interactions in designated environments. Clearly, it is impractical to carry out live experiments in which thousands of people are evacuated from buildings under every possible emergency condition. There has been progress in understanding human movement, human motion synthesis, crowd dynamics, indoor environments, and their relationships with active shooter events, but challenges remain. This paper presents an immersive virtual reality (VR) experimental setup for conducting evacuation experiments and virtual evacuation drills in response to extreme events that affect the actions of occupants. We present two ways of controlling crowd behavior: first, by defining rules for agents, or NPCs (non-player characters); second, by letting users act as avatars, or PCs (player characters), who navigate the VR environment as autonomous agents in real time using a keyboard or joystick along with an immersive VR headset. The results will enable scientists and engineers to develop more realistic models of the systems they are designing and to gain greater insight into their eventual behavior without having to build costly prototypes.
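The first control mode, rule-based NPCs, can be illustrated with two simple steering rules applied every frame: head for the nearest exit, and push away from neighbours that are inside a personal-space radius. This is a generic sketch of rule-based crowd steering under assumed parameters (walking speed, time step, personal-space radius, repulsion gain), not the rule set used in the described setup.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def step_agents(positions: Sequence[Point], exits: Sequence[Point],
                speed: float = 1.3, dt: float = 0.1,
                personal_space: float = 0.5, repulsion: float = 0.5) -> List[Point]:
    """Advance every NPC by one time step using two rules:
    1) move toward the nearest exit at up to `speed` m/s without overshooting;
    2) add a push away from each neighbour closer than `personal_space`.
    All agents are updated from the same (old) positions.
    Parameter values are illustrative assumptions."""
    updated = []
    for i, (x, y) in enumerate(positions):
        ex, ey = min(exits, key=lambda e: math.hypot(e[0] - x, e[1] - y))
        dx, dy = ex - x, ey - y
        dist = math.hypot(dx, dy)
        vx = vy = 0.0
        if dist > 1e-9:
            goal_speed = min(speed, dist / dt)  # slow down to stop at the exit
            vx, vy = goal_speed * dx / dist, goal_speed * dy / dist
        for j, (ox, oy) in enumerate(positions):
            if j == i:
                continue
            sx, sy = x - ox, y - oy
            d = math.hypot(sx, sy)
            if 0.0 < d < personal_space:
                push = repulsion * (personal_space - d) / d
                vx, vy = vx + push * sx, vy + push * sy
        updated.append((x + vx * dt, y + vy * dt))
    return updated
```

User-controlled avatars (the second mode) would simply bypass these rules and take their velocity from keyboard or joystick input, while still counting as neighbours for the NPCs' repulsion rule.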

Purpose: Virtual reality (VR) headsets are becoming increasingly popular and are now standard attractions in many places such as museums and fairs. Although the issues of VR-induced cybersickness and eye strain are well known, as are the associated risk factors, most studies have focused on reducing or assessing this discomfort rather than predicting it. Since the negative experience of a few users can strongly affect the publicity of a product or event, the aim of this study was to develop a simple questionnaire that helps users rapidly and accurately self-assess their personal risk of experiencing discomfort before using VR.
Methods: 224 subjects (age 30.44 ± 2.62 y.o.) participated in the study. The VR experience lasted 30 minutes, and 4 users participated simultaneously in each session. The experience used the HTC Vive and placed users at the bottom of the ocean, observing their surroundings. Users could see the other participants' avatars, move within a 12 m² area, and interact with the environment. The experience was designed to produce as little discomfort as possible. Participants filled out a questionnaire designed to assess their susceptibility to cybersickness, which included 11 questions about their personal information (age, gender, experience with VR, etc.), binocular vision, need for glasses and use of glasses during the VR session, tendency to suffer from other conditions (such as motion sickness and migraines), and level of fatigue before the experiment. The questionnaire also contained three questions in which subjects self-assessed the impact of the session on their level of visual fatigue, headache, and nausea; the sum of these three responses produced the subjective estimate of “VR discomfort” (VRD). A 5-point Likert scale was used for the questions where possible. The data of 29 participants were excluded from the analysis due to incomplete data.
Results: The correlation analysis (p-values FDR corrected) showed that responses to five questions correlated with VRD: sex (r = -.19, p = .02), susceptibility to headaches and migraines (r = -.25, p = .002), susceptibility to motion sickness (r = -.18, p = .02), fatigue or sickness before the session (r = -.26, p < .002), and stereoscopic vision issues (r = .23, p = .004). A linear regression model of discomfort with these five questions as predictors (F(5, 194) = 9.19, p < 0.001, R² = 0.19) showed that only the level of fatigue (beta = .53, p < .001) reached statistical significance.
Conclusion: Even though answers to five questions were found to correlate with VR-induced discomfort, linear regression showed that only one of them (the level of fatigue) was useful in predicting the level of discomfort. The results suggest that a tool for predicting VR-induced discomfort can benefit from a combination of subjective and objective measures.
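Two computations in the Methods and Results can be made explicit: the VRD score is the sum of the three post-session items (visual fatigue, headache, nausea), and the reported p-values were FDR corrected. The abstract does not name the FDR procedure; the sketch below assumes the widely used Benjamini-Hochberg adjustment, and it assumes the three items are coded 1 to 5. The input values in the usage note are illustrative, not the study's uncorrected p-values.

```python
from typing import List, Sequence


def vr_discomfort(visual_fatigue: int, headache: int, nausea: int) -> int:
    """VRD = sum of three 5-point Likert items (assumed coded 1..5),
    so it ranges from 3 to 15."""
    items = (visual_fatigue, headache, nausea)
    if any(not 1 <= v <= 5 for v in items):
        raise ValueError("Likert responses must be in 1..5")
    return sum(items)


def benjamini_hochberg(pvalues: Sequence[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values, returned in the input order:
    adjusted p_(k) = min over j >= k of p_(j) * m / j, capped at 1."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest p-value down
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

For example, `benjamini_hochberg([0.01, 0.04, 0.03])` gives approximately `[0.03, 0.04, 0.04]`. In practice, `statsmodels.stats.multitest.multipletests(..., method="fdr_bh")` performs the same adjustment.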

Healthcare practitioners, social workers, and care coordinators must work together seamlessly, safely, and efficiently. Within the context of the COVID-19 pandemic, understanding relevant evidence-based and best practices, as well as identifying barriers to and facilitators of care for vulnerable populations, is of crucial importance. A current gap is the lack of specific training that prepares these specialized personnel to facilitate care for socially vulnerable populations, particularly racial and ethnic minorities. With continuing advancements in technology, VR-based training can incorporate real-life experience and create a "sense of presence" in the environment. Furthermore, immersive virtual environments offer considerable advantages over traditional training exercises, such as reduced time and cost for exploring different what-if scenarios and opportunities for more frequent practice. This paper proposes the development of Virtual Reality Instructional (VRI) training modules geared toward COVID-19 testing. The VRI modules are developed for immersive, non-immersive, and mobile environments. This paper describes the development and testing of the VRI modules using the Unity gaming engine. These VRI modules are developed to help increase safety preparedness and mitigate social-distancing-related risks for safety management.

Color deficiency tests are well known all over the world. However, there are no applications that attempt to simulate these tests in virtual reality with full color accuracy using spectral color computing. In this work, we review the VR tools available on the market that simulate the experience of users with color vision deficiencies (CVD), as well as VR tools that detect CVD. A description of these tools is provided, and a new proposal is presented, developed using the Unity game engine and an HTC Vive as the head-mounted display (HMD). The objective of this work is to assess the ability of normal and color-defective observers to discriminate color by means of a color arrangement test in a virtual reality environment. The virtual environment allows observers to perform a virtual version of the Farnsworth-Munsell 100 Hue (FM 100) color arrangement test. To test the effectiveness of the virtual reality test, experiments have been carried out with real users, and their results are presented in this paper.
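Results of the FM 100 test are usually summarized by a total error score (TES). A common scoring scheme gives each cap an error score equal to the sum of the differences between its number and the numbers of its two neighbours in the observer's arrangement, minus 2, so a perfect arrangement scores 0. The sketch below applies that scheme to all 85 caps treated as one closed circle (cap 85 adjacent to cap 1). Treating the trays as a single circle and measuring cap differences as circular distances are simplifying assumptions of this sketch, not details from the text.

```python
from typing import List, Sequence

N_CAPS = 85  # FM 100 Hue: 85 numbered caps around the hue circle


def cap_distance(a: int, b: int, n: int = N_CAPS) -> int:
    """Difference between two cap numbers, wrapping so that cap n and
    cap 1 are neighbours (simplifying assumption of this sketch)."""
    d = abs(a - b) % n
    return min(d, n - d)


def cap_error_scores(arrangement: Sequence[int]) -> List[int]:
    """Per-cap error: distance to each neighbour in the observer's circular
    arrangement, minus 2 (so a correctly placed cap scores 0)."""
    n = len(arrangement)
    if sorted(arrangement) != list(range(1, n + 1)):
        raise ValueError("arrangement must be a permutation of caps 1..n")
    scores = []
    for k, cap in enumerate(arrangement):
        prev_cap = arrangement[k - 1]        # k - 1 == -1 wraps to the last cap
        next_cap = arrangement[(k + 1) % n]
        scores.append(cap_distance(cap, prev_cap, n) + cap_distance(cap, next_cap, n) - 2)
    return scores


def total_error_score(arrangement: Sequence[int]) -> int:
    """TES = sum of the per-cap error scores."""
    return sum(cap_error_scores(arrangement))
```

For a perfect arrangement the TES is 0; swapping caps 2 and 3 raises it to 4, because caps 1, 2, 3, and 4 each pick up one unit of error. A VR implementation would read the arrangement from the order in which the observer places the virtual caps.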