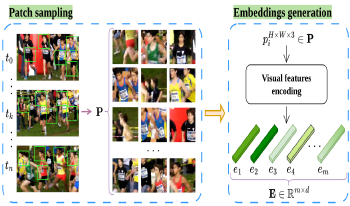

This paper proposes a novel frame selection technique based on embedding similarity to optimize video quality assessment (VQA). Using high-dimensional feature embeddings extracted from deep neural networks (ResNet-50, VGG-16, and CLIP), we introduce a similarity-preserving approach that prioritizes perceptually relevant frames while reducing redundancy. The proposed method is evaluated on two datasets, CVD2014 and KoNViD-1k, and performs robustly across synthetic and real-world distortions. Results show that the proposed approach outperforms state-of-the-art methods, particularly on diverse, in-the-wild video content such as that of KoNViD-1k. This work highlights the importance of embedding-driven frame selection for improving the accuracy and efficiency of VQA methods.
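The abstract does not spell out the selection rule. As a minimal sketch, assuming per-frame embedding vectors have already been extracted (e.g., by ResNet-50, VGG-16, or CLIP), a greedy redundancy filter keeps a frame only if its cosine similarity to every frame kept so far is below a threshold. The function names and the 0.9 threshold are hypothetical, and this is not presented as the paper's algorithm.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_frames(embeddings, threshold=0.9):
    """Hypothetical greedy filter: return indices of frames whose embedding is
    less than `threshold` cosine-similar to every previously kept frame."""
    kept = []
    for i, emb in enumerate(embeddings):
        # Skip the frame if it nearly duplicates any frame already kept.
        if all(cosine_similarity(emb, embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept


# Toy 2-D "embeddings": frame 1 nearly duplicates frame 0.
frames = [[1.0, 0.0], [0.99, 0.01], [0.0, 1.0], [0.7, 0.7]]
print(select_frames(frames))  # [0, 2, 3]
```

Lowering `threshold` keeps fewer, more dissimilar frames. The pairwise check against all kept frames is quadratic, which is fine for short clips but would likely need a sliding window or an approximate-nearest-neighbour index for long videos.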

We propose a continuous blind/no-reference video quality assessment (NR-VQA) algorithm based on features extracted from the bitstream, i.e., without decoding the video. The resulting algorithm requires minimal training and uses a simple multi-layer perceptron for score prediction. Because it skips decoding, the algorithm is computationally inexpensive. To assess its performance, both the Pearson Correlation Coefficient (PCC) and the Spearman Rank-Order Correlation Coefficient (SROCC) are computed between the predicted values and the subjective quality scores of two databases. The proposed approach is shown to correlate highly with human perception of video quality.
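A minimal sketch of the two evaluation metrics, assuming predictions and subjective scores are plain lists of equal length. PCC measures linear agreement; SROCC is PCC computed on ranks (ties receive their average rank), so it only rewards a monotonic relationship. The helper names are illustrative; in practice `scipy.stats.pearsonr` and `scipy.stats.spearmanr` provide the same quantities.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(sxx * syy)


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def srocc(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


predicted = [1.0, 2.0, 3.0, 4.0]
mos = [1.0, 4.0, 9.0, 100.0]
print(srocc(predicted, mos))  # 1.0, the relation is monotonic
print(pearson(predicted, mos) < 1.0)  # True, the relation is not linear
```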

The Video Multimethod Assessment Fusion (VMAF) method, proposed by Netflix, automatically estimates the perceptual quality of each frame of a video sequence. By default, the per-frame scores are then averaged with the arithmetic mean to estimate the overall Quality of Experience (QoE) of the sequence. In this paper, we validate the hypothesis that the arithmetic mean conceals bad-quality frames, leading to an overestimation of the delivered quality. We also show that an appropriately parametrized Minkowski mean closely approximates the subjectively measured QoE, yielding better Spearman Rank Correlation Coefficient (SRCC), Pearson Correlation Coefficient (PCC), and Root-Mean-Square Error (RMSE) scores than the arithmetic mean.
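The Minkowski (power) mean of per-frame scores $s_1, \dots, s_N$ is $M_p = \left(\frac{1}{N}\sum_{i=1}^{N} s_i^{\,p}\right)^{1/p}$. With $p = 1$ it reduces to the arithmetic mean, and for $p < 1$ it is never larger than the arithmetic mean, so a few badly degraded frames pull the pooled score down. The sketch below assumes a list of per-frame VMAF scores; the exponent the paper found appropriate is not given here, so `p = 0.5` and the score values are purely illustrative.

```python
def minkowski_mean(scores, p):
    """Minkowski (power) mean ((1/N) * sum(s**p)) ** (1/p) of per-frame scores.
    p = 1 is the arithmetic mean; p < 1 weights low-quality frames more heavily."""
    if not scores:
        raise ValueError("scores must be non-empty")
    if p == 0:
        raise ValueError("p must be non-zero")
    if min(scores) < 0 or (p < 0 and min(scores) == 0):
        raise ValueError("scores must be non-negative (strictly positive for p < 0)")
    return (sum(s ** p for s in scores) / len(scores)) ** (1.0 / p)


# Hypothetical per-frame VMAF scores: nine good frames and one badly degraded frame.
vmaf = [90.0] * 9 + [20.0]
print(minkowski_mean(vmaf, 1))    # 83.0, the default arithmetic mean
print(minkowski_mean(vmaf, 0.5))  # about 80.7, pulled down by the bad frame
```

Choosing $p > 1$ would do the opposite and emphasize the best frames, which is why the direction and value of the exponent matter when pooling toward subjective QoE.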

Video Quality Assessment (VQA) is an essential topic in several industries, ranging from video streaming to camera manufacturing. In this paper, we present a novel method for No-Reference VQA. The framework is fast and does not require the extraction of hand-crafted features. We extract convolutional features from the C3D 3-D Convolutional Neural Network and feed them to a trained Support Vector Regressor (SVR) to obtain a VQA score. We also transform the input into different color spaces to generate more discriminative deep features. We extract features from several layers, with and without overlap, to find the configuration that gives the best VQA score. We test the proposed approach on the LIVE-Qualcomm dataset. An extensive evaluation of the perceptual quality prediction model yields a final Pearson correlation of 0.7749 ± 0.0884 with Mean Opinion Scores, showing that the model achieves good video quality prediction and outperforms other leading state-of-the-art VQA models.
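The abstract mentions extracting features with and without overlap. One plausible reading, assumed here, is overlap between the fixed-length clips fed to C3D, which takes 16-frame clips as input. The sketch below uses hypothetical helper names: it only computes clip boundaries and averages per-clip feature vectors into one video-level vector that could then be passed to an SVR (for example, scikit-learn's `sklearn.svm.SVR`). The C3D forward pass, the layer choice, and the color-space transforms are omitted.

```python
def clip_windows(num_frames, clip_len=16, stride=16):
    """Return (start, end) frame-index pairs for fixed-length clips.
    stride == clip_len gives non-overlapping clips; stride < clip_len gives overlap.
    Trailing frames that do not fill a whole clip are dropped."""
    if clip_len <= 0 or stride <= 0:
        raise ValueError("clip_len and stride must be positive")
    return [(s, s + clip_len) for s in range(0, num_frames - clip_len + 1, stride)]


def mean_pool(clip_features):
    """Average per-clip feature vectors into a single video-level feature vector."""
    n = len(clip_features)
    return [sum(column) / n for column in zip(*clip_features)]


print(clip_windows(40))            # [(0, 16), (16, 32)]
print(clip_windows(40, stride=8))  # [(0, 16), (8, 24), (16, 32), (24, 40)]
print(mean_pool([[1.0, 2.0], [3.0, 4.0]]))  # [2.0, 3.0]
```

Overlapping windows produce more clips per video, and therefore more feature vectors to pool, at a proportionally higher extraction cost.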

The appropriate characterization of test material, used in subjective evaluation tests and for benchmarking image and video processing algorithms and quality metrics, can be crucial for comparative studies that provide useful insights. This paper focuses on the characterization of 360-degree images. We discuss why a perceptual characterization of these signals must take into account both the geometry of the signal and the interactive nature of 360-degree content navigation. In particular, we show that computing classical indicators of spatial complexity, commonly used for 2D images, can lead to different conclusions depending on the geometrical domain used to represent the 360-degree signal. Finally, we propose new complexity measures based on the analysis of visual attention and content exploration patterns.
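To illustrate how the representation domain can change a classical indicator, the sketch below computes Spatial Information (SI), which ITU-T P.910 defines as the standard deviation of the Sobel-filtered frame, directly on an equirectangular image, and then again with each row weighted by the cosine of its latitude. That weighting is borrowed from WS-PSNR-style sphere weighting to offset the oversampling of polar rows; it is an assumed illustration, not the measure proposed in the paper. Images are plain lists of rows of grayscale values, and the function names are hypothetical.

```python
import math


def sobel_magnitude(img):
    """Sobel gradient magnitude for interior pixels; returns rows for y = 1..h-2."""
    h, w = len(img), len(img[0])
    rows = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            row.append(math.hypot(gx, gy))
        rows.append(row)
    return rows


def weighted_std(values, weights):
    """Population standard deviation with per-sample weights."""
    total = sum(weights)
    mean = sum(v * w for v, w in zip(values, weights)) / total
    var = sum(w * (v - mean) ** 2 for v, w in zip(values, weights)) / total
    return math.sqrt(var)


def spatial_information(img, sphere_weighted=False):
    """SI of one frame; optionally weight each row by cos(latitude) for ERP images."""
    h = len(img)
    values, weights = [], []
    for i, row in enumerate(sobel_magnitude(img)):
        y = i + 1  # row index in the original image
        latitude = (0.5 - (y + 0.5) / h) * math.pi  # row centre, in (-pi/2, pi/2)
        weight = math.cos(latitude) if sphere_weighted else 1.0
        values.extend(row)
        weights.extend([weight] * len(row))
    return weighted_std(values, weights)


# Texture confined to the rows near the top (north pole), flat grey elsewhere.
img = [[(255.0 if (x // 2) % 2 == 0 else 0.0) if y < 3 else 128.0 for x in range(8)]
       for y in range(8)]
print(spatial_information(img), spatial_information(img, sphere_weighted=True))
```

The two values differ because the planar computation counts polar pixels as fully as equatorial ones, even though they cover much less of the sphere.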