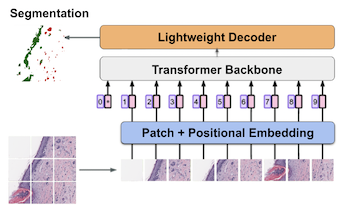

Prognosis for melanoma patients is traditionally determined with a tumor depth measurement called Breslow thickness. However, Breslow thickness does not account for the tumor's cross-sectional area, which is more useful for prognosis. We propose using segmentation methods to estimate the cross-sectional area of invasive melanoma in whole-slide images. First, we design a custom segmentation model from a transformer pretrained on breast cancer images and adapt it for melanoma segmentation. Second, we fine-tune a segmentation backbone pretrained on natural images. Our proposed models produce quantitatively superior results compared to previous approaches, and qualitatively better results as verified by a dermatologist.
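Once a model has produced a binary mask of invasive melanoma, turning it into a physical cross-sectional area is a matter of counting tumor pixels and scaling by the pixel area. The sketch below assumes a single 2-D mask predicted at one known resolution. The function name and the `microns_per_pixel` value are hypothetical illustrations, not the authors' pipeline; in practice the pixel size would come from the slide's metadata at the level the mask was predicted on.

```python
import numpy as np


def cross_sectional_area_mm2(mask: np.ndarray, microns_per_pixel: float) -> float:
    """Estimate tumor cross-sectional area (mm^2) from a binary segmentation mask.

    Hypothetical helper: `mask` is a 2-D array whose nonzero pixels are
    predicted invasive melanoma; `microns_per_pixel` is the physical pixel
    size at the resolution the mask was predicted at (assumed square pixels).
    """
    if mask.ndim != 2:
        raise ValueError("expected a 2-D mask")
    if microns_per_pixel <= 0:
        raise ValueError("microns_per_pixel must be positive")
    n_tumor_pixels = int(np.count_nonzero(mask))  # pixels labeled as tumor
    pixel_area_um2 = microns_per_pixel ** 2       # area covered by one pixel
    return n_tumor_pixels * pixel_area_um2 / 1e6  # 1 mm^2 = 1e6 um^2


# Usage: a synthetic 1000 x 1000 mask containing a 200 x 500-pixel tumor region,
# at a hypothetical 0.5 um/pixel: 100,000 px * 0.25 um^2 = 25,000 um^2.
mask = np.zeros((1000, 1000), dtype=bool)
mask[100:300, 200:700] = True
print(cross_sectional_area_mm2(mask, microns_per_pixel=0.5))  # 0.025
```

If the mask is predicted on a downsampled pyramid level, the pixel size grows by the downsample factor, so the same tumor yields the same area as long as the matching `microns_per_pixel` is passed in.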

Conventional image quality metrics (IQMs), such as PSNR and SSIM, are designed for perceptually uniform gamma-encoded pixel values and cannot be directly applied to perceptually non-uniform linear high-dynamic-range (HDR) colors. Similarly, most available datasets consist of standard-dynamic-range (SDR) images collected under standard, and possibly uncontrolled, viewing conditions. Popular pre-trained neural networks are likewise intended for SDR inputs, restricting their direct application to HDR content. At the same time, training HDR models from scratch is challenging because little HDR data is available. In this work, we explore more effective approaches for training deep learning-based models for image quality assessment (IQA) on HDR data. We leverage networks pre-trained on SDR data (source domain) and re-target these models to HDR (target domain) with additional fine-tuning and domain adaptation. We validate our methods on the available HDR IQA datasets, demonstrating that models trained with our combined recipe outperform previous baselines, converge much faster, and reliably generalize to HDR inputs.
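To make the first point concrete: PSNR computed directly on linear luminance treats an error of 1 cd/m² the same in a dark region as in a bright one, although the eye is far more sensitive to the dark case. A common workaround is to map linear HDR values through a perceptually motivated transfer function before computing the metric. The abstract does not say which encoding, if any, the authors use; the sketch below swaps in the SMPTE ST 2084 perceptual quantizer (PQ) as one standard choice, and assumes inputs are absolute luminance in cd/m² with a 10,000 cd/m² peak. This illustrates the problem only; it is not the authors' learned IQA method.

```python
import numpy as np

# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # PQ is defined up to 10,000 cd/m^2


def pq_encode(luminance_nits) -> np.ndarray:
    """Map absolute linear luminance (cd/m^2) to PQ code values in [0, 1]."""
    y = np.clip(np.asarray(luminance_nits, dtype=np.float64) / PEAK_NITS, 0.0, 1.0)
    y_m1 = np.power(y, M1)
    return np.power((C1 + C2 * y_m1) / (1.0 + C3 * y_m1), M2)


def psnr(ref, test, peak: float = 1.0) -> float:
    """Plain PSNR in dB; returns inf for identical inputs."""
    diff = np.asarray(ref, dtype=np.float64) - np.asarray(test, dtype=np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))


def pq_psnr(ref_nits, test_nits) -> float:
    """PSNR computed on PQ-encoded values instead of linear luminance."""
    return psnr(pq_encode(ref_nits), pq_encode(test_nits), peak=1.0)


# Usage: the same absolute error of 1 cd/m^2 in a dark and a bright region.
# Linear-domain PSNR cannot tell these apart (MSE = 1 in both cases), but the
# PQ-encoded version penalizes the dark-region error much more heavily.
dark = pq_psnr(np.array([1.0]), np.array([2.0]))
bright = pq_psnr(np.array([1000.0]), np.array([1001.0]))
print(dark < bright)  # True
```

Other perceptually uniform encodings (for example PU-style curves designed for quality metrics) follow the same pattern: encode first, then apply the SDR metric.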