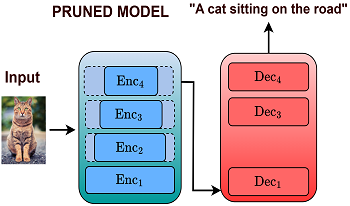

Deep learning has substantially improved image captioning performance over the past decade, but at the cost of greater model complexity and higher computational cost. Contemporary captioning models typically consist of three components: a pre-trained CNN encoder, a transformer encoder, and a decoder. Although network pruning for captioning models has been studied extensively, prior work has not addressed the pruning of these three components individually. As a result, existing methods do not generalize well to models that deviate from the conventional captioning architecture. In this study, we introduce a pruning technique that optimizes each component of the captioning model individually, which broadens its applicability to models that share similar components, such as encoder and decoder networks, even when their overall architectures differ from conventional captioning models. In addition, we introduce a novel modification to decoder pruning, applied through the cross-entropy loss, which significantly improved the performance of the image captioning model. We trained and validated our approach on the Flickr8k dataset and evaluated it using the CIDEr and ROUGE-L metrics.
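
To make the idea of pruning each component individually concrete, the following is a minimal sketch rather than the method of this study. It assumes simple magnitude-based pruning, a toy model represented as flat weight lists, and hypothetical per-component sparsity levels; the names `magnitude_prune` and `prune_by_component` are illustrative only, and the cross-entropy modification is not shown.

```python
from typing import Dict, List

Weights = List[float]


def magnitude_prune(weights: Weights, sparsity: float) -> Weights:
    """Zero the `sparsity` fraction of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by ascending magnitude; the first n_prune are zeroed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def prune_by_component(
    model: Dict[str, Weights], sparsity: Dict[str, float]
) -> Dict[str, Weights]:
    """Prune each named component with its own sparsity level.

    Components without an entry in `sparsity` are left unpruned.
    """
    return {
        name: magnitude_prune(weights, sparsity.get(name, 0.0))
        for name, weights in model.items()
    }


# Hypothetical toy weights for the three components named in the text.
model = {
    "cnn_encoder": [0.9, -0.05, 0.4, 0.01],
    "transformer_encoder": [0.3, -0.7, 0.02, 0.1],
    "decoder": [-0.2, 0.6, 0.03, -0.8],
}
# Assumed sparsity levels, chosen only for illustration.
sparsity = {"cnn_encoder": 0.5, "transformer_encoder": 0.25, "decoder": 0.25}

pruned = prune_by_component(model, sparsity)
```

Because each component receives its own sparsity level, the same routine could in principle be applied to any model that exposes comparable encoder and decoder blocks, whatever its overall architecture.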