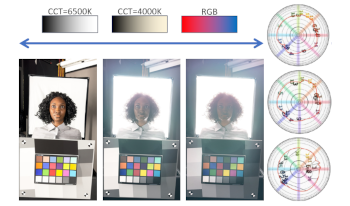

This article provides elements to answer the question: how can the general stylistic color rendering choices made by imaging devices capable of recording HDR formats be judged objectively? The goal of our work is to build a framework for analyzing color rendering behavior in targeted regions of any scene, supporting both HDR and SDR content. To this end, we discuss the modeling of camera behavior and visualization methods based on the ICtCp/ITP color spaces, alongside examples of laboratory and real scenes that showcase common issues and ambiguities in HDR rendering.
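The abstract names ICtCp/ITP as the basis for visualization but gives no formulas. As a minimal sketch, assuming linear BT.2020 RGB input in absolute cd/m^2, the PQ variant of ICtCp from ITU-R BT.2100, and ITP with ΔE_ITP as defined in ITU-R BT.2124, the conversion could look like the following. The function names and the polar hue/chroma helper are illustrative assumptions, not the authors' tooling.

```python
import math

# SMPTE ST 2084 (PQ) constants, as used by ITU-R BT.2100.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_inverse_eotf(luminance_nits: float) -> float:
    """Absolute luminance in cd/m^2 -> PQ-encoded signal in [0, 1]."""
    y = max(luminance_nits, 0.0) / 10000.0
    ym1 = y ** M1
    return ((C1 + C2 * ym1) / (1 + C3 * ym1)) ** M2


def rgb2020_to_ictcp(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Linear BT.2020 RGB (cd/m^2) -> (I, Ct, Cp), PQ variant of BT.2100."""
    # RGB -> LMS cone-like space.
    l = (1688 * r + 2146 * g + 262 * b) / 4096
    m = (683 * r + 2951 * g + 462 * b) / 4096
    s = (99 * r + 309 * g + 3688 * b) / 4096
    # Apply the PQ non-linearity to each LMS channel.
    lp, mp, sp = (pq_inverse_eotf(v) for v in (l, m, s))
    i = 0.5 * lp + 0.5 * mp
    ct = (6610 * lp - 13613 * mp + 7003 * sp) / 4096
    cp = (17933 * lp - 17390 * mp - 543 * sp) / 4096
    return i, ct, cp


def ictcp_to_itp(i: float, ct: float, cp: float) -> tuple[float, float, float]:
    """ITP (BT.2124): T is Ct scaled by 0.5, P equals Cp."""
    return i, 0.5 * ct, cp


def delta_e_itp(itp1, itp2) -> float:
    """Colour difference Delta E_ITP = 720 * Euclidean distance in ITP."""
    return 720 * math.sqrt(sum((a - b) ** 2 for a, b in zip(itp1, itp2)))


def itp_hue_chroma(itp) -> tuple[float, float]:
    """Hypothetical polar view of the T-P plane (hue in degrees, chroma)."""
    _, t, p = itp
    return math.degrees(math.atan2(p, t)) % 360, math.hypot(t, p)
```

For a targeted scene region, one option is to average the region's linear RGB before conversion and then plot T against P (or hue against chroma) for each device, so that rendering differences show up as displacements in a single plane.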

Farmers do not typically have ready access to sophisticated color measurement equipment. The driving force behind this project was the idea that farmers could use their smartphones to determine when, and if, crops are ready for harvest. If farmers could image their crops (in this case, tomatoes) with a smartphone to assess ripeness and readiness for harvest, their farming practices could be simplified. Five smartphones were used to image tomatoes at different stages of ripeness. A relationship was found between the hue angles computed from the smartphone images and those measured with a spectroradiometer. Additionally, a tomato color checker was created from the spectroradiometer measurements. It is intended to be made of a material that is easy to transport into the field, and to be used for camera calibration in future imaging. Different cloth materials were tested, and a canvas material with a black felt backing was eventually chosen; other possibilities are being investigated. The results from the smartphones and the charts will be used in further research on applying color science to agriculture. Other possible future applications include monitoring progress relative to irrigation and fertilization programs and detecting pests and disease.
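The abstract does not state which color space the hue angles were computed in. As an illustration only, the sketch below assumes sRGB pixel values normalized to [0, 1], the D65 white point, and the CIELAB hue angle h_ab = atan2(b*, a*); the function names are hypothetical.

```python
import math

# sRGB (IEC 61966-2-1) linear RGB -> CIE XYZ matrix, and the D65 white point.
SRGB_TO_XYZ = (
    (0.4124564, 0.3575761, 0.1804375),
    (0.2126729, 0.7151522, 0.0721750),
    (0.0193339, 0.1191920, 0.9503041),
)
WHITE_D65 = (0.95047, 1.0, 1.08883)


def srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer function for one channel in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t: float) -> float:
    """CIELAB companding function (cube root with a linear toe)."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def srgb_to_lab(r: float, g: float, b: float) -> tuple[float, float, float]:
    """sRGB in [0, 1] -> CIELAB (L*, a*, b*) relative to D65."""
    lin = [srgb_to_linear(c) for c in (r, g, b)]
    xyz = [sum(m * c for m, c in zip(row, lin)) for row in SRGB_TO_XYZ]
    fx, fy, fz = (_f(v / n) for v, n in zip(xyz, WHITE_D65))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def hue_angle(r: float, g: float, b: float) -> float:
    """CIELAB hue angle h_ab in degrees, in [0, 360)."""
    _, a, b_star = srgb_to_lab(r, g, b)
    return math.degrees(math.atan2(b_star, a)) % 360
```

Averaging a* and b* over the fruit region before taking the angle avoids the wrap-around problem of averaging hue angles directly. Smartphone images are also tone-mapped and white-balanced by the device, so hue values from this pipeline are device-dependent, which is one motivation for the calibration chart described above.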

As depth imaging is integrated into more and more consumer devices, manufacturers have to tackle new challenges. Applications such as computational bokeh and augmented reality require dense and precisely segmented depth maps to achieve good results. Modern devices use a multitude of different technologies to estimate depth maps, such as time-of-flight sensors, stereoscopic cameras, structured light sensors, phase-detect pixels or a combination thereof. Therefore, there is a need to evaluate the quality of the depth maps, regardless of the technology used to produce them. The aim of our work is to propose an end-result evaluation method based on a single scene, using a specifically designed chart. We consider the depth maps embedded in the photographs, which are not visible to the user but are used by specialized software, in association with the RGB pictures. Some of the aspects considered are spatial alignment between RGB and depth, depth consistency, and robustness to texture variations. This work also provides a comparison of perceptual and automatic evaluations.
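The abstract lists spatial alignment between RGB and depth and depth consistency among the evaluated aspects but does not give the metrics. Purely as an illustration, and not as the authors' chart-based protocol, the sketch below assumes a 1D profile sampled across the same chart edge in both the RGB luminance and the depth map, and a rectangular depth patch covering a flat chart region; all function names are hypothetical.

```python
import math


def strongest_edge(profile: list[float]) -> float:
    """Position of the largest absolute step in a 1D profile.

    A return value of i + 0.5 means the step lies between samples i and i + 1.
    """
    steps = [abs(b - a) for a, b in zip(profile, profile[1:])]
    i = max(range(len(steps)), key=steps.__getitem__)
    return i + 0.5


def edge_misalignment(luma_profile: list[float], depth_profile: list[float]) -> float:
    """Signed offset, in pixels, of the depth discontinuity relative to the RGB edge."""
    return strongest_edge(depth_profile) - strongest_edge(luma_profile)


def planar_depth_noise(patch: list[list[float]]) -> float:
    """RMS residual after removing the least-squares plane z = a*x + b*y + c.

    The patch is assumed to image a flat region of the chart, so whatever
    remains after the plane fit is treated as depth inconsistency.
    """
    rows, cols = len(patch), len(patch[0])
    # Centred coordinates make sum(x), sum(y) and sum(x*y) vanish on a full
    # grid, so the normal equations decouple into three scalar divisions.
    xs = [x - (cols - 1) / 2 for x in range(cols)]
    ys = [y - (rows - 1) / 2 for y in range(rows)]
    n = rows * cols
    c = sum(sum(row) for row in patch) / n
    sxx = rows * sum(x * x for x in xs)
    syy = cols * sum(y * y for y in ys)
    sxz = sum(xs[j] * patch[i][j] for i in range(rows) for j in range(cols))
    syz = sum(ys[i] * patch[i][j] for i in range(rows) for j in range(cols))
    a = sxz / sxx if sxx else 0.0
    b = syz / syy if syy else 0.0
    sq = sum(
        (patch[i][j] - (a * xs[j] + b * ys[i] + c)) ** 2
        for i in range(rows)
        for j in range(cols)
    )
    return math.sqrt(sq / n)
```

Fitting a plane rather than taking a plain standard deviation keeps a slightly tilted chart from being scored as inconsistent depth; a real evaluation would also need sub-pixel edge localization and handling of missing depth values.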

The ease of counterfeiting both the origin and the content of a video necessitates a reliable method for identifying the source of a media file, which is a crucial part of forensic investigation. One of the most widely accepted solutions for identifying the source of a digital image involves comparing its photo-response non-uniformity (PRNU) fingerprint. For videos, however, prevalent methods are not as efficient as image source identification techniques, because the fingerprint is affected by the post-processing steps used to generate the video. In this paper, we address the question of whether images can be used to generate the reference fingerprint pattern for identifying the source of a video, and answer it affirmatively. We introduce an approach called “Hybrid G-PRNU” that provides a scale-invariant solution for video source identification by matching the video's fingerprint with the one extracted from images. Another goal of our work is to find the parameters that yield the best identification rate. Experiments over several test cases demonstrate a higher identification rate when the video PRNU is compared asymmetrically with the reference pattern generated from images. Furthermore, the fingerprint extractor used in this paper is being made freely available to scholars and researchers in the field.
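The abstract does not describe the internals of Hybrid G-PRNU, so the sketch below shows only the conventional PRNU baseline that image-to-video matching builds on: estimate a reference fingerprint K from still images as K = Σ W_k I_k / Σ I_k², where W_k = I_k - F(I_k) is the noise residual, then score a frame by the normalized correlation between its residual and I·K. Assumptions: grayscale images as nested lists with values in [0, 1], a 3x3 mean filter standing in for the wavelet denoiser F usually used, no resizing or cropping (so this baseline is not scale-invariant), and synthetic data; all names are hypothetical.

```python
import math
import random

Image = list[list[float]]


def mean_filter3(img: Image) -> Image:
    """3x3 box filter averaging only in-bounds neighbours (crude denoiser F)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - 1), min(h, y + 2))
                    for xx in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) / len(vals)
    return out


def noise_residual(img: Image) -> Image:
    """W = I - F(I); the PRNU survives in this high-frequency residual."""
    den = mean_filter3(img)
    return [[p - d for p, d in zip(row, drow)] for row, drow in zip(img, den)]


def estimate_fingerprint(images: list[Image]) -> Image:
    """Per-pixel estimate K = sum_k(W_k * I_k) / sum_k(I_k ** 2)."""
    h, w = len(images[0]), len(images[0][0])
    num = [[0.0] * w for _ in range(h)]
    den = [[0.0] * w for _ in range(h)]
    for img in images:
        res = noise_residual(img)
        for y in range(h):
            for x in range(w):
                num[y][x] += res[y][x] * img[y][x]
                den[y][x] += img[y][x] ** 2
    return [[n / d if d else 0.0 for n, d in zip(nr, dr)] for nr, dr in zip(num, den)]


def normalized_correlation(a: Image, b: Image) -> float:
    """Pearson correlation of two equally sized images, flattened."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    cov = sum((x - ma) * (y - mb) for x, y in zip(fa, fb))
    na = math.sqrt(sum((x - ma) ** 2 for x in fa))
    nb = math.sqrt(sum((y - mb) ** 2 for y in fb))
    return cov / (na * nb) if na and nb else 0.0


def match_score(frame: Image, fingerprint: Image) -> float:
    """Correlation between the frame residual and the expected PRNU term I * K."""
    expected = [[p * k for p, k in zip(row, krow)] for row, krow in zip(frame, fingerprint)]
    return normalized_correlation(noise_residual(frame), expected)


def synthetic_frame(rng: random.Random, k: Image) -> Image:
    """Synthetic test data only: smooth scene * (1 + K) plus additive noise."""
    size = len(k)
    return [[(0.4 + 0.2 * x / size) * (1 + k[y][x]) + rng.gauss(0, 0.005)
             for x in range(size)] for y in range(size)]
```

With synthetic cameras that each have an independent K, a frame from the camera used to build the reference should score much higher than a frame from another camera. Real video frames add compression, stabilization and rescaling, which is exactly what this baseline ignores and what a scale-invariant method has to address.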