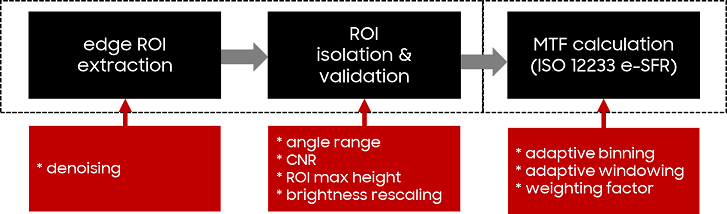

Evaluating the spatial frequency response (SFR) in natural scenes is crucial for understanding camera system performance and its implications for image quality in various applications, including machine learning and automated recognition. Natural Scene derived Spatial Frequency Response (NS-SFR) represented a significant advancement by allowing camera performance to be assessed directly, without relying on test charts, to which such measurements have traditionally been limited. However, existing NS-SFR methods still suffer from restricted angular coverage and susceptibility to noise, both of which undermine measurement accuracy. In this paper, we propose a novel methodology that enhances NS-SFR by employing an adaptive oversampling rate (OSR) and phase shift (PS) to broaden angular coverage, and by applying a newly developed adaptive window technique that reduces the impact of noise, leading to more reliable results. Simulations compared against theoretical modulation transfer function (MTF) values, together with measurements in natural scenes, demonstrate that the proposed approach addresses the challenges of existing methods and offers a more accurate representation of camera performance in natural scenes.
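For orientation, the conventional edge-based SFR pipeline that NS-SFR builds on can be sketched as follows, in the style of the ISO 12233 slanted-edge method: pixels are projected onto the edge normal, binned at a fixed oversampling rate of 4 to form an edge spread function (ESF), differentiated into a line spread function (LSF), tapered with a Hamming-shaped window, and Fourier transformed. This is a minimal sketch under stated assumptions, not the adaptive OSR/PS or adaptive window proposed above: the edge position `x0` and angle are assumed known rather than estimated, and the function names `slanted_edge_sfr` and `synthetic_edge` are hypothetical.

```python
import math
import numpy as np


def slanted_edge_sfr(roi, x0, angle_deg, osr=4):
    """Conventional slanted-edge SFR with a FIXED oversampling rate (not adaptive).

    roi       : 2-D array with a dark-to-bright edge running roughly vertically.
    x0        : column at which the edge crosses row 0 (assumed known here).
    angle_deg : tilt of the edge from vertical in degrees (assumed known here).
    osr       : oversampling rate, i.e. ESF bins per pixel.
    Returns (frequency in cycles/pixel, SFR normalised to 1 at zero frequency).
    """
    rows, cols = roi.shape
    theta = math.radians(angle_deg)
    yy, xx = np.mgrid[0:rows, 0:cols]
    # Signed distance of every pixel centre from the edge, along the edge normal.
    dist = (xx - (x0 + yy * math.tan(theta))) * math.cos(theta)
    # Bin the projected pixels on a 1/osr-pixel grid to form the oversampled ESF.
    bins = np.round(dist * osr).astype(int).ravel()
    bins -= bins.min()
    counts = np.bincount(bins)
    sums = np.bincount(bins, weights=roi.astype(float).ravel())
    filled = np.flatnonzero(counts)
    # Empty bins are filled by linear interpolation between populated ones.
    esf = np.interp(np.arange(counts.size), filled, sums[filled] / counts[filled])
    # ESF -> LSF by finite differences.
    lsf = np.diff(esf)
    # Hamming-shaped taper centred on the LSF peak to suppress noise in the tails.
    n = np.arange(lsf.size)
    c = int(np.argmax(np.abs(lsf)))
    half = max(c, lsf.size - 1 - c)
    lsf = lsf * (0.54 + 0.46 * np.cos(np.pi * (n - c) / half))
    # |DFT| of the windowed LSF gives the SFR; sample spacing is 1/osr pixel.
    spectrum = np.abs(np.fft.rfft(lsf))
    freq = np.fft.rfftfreq(lsf.size, d=1.0 / osr)
    return freq, spectrum / spectrum[0]


def synthetic_edge(rows=64, cols=64, angle_deg=5.0, sigma=0.8):
    """Gaussian-blurred step edge centred in the ROI; returns (image, x0)."""
    theta = math.radians(angle_deg)
    x0 = (cols - 1) / 2 - (rows - 1) * math.tan(theta) / 2
    yy, xx = np.mgrid[0:rows, 0:cols]
    d = (xx - (x0 + yy * math.tan(theta))) * math.cos(theta)
    erf = np.vectorize(math.erf)
    return 0.2 + 0.3 * (1 + erf(d / (sigma * math.sqrt(2)))), x0


roi, x0 = synthetic_edge(sigma=0.6)
freq, sfr = slanted_edge_sfr(roi, x0, angle_deg=5.0)
print(f"SFR at 0.25 cycles/pixel: {np.interp(0.25, freq, sfr):.2f}")
```

With a fixed bin width, some edge angles populate the oversampled grid unevenly and the LSF tails carry most of the noise; these are the two weaknesses that the adaptive OSR/PS and adaptive window described above are aimed at.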

This paper investigates camera phone image quality, specifically the effect of sensor megapixel (MP) resolution on the perceived quality of images displayed at full size on high-quality desktop displays. For this purpose, we use images from simulated cameras with different sensor MP resolutions. We employ methods recommended in the IEEE 1858 Camera Phone Image Quality (CPIQ) standard, as well as other established psychophysical paradigms, to obtain subjective image quality ratings from large numbers of observers for systems with varying MP resolution. These ratings are subsequently used to validate image quality metrics (IQMs) relating to sharpness and resolution, including those from the CPIQ standard. Further, we define acceptable levels of quality for mobile phone images, as MP resolution changes, in Subjective Quality Scale (SQS) units. Finally, we map SQS levels to categories obtained from star-rating experiments (commonly used to rate consumer experience). Our findings establish a relationship between the MP resolution of the camera sensor and the LCD display device. The chosen metrics predict quality accurately, but only the metrics proposed by CPIQ return results in calibrated just noticeable differences (JNDs) of quality. We close by discussing the appropriateness of star-rating experiments for measuring subjective image quality and validating metrics.
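As an illustration of what "calibrated JNDs" means for paired-comparison data, one common conversion is Thurstone Case V with the convention that 1 JND corresponds to a 75:25 preference split, i.e. a separation of about 0.6745 standard deviations. The abstract does not state that this exact procedure was used; the sketch below assumes Case V, and the function name `case_v_jnd_scale` and the clipping value (needed so unanimous pairs give a finite z-score) are hypothetical choices.

```python
from statistics import NormalDist

import numpy as np

# Under Thurstone Case V, a 75:25 preference split corresponds to a scale
# separation of inv_cdf(0.75) ~ 0.6745; that distance is taken as 1 JND.
Z_PER_JND = NormalDist().inv_cdf(0.75)


def case_v_jnd_scale(wins, clip=0.02):
    """Thurstone Case V scale values, in JND units, from paired-comparison counts.

    wins[i, j] is the number of times stimulus i was preferred over stimulus j.
    Proportions are clipped to [clip, 1 - clip] (an arbitrary choice) so that
    unanimous pairs still map to a finite z-score.
    Returns one scale value per stimulus, shifted to have zero mean.
    """
    wins = np.asarray(wins, dtype=float)
    trials = wins + wins.T
    p = np.full(wins.shape, 0.5)          # unobserved pairs and diagonal -> 0.5
    seen = trials > 0
    p[seen] = wins[seen] / trials[seen]
    p = np.clip(p, clip, 1.0 - clip)
    z = np.vectorize(NormalDist().inv_cdf)(p)
    scale = z.mean(axis=1)                # Case V: row means of the z-matrix
    return (scale - scale.mean()) / Z_PER_JND


# Three hypothetical camera configurations compared pairwise by observers.
wins = [[0, 75, 90],
        [25, 0, 70],
        [10, 30, 0]]
print(case_v_jnd_scale(wins))
```

In this convention, two stimuli preferred 75:25 end up exactly 1 JND apart, which is what allows differences in scale values to be read directly as JNDs of quality.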

Training autonomous vehicles requires large numbers of driving sequences covering all situations [1]. Typically, a simulation environment (software-in-the-loop, SiL) accompanies real-world test drives so that environmental parameters can be varied systematically. A missing piece in the optical model of these SiL simulations is sharpness, which linear system theory describes by the point-spread function (PSF) of the optical system. We present a novel numerical model for the PSF of an optical system that can efficiently represent both experimental measurements and lens design simulations of the PSF. The numerical basis of this model is a non-linear regression of the PSF with an artificial neural network (ANN). The novelty lies in the portability and parameterization of this model, which allow it to be applied in virtually any optical simulation scenario, e.g. inserting a measured lens into a computer game to train autonomous vehicles. We present a lens measurement series that yields a numerical function for the PSF depending only on the parameters defocus, field, and azimuth. By convolving existing images and videos with this PSF model, we apply the measured lens as a transfer function, generating images as if they had been seen through the measured lens itself. The method applies to any optical scenario, but we focus on autonomous driving, where the quality of the detection algorithms depends directly on the optical quality of the camera system used. With this parameterized optical model, we present a method to validate the functional and safety limits of camera-based advanced driver-assistance systems (ADAS) using the real, measured lens that is actually used in the product.
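Applying a field- and azimuth-dependent PSF to an existing image can be sketched as a piecewise shift-invariant convolution: the frame is split into tiles, a PSF is evaluated at each tile's field position and azimuth, and each tile is convolved with its own kernel. This is a minimal sketch under loud assumptions: `psf_model` is a hypothetical elliptical-Gaussian placeholder for the trained ANN (whose architecture and outputs are not given here), and the tile size, kernel size, single defocus value per frame, and field normalization (0 at the image center, 1 at the corner) are illustrative choices.

```python
import numpy as np


def psf_model(defocus, field, azimuth, size=15):
    """HYPOTHETICAL stand-in for the trained ANN PSF model.

    Returns a size x size elliptical Gaussian kernel whose widths grow with
    |defocus| and field, with its long axis along the azimuth direction.
    The coefficients are arbitrary and for illustration only.
    """
    r = size // 2
    y, x = np.mgrid[-r:r + 1, -r:r + 1].astype(float)
    sigma_long = 0.6 + 0.8 * abs(defocus) + 0.5 * field
    sigma_short = 0.6 + 0.8 * abs(defocus) + 0.2 * field
    c, s = np.cos(azimuth), np.sin(azimuth)
    u = c * x + s * y    # coordinate along the azimuth direction
    v = -s * x + c * y   # coordinate perpendicular to it
    k = np.exp(-0.5 * ((u / sigma_long) ** 2 + (v / sigma_short) ** 2))
    return k / k.sum()   # unit sum, so flat regions keep their brightness


def convolve_same(img, kernel):
    """2-D linear convolution via FFT, cropped to the input size (odd kernel)."""
    kh, kw = kernel.shape
    h, w = img.shape
    shape = (h + kh - 1, w + kw - 1)
    full = np.fft.irfft2(np.fft.rfft2(img, shape) * np.fft.rfft2(kernel, shape), shape)
    return full[kh // 2:kh // 2 + h, kw // 2:kw // 2 + w]


def apply_lens(img, defocus=0.0, tile=32, size=15):
    """Blur a grayscale image tile by tile with a field-dependent PSF."""
    h, w = img.shape
    cy, cx = (h - 1) / 2, (w - 1) / 2
    r_max = np.hypot(cy, cx)
    pad = size // 2
    padded = np.pad(img.astype(float), pad, mode="reflect")
    out = np.empty((h, w), dtype=float)
    for y0 in range(0, h, tile):
        for x0 in range(0, w, tile):
            y1, x1 = min(y0 + tile, h), min(x0 + tile, w)
            # Field (0 = centre, 1 = corner) and azimuth at the tile centre.
            ty = (y0 + y1 - 1) / 2 - cy
            tx = (x0 + x1 - 1) / 2 - cx
            kernel = psf_model(defocus, np.hypot(ty, tx) / r_max, np.arctan2(ty, tx), size)
            # Convolve the tile plus a margin of `pad` pixels, keep only the tile.
            region = padded[y0:y1 + 2 * pad, x0:x1 + 2 * pad]
            blurred = convolve_same(region, kernel)
            out[y0:y1, x0:x1] = blurred[pad:pad + (y1 - y0), pad:pad + (x1 - x0)]
    return out


rng = np.random.default_rng(0)
frame = rng.random((64, 64))
print(frame.std(), apply_lens(frame, defocus=1.0).std())
```

Tiling trades accuracy for speed: the PSF is held constant within each tile, so smaller tiles follow the field dependence more closely at the cost of more kernel evaluations. Video would be processed frame by frame in the same way.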