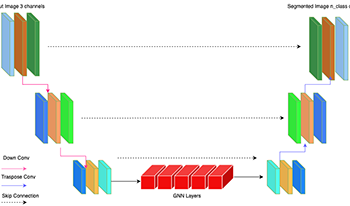

This study explores the potential of graph neural networks (GNNs) to enhance semantic segmentation across diverse image modalities. We evaluate the effectiveness of a novel GNN-based U-Net architecture on three distinct datasets: PascalVOC, a standard benchmark for natural image segmentation; WoodScape, a challenging dataset of fisheye images commonly used in autonomous driving, whose fisheye optics introduce significant geometric distortions; and ISIC2016, a dataset of dermoscopic images for skin lesion segmentation. We compare our proposed UNet-GNN model against established convolutional neural network (CNN)-based segmentation models, including U-Net and U-Net++, as well as the transformer-based SwinUNet. Unlike these methods, which rely primarily on local convolutional operations or global self-attention, GNNs explicitly model relationships between image regions by constructing and operating on a graph representation of the image features. This approach allows the model to capture long-range dependencies and complex spatial relationships, which we hypothesize will be particularly beneficial for handling the geometric distortions in fisheye imagery and for capturing intricate boundaries in medical images. Our analysis demonstrates the versatility of GNNs across these segmentation challenges and highlights their potential to improve segmentation accuracy in applications such as autonomous driving and medical image analysis. Code is available on GitHub.
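
The abstract does not say how the graph over image features is built. One common choice is to treat each patch of a feature map as a node, connect it to its k nearest neighbours in feature space, and update each node by aggregating its neighbours. The sketch below shows that idea with a mean aggregator; `knn_graph`, `mean_aggregate`, and the choice of Euclidean distance are illustrative assumptions, not the UNet-GNN implementation, and a real layer would follow the aggregation with a learnable projection and nonlinearity.

```python
import math

def knn_graph(features, k):
    """For each node, return the indices of its k nearest neighbours
    (Euclidean distance, excluding the node itself). Assumes k >= 1."""
    neighbours = []
    for i, fi in enumerate(features):
        # (distance, index) pairs so ties are broken deterministically by index
        dists = sorted((math.dist(fi, fj), j) for j, fj in enumerate(features) if j != i)
        neighbours.append([j for _, j in dists[:k]])
    return neighbours

def mean_aggregate(features, neighbours):
    """One message-passing step: concatenate each node's feature vector
    with the element-wise mean of its neighbours' feature vectors."""
    out = []
    for i, fi in enumerate(features):
        nbrs = [features[j] for j in neighbours[i]]
        mean = [sum(vals) / len(nbrs) for vals in zip(*nbrs)]
        out.append(list(fi) + mean)
    return out

# Four patch features: two near each other, two far away.
patches = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]]
nbrs = knn_graph(patches, k=1)        # [[1], [0], [3], [2]]
updated = mean_aggregate(patches, nbrs)
print(updated[0])                     # [0.0, 0.0, 0.0, 1.0]
```

Because neighbours are chosen in feature space rather than by pixel adjacency, two distant regions with similar features can exchange information in a single step, which is the kind of long-range dependency the abstract refers to.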

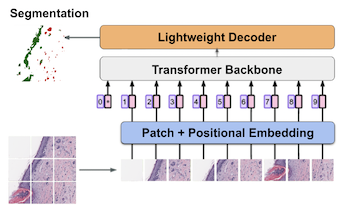

Prognosis for melanoma patients is traditionally determined by a tumor depth measurement called Breslow thickness. However, Breslow thickness does not account for cross-sectional area, which is more useful for prognosis. We propose using segmentation methods to estimate the cross-sectional area of invasive melanoma in whole-slide images. First, we design a custom segmentation model from a transformer pretrained on breast cancer images and adapt it for melanoma segmentation. Second, we fine-tune a segmentation backbone pretrained on natural images. Our proposed models produce quantitatively superior results compared to previous approaches, and qualitatively better results as verified by a dermatologist.
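
Once a model has produced a binary mask, converting it into a cross-sectional area only requires the physical size of a pixel. The sketch below assumes the pixel spacing (`microns_per_pixel`, a hypothetical parameter) is known for the resolution level at which the mask was predicted, for example from the slide's metadata; the abstract does not describe how the authors compute area, so this is pixel counting under that assumption.

```python
def cross_sectional_area_mm2(mask, microns_per_pixel):
    """Estimate lesion area in mm^2 from a binary segmentation mask.

    mask: 2D list of 0/1 values, where 1 marks invasive melanoma.
    microns_per_pixel: side length of one (square) pixel in micrometres at the
        resolution level the mask was predicted at (assumed known).
    """
    pixel_count = sum(1 for row in mask for v in row if v)
    pixel_area_um2 = microns_per_pixel ** 2
    return pixel_count * pixel_area_um2 / 1_000_000  # 1 mm^2 = 10^6 um^2

# Four lesion pixels, each 250 um on a side (a heavily downsampled level).
mask = [[1, 1, 0],
        [1, 1, 0]]
print(cross_sectional_area_mm2(mask, 250))  # 0.25
```

If the mask is predicted on a downsampled level of the whole-slide image, the pixel spacing must be scaled by the same downsampling factor, otherwise the area is off by the square of that factor.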

Deep neural networks for semantic segmentation have recently outperformed other methods on natural images, partly due to the abundance of training data in this domain. However, applying these networks to images from a different domain often leads to a significant drop in accuracy. Fine art paintings in highly stylized movements, such as Cubism or Expressionism, are particularly challenging because the shape and texture of objects deviate strongly from their appearance in natural images. In this paper, we demonstrate that style transfer can be used as a form of data augmentation when training CNN-based semantic segmentation models, improving their accuracy on artworks by a specific artist. To this end, we select paintings in a specific style by Egon Schiele, Vincent Van Gogh, Pablo Picasso, and Willem de Kooning, create a stylized training dataset by transferring each artist's style to natural photographs, and show that training the same segmentation network on these surrogate artworks improves accuracy on fine art paintings. We also publicly release a dataset of 60 fine art paintings with pixel-level annotations, which we use to evaluate our method.
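
The key reason style transfer works as augmentation for segmentation is that it changes colour and texture while leaving the scene layout in place, so the original pixel-level labels can be reused. The sketch below builds such a surrogate dataset; `build_surrogate_dataset` is a hypothetical helper, and `stylize` stands in for whatever style transfer model is used, which the abstract does not specify.

```python
import random

def build_surrogate_dataset(samples, stylize, p=1.0, seed=0):
    """Create (image, mask) training pairs in which photos are replaced by stylized versions.

    samples: iterable of (image, mask) pairs from a natural-image segmentation dataset.
    stylize: hypothetical callable image -> image that transfers one artist's style.
    p:       probability of stylizing each sample; p=1.0 stylizes every photo.
    The mask is reused unchanged, assuming the stylization preserves spatial layout.
    """
    rng = random.Random(seed)
    out = []
    for image, mask in samples:
        if rng.random() < p:
            out.append((stylize(image), mask))
        else:
            out.append((image, mask))
    return out

# Toy usage with strings standing in for images and masks.
data = [("photo1", "mask1"), ("photo2", "mask2")]
surrogate = build_surrogate_dataset(data, lambda img: "styled:" + img)
print(surrogate)  # [('styled:photo1', 'mask1'), ('styled:photo2', 'mask2')]
```

Setting `p` below 1.0 mixes stylized and original photos, which is one way to keep some natural-image supervision; whether the authors did this is not stated.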

Sun glare is a commonly encountered problem in both manual and automated driving. It causes over-exposure in the image and significantly impacts visual perception algorithms. For higher levels of automated driving, the system must be able to recognize sun glare, since it can degrade system performance. There is very limited literature on detecting sun glare for automated driving, and existing work is primarily based on finding saturated brightness areas and extracting regions via image processing heuristics. A safety system, however, requires a highly robust algorithm. We therefore designed two complementary algorithms: one using classical image processing techniques and one using a CNN, which can learn global context. We also discuss how a sun glare detection algorithm fits efficiently into a typical automated driving system. As there is no public dataset, we created our own and will release it publicly via the WoodScape project [1] to encourage further research in this area.
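
The heuristic baseline described above, finding saturated brightness areas and extracting them as regions, can be sketched as a threshold followed by connected-component labelling. The `threshold` and `min_area` values are hypothetical, and this illustrates the prior heuristic approach rather than either of the authors' two algorithms.

```python
from collections import deque

def detect_saturated_regions(gray, threshold=250, min_area=4):
    """Return connected regions of near-saturated pixels in a grayscale image.

    gray: 2D list of intensities in [0, 255].
    Pixels >= threshold are grouped with 4-connectivity; regions smaller than
    min_area pixels are discarded as noise. Each region is a list of (row, col).
    """
    h, w = len(gray), len(gray[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for r in range(h):
        for c in range(w):
            if seen[r][c] or gray[r][c] < threshold:
                continue
            # Breadth-first flood fill from this unvisited saturated pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            region = []
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and gray[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) >= min_area:
                regions.append(region)
    return regions

image = [[255, 255, 10, 10],
         [255, 255, 10, 10],
         [10, 10, 10, 10],
         [10, 10, 10, 255]]
print(len(detect_saturated_regions(image)))  # 1: the 2x2 blob; the single pixel is dropped
```

A threshold like this also fires on white vehicles, road markings, or overcast sky, which is one reason the abstract argues that a purely heuristic approach is not robust enough for a safety system.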

Object sizes in images vary widely, so capturing context information at multiple scales is essential for semantic segmentation. Existing context aggregation methods such as the pyramid pooling module (PPM) and atrous spatial pyramid pooling (ASPP) employ different pooling sizes or atrous rates to capture multi-scale information. However, these pooling sizes and atrous rates are chosen empirically. Revisiting ASPP leads to our observation that learnable sampling locations in the convolution operation can give the network a learnable field of view, and thus the ability to capture object context information adaptively. Following this observation, we propose an adaptive context encoding (ACE) module based on the deformable convolution operation, in which the sampling locations of the convolution are learnable. Our ACE module can easily be embedded into other convolutional neural networks (CNNs) for context aggregation. We demonstrate the effectiveness of the proposed module on the Pascal-Context and ADE20K datasets. Although ACE consists of only three deformable convolution blocks, it outperforms PPM and ASPP in terms of mean Intersection over Union (mIoU) on both datasets. All experimental studies confirm that our proposed module is effective compared to state-of-the-art methods.
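
The link between ASPP and deformable convolution can be made concrete: a 3x3 deformable convolution samples the input at the regular (optionally dilated) grid positions plus a per-tap offset, using bilinear interpolation for fractional positions. With all offsets at zero it is exactly an atrous convolution with a fixed field of view; learned offsets let that field of view adapt. The single-channel sketch below evaluates one output position; the function names are hypothetical, and the offset-prediction layer and the structure of the three ACE blocks are not shown.

```python
import math

def bilinear(feature, y, x):
    """Bilinearly interpolate a 2D feature map at fractional (y, x).
    Samples falling outside the map contribute zero."""
    h, w = len(feature), len(feature[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                value += weight * feature[yy][xx]
    return value

def deformable_conv_at(feature, weights, offsets, py, px, dilation=1):
    """Single-channel 3x3 deformable convolution evaluated at output position (py, px).

    weights: 3x3 kernel.
    offsets: 9 (dy, dx) pairs, one per kernel tap in row-major order; in a real
             deformable layer these are predicted from the input by another conv.
    With all offsets zero this is an ordinary dilated (atrous) 3x3 convolution.
    """
    out = 0.0
    k = 0
    for i in (-1, 0, 1):
        for j in (-1, 0, 1):
            dy, dx = offsets[k]
            sy = py + i * dilation + dy
            sx = px + j * dilation + dx
            out += weights[i + 1][j + 1] * bilinear(feature, sy, sx)
            k += 1
    return out

feature = [[r * 5 + c for c in range(5)] for r in range(5)]
ones = [[1, 1, 1]] * 3
print(deformable_conv_at(feature, ones, [(0, 0)] * 9, 2, 2))  # 108.0, plain 3x3 sum
print(deformable_conv_at(feature, ones, [(0, 1)] * 9, 2, 2))  # 117.0, window shifted one column
```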

Change detection in image pairs has traditionally been a binary process, reporting either “Change” or “No Change.” In this paper, we present LambdaNet, a novel deep architecture for pixel-level directional change detection based on a four-class classification scheme. LambdaNet incorporates the notion of “directional change” and classifies differences between two images as “Additive Change” when a new object appears, “Subtractive Change” when an object is removed, “Exchange” when different objects occupy the same location, and “No Change.” To obtain pixel-annotated change maps for training, we generated directional change class labels for the Change Detection 2014 dataset. Our tests indicate that LambdaNet would be well suited to situations where the type of change is unstructured, such as change detection in satellite imagery.
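
The four-class scheme can be expressed as a per-pixel rule over what occupies each location before and after. The sketch below assumes each image comes with a per-pixel object (or class) ID map in which `0` means background; the abstract does not describe how the labels for Change Detection 2014 were actually derived, so this only illustrates the class definitions.

```python
NO_CHANGE, ADDITIVE, SUBTRACTIVE, EXCHANGE = 0, 1, 2, 3

def directional_change_label(before, after, background=0):
    """Map the object IDs at one pixel in the two images to a directional change class."""
    if before == after:
        return NO_CHANGE      # same object (or background) in both images
    if before == background:
        return ADDITIVE       # a new object appears
    if after == background:
        return SUBTRACTIVE    # an object is removed
    return EXCHANGE           # a different object occupies the location

def directional_change_map(before_map, after_map, background=0):
    """Apply the per-pixel rule to two equally sized 2D ID maps."""
    return [[directional_change_label(b, a, background) for b, a in zip(row_b, row_a)]
            for row_b, row_a in zip(before_map, after_map)]

before = [[0, 1, 2, 0, 5]]
after = [[0, 0, 3, 4, 5]]
print(directional_change_map(before, after))  # [[0, 2, 3, 1, 0]]
```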

Semantic segmentation is a complex problem in computer vision and is essential for image analysis tasks. Currently, most state-of-the-art algorithms rely on deep convolutional neural networks (DCNNs) to perform this task. DCNNs downsample the input image into low-resolution feature maps, which are then upsampled to produce the segmented image. However, this loss of spatial resolution attenuates high-frequency detail, resulting in blurry and inaccurate object boundaries. To address this limitation, we propose combining a DCNN for semantic segmentation with semantic boundary information. We do this with a multi-task approach that incorporates a boundary detection network into the encoder-decoder architecture SegNet and adds an edge class to the SegNet architecture. The multi-task network thus receives more information, which improves segmentation accuracy, specifically boundary delineation. We tested this approach on the RGB-NIR Scene dataset and observed higher boundary segmentation accuracy than with SegNet alone. These results show that adding boundary detection information significantly improves the semantic segmentation results of a DCNN.
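
Adding an edge class requires ground-truth labels in which boundary pixels carry that extra class. A minimal way to derive them from an existing label map is to relabel every pixel that has a 4-connected neighbour with a different class. The helper name, the 4-connectivity, and the resulting boundary width are assumptions for illustration; the abstract does not specify how the edge labels were produced.

```python
def add_edge_class(labels, edge_class):
    """Return a copy of a 2D label map in which class-boundary pixels are relabelled edge_class.

    A pixel is a boundary pixel if any 4-connected neighbour has a different label,
    so boundaries end up two pixels wide (one on each side of the class change).
    """
    h, w = len(labels), len(labels[0])
    out = [row[:] for row in labels]
    for r in range(h):
        for c in range(w):
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < h and 0 <= nc < w and labels[nr][nc] != labels[r][c]:
                    out[r][c] = edge_class
                    break
    return out

labels = [[0, 0, 1, 1],
          [0, 0, 1, 1]]
print(add_edge_class(labels, edge_class=9))  # [[0, 9, 9, 1], [0, 9, 9, 1]]
```

Comparisons are made against the original `labels`, not the partially updated copy, so the result does not depend on the order in which pixels are visited.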