This paper proposes a novel aggregation method that uses the Kumaraswamy distribution to analyze partial metric values, particularly in the evaluation of video quality. Through a weighted-mean aggregation procedure, we uncover the effects underlying the data. The three experiments analyzed in this paper demonstrate the method's efficacy regardless of the temporal aggregation level, which ranges from days to minutes to individual frames. Grounded in the Kumaraswamy distribution, this approach offers a robust analytical tool for understanding how individual metric values combine to affect overall user perception and experience.
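The abstract does not spell out how the weights are derived from the distribution. The following is a minimal sketch, assuming each partial value (a day, minute, or frame) is weighted by the probability mass that a Kumaraswamy(a, b) distribution assigns to its slot on [0, 1], using the CDF F(x) = 1 - (1 - x^a)^b. The function names and the `by_rank` option are hypothetical and illustrate the idea only; they are not the authors' implementation.

```python
from typing import Sequence


def kumaraswamy_cdf(x: float, a: float, b: float) -> float:
    """CDF of the Kumaraswamy(a, b) distribution on [0, 1]: F(x) = 1 - (1 - x**a)**b."""
    return 1.0 - (1.0 - x ** a) ** b


def kumaraswamy_weights(n: int, a: float, b: float) -> list[float]:
    """Split [0, 1] into n equal slots and use each slot's probability mass as its weight.

    CDF differences avoid the infinite density at 0 or 1 when a < 1 or b < 1;
    the weights are non-negative and sum to 1.
    """
    if n <= 0:
        raise ValueError("need at least one value")
    return [kumaraswamy_cdf((i + 1) / n, a, b) - kumaraswamy_cdf(i / n, a, b) for i in range(n)]


def kumaraswamy_mean(values: Sequence[float], a: float, b: float, by_rank: bool = False) -> float:
    """Weighted mean of partial metric values with Kumaraswamy-derived weights (hypothetical helper).

    by_rank=False: weights follow the temporal order of the values.
    by_rank=True:  values are sorted ascending first, so weights act on ranks
                   (with a = 1 and b > 1 the lowest values dominate).
    """
    xs = sorted(values) if by_rank else list(values)
    ws = kumaraswamy_weights(len(xs), a, b)
    return sum(w * x for w, x in zip(ws, xs))
```

With a = b = 1 the distribution is uniform and the result reduces to the arithmetic mean; other shape parameters shift the emphasis, for example toward the worst-rated segments when `by_rank=True`.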

Current state-of-the-art pixel-based video quality models for 4K resolution do not have access to explicit meta-information such as resolution and framerate, and they may not include implicit or explicit features that model the related effects on perceived video quality. In this paper, we propose a meta concept to extend state-of-the-art pixel-based models and develop hybrid models that incorporate meta-data such as framerate and resolution. Our general approach uses machine learning to incorporate the meta-data into the overall video quality prediction. To this end, we evaluate various machine learning approaches, such as SVR, random forest, and extreme gradient boosting trees, in terms of their suitability for hybrid model development. We use VMAF to demonstrate the validity of the meta-information concept. Our approach was tested on the publicly available AVT-VQDB-UHD-1 dataset. We show that the hybrid models achieve higher prediction accuracy than the underlying pixel-based model. Although the proof of concept is applied to VMAF, it can also be used with other pixel-based models.
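The hybrid idea amounts to appending meta-data to the pixel-based score before a learned regression. The sketch below is a minimal illustration under stated assumptions. It swaps the SVR, random forest, and gradient boosting learners evaluated in the paper for plain ordinary least squares so that it runs without third-party packages. The `Clip` type, the feature normalization (relative to 2160p and 60 fps), and the helper names are hypothetical. With scikit-learn, the same feature rows could be passed to a regressor such as `RandomForestRegressor` instead.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    vmaf: float       # score of the underlying pixel-based model (0..100)
    height: int       # vertical resolution in pixels, e.g. 2160 for UHD-1
    framerate: float  # frames per second


def features(c: Clip) -> list[float]:
    # Hypothetical hybrid feature vector: intercept, pixel-model score, and meta-data
    # (resolution and framerate normalized to 2160p and 60 fps).
    return [1.0, c.vmaf, c.height / 2160, c.framerate / 60]


def solve(m: list[list[float]], y: list[float]) -> list[float]:
    # Gaussian elimination with partial pivoting for a small square system m @ x = y.
    n = len(m)
    aug = [row[:] + [y[i]] for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (aug[i][n] - sum(aug[i][k] * x[k] for k in range(i + 1, n))) / aug[i][i]
    return x


def fit_hybrid(clips: list[Clip], mos: list[float]) -> list[float]:
    # Ordinary least squares via the normal equations (X^T X) w = X^T y.
    X = [features(c) for c in clips]
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * t for row, t in zip(X, mos)) for i in range(p)]
    return solve(xtx, xty)


def predict(coef: list[float], c: Clip) -> float:
    # Hybrid prediction: weighted sum of the pixel-model score and the meta-data features.
    return sum(w * f for w, f in zip(coef, features(c)))
```

A nonlinear learner can capture interactions, such as a framerate effect that depends on resolution, which this linear stand-in cannot. That is one reason tree ensembles and SVR are natural candidates for the hybrid model.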

The Video Multimethod Assessment Fusion (VMAF) method, proposed by Netflix, provides an automated estimate of perceptual video quality for each frame of a video sequence. By default, the per-frame quality scores are then averaged with the arithmetic mean to obtain an estimate of the overall Quality of Experience (QoE) of the sequence. In this paper, we validate the hypothesis that the arithmetic mean conceals bad-quality frames, leading to an overestimation of the delivered quality. We also show that the Minkowski mean, appropriately parametrized, closely approximates the subjectively measured QoE, yielding a higher Spearman Rank Correlation Coefficient (SRCC) and Pearson Correlation Coefficient (PCC) and a lower Root-Mean-Square Error (RMSE).
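The Minkowski (power) mean of n per-frame scores is (1/n · Σ sᵢᵖ)^(1/p). With p = 1 it equals the arithmetic mean, and with p < 1 it moves toward the worst frames, which is the behavior the hypothesis calls for. The abstract does not state the fitted exponent, so the p values below are illustrative only, and `minkowski_mean` is a hypothetical helper name.

```python
import math
from typing import Sequence


def minkowski_mean(scores: Sequence[float], p: float) -> float:
    """Power (Minkowski) mean of per-frame scores: (1/n * sum(s**p)) ** (1/p).

    p = 1 gives the arithmetic mean; p < 1 pulls the result toward the worst
    frames, p > 1 toward the best. p = 0 is the geometric-mean limit.
    """
    if not scores:
        raise ValueError("need at least one score")
    if min(scores) < 0:
        raise ValueError("scores must be non-negative (per-frame VMAF lies in 0..100)")
    n = len(scores)
    if p <= 0 and min(scores) == 0:
        raise ValueError("p <= 0 needs strictly positive scores")
    if p == 0:
        return math.exp(sum(math.log(s) for s in scores) / n)
    return (sum(s ** p for s in scores) / n) ** (1 / p)


# Nine good frames and one badly degraded frame.
frames = [90.0] * 9 + [10.0]
arithmetic = minkowski_mean(frames, 1)   # default VMAF pooling
pessimistic = minkowski_mean(frames, 0.5)  # penalizes the bad frame more
```

The single degraded frame lowers the p = 0.5 result more than the arithmetic mean, mirroring the claim that arithmetic pooling hides bad-quality frames.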

In recent years, with the introduction of powerful HMDs such as the Oculus Rift and HTC Vive Pro, the QoE that can be achieved with VR/360° videos has increased substantially. Unfortunately, no standardized guidelines, methodologies, or protocols exist for conducting tests with human subjects and evaluating the quality of 360° videos. In this paper, we present a set of test protocols for evaluating the quality of 360° videos using HMDs. To this end, we review the state of the art in the assessment of 360° videos and summarize its results. We also summarize the methodological approaches and results of several subjective experiments conducted at our lab under different contextual conditions. In the first two experiments, 1a and 1b, the performance of two subjective test methods, Double-Stimulus Impairment Scale (DSIS) and Modified Absolute Category Rating (M-ACR), was compared under different contextual conditions. In experiment 2, the performance of three subjective test methods, DSIS, M-ACR, and Absolute Category Rating (ACR), was compared, this time without varying the contextual conditions. Building on the reliability and general applicability of the procedure across the different tests, we present a methodological framework for 360° video quality assessment. Besides video or media quality judgments, the procedure comprises the assessment of presence and simulator sickness, for which different methods were compared. Furthermore, the accompanying head-rotation data can be used to analyze both content-related and quality-related aspects of viewing behaviour. Based on the results, the implications of different contextual settings are discussed.
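Subjective test methods such as ACR, DSIS, and M-ACR are often compared by the reliability of their ratings. One common indicator is the width of the confidence interval around each condition's mean opinion score (MOS). The sketch below assumes ratings grouped per test condition and uses a normal approximation for the interval. It illustrates one way to make such a comparison and is not necessarily the analysis used in these experiments. The helper names are hypothetical.

```python
import statistics
from statistics import NormalDist


def mos_with_ci(ratings: list[float], confidence: float = 0.95) -> tuple[float, float]:
    """Return (MOS, half-width of the confidence interval) for one test condition.

    Normal approximation; for small panels a Student-t quantile gives a slightly wider interval.
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    mos = statistics.mean(ratings)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * statistics.stdev(ratings) / len(ratings) ** 0.5
    return mos, half_width


def mean_ci_width(conditions: dict[str, list[float]]) -> float:
    # Average CI half-width over all conditions rated with one test method;
    # a smaller value indicates more consistent ratings.
    return statistics.mean(mos_with_ci(r)[1] for r in conditions.values())
```

Computing `mean_ci_width` separately for the DSIS, M-ACR, and ACR sessions gives a single reliability figure per method that can be compared across contextual conditions.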

Research on the Quality of Experience (QoE) of 2D video streaming is well established. However, a new video format is emerging and gaining popularity and availability: VR 360-degree video. The processing and transmission of 360-degree videos bring new challenges, such as large bandwidth requirements and the occurrence of different distortions. The viewing experience also differs substantially from 2D video: it offers more interactive freedom in choosing the viewing angle, but it can also be more demanding and cause cybersickness. Further research specifically on the QoE of 360-degree videos is therefore required. The goal of this study is to complement earlier research (Tran, Ngoc, Pham, Jung, and Thang, 2017) by testing the effects of quality degradation, freezing, and content on the QoE of 360-degree videos. Data are gathered through subjective tests in which participants watch degraded versions of 360-degree videos through an HMD. After each video, they answer questions regarding their quality perception, experience, perceptual load, and cybersickness. Results of the first part show rather low QoE ratings overall, which decrease further as quality is degraded and freezing events are added. Cybersickness was not found to be an issue.

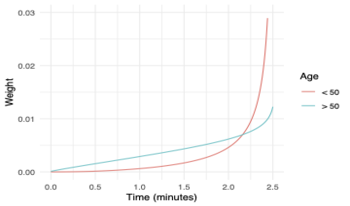

We present the results of a Quality of Experience (QoE) evaluation of 360° immersive video in university education. Fourth-year Veterinary Medicine students virtually attended practical lessons on Surgical Pathology and Surgery related to horses, which had been recorded in immersive 360° video format. One hundred students participated in the experience. They evaluated it through an extensive questionnaire covering several QoE factors, including presence, audiovisual quality, satisfaction, and cybersickness. Overall, 79% rated the experience as excellent or good, the students acknowledged that implementing VR as a didactic tool improved the learning process, and 91% reported that they would recommend it to other students. Female students consistently gave slightly higher average scores than their male counterparts, although the differences were mostly within confidence intervals. The strongest inter-gender differences appeared in the active social presence dimensions of the Temple Presence Inventory. The study also evaluates the suitability of synthetic measurement protocols such as the Distributed Reality Experience Questionnaire (DREQ) and the Net Promoter Score (NPS). We show that NPS is a valid tool for QoE analysis, but its clustering boundary values must be adapted to the specific characteristics of the experiment population.
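The Net Promoter Score is the percentage of promoters minus the percentage of detractors on a 0 to 10 "would you recommend" scale. Conventionally, 9 to 10 counts as a promoter and 0 to 6 as a detractor. The sketch below exposes both boundaries as parameters, which is the adaptation the last sentence refers to. The adapted boundary values used in the study are not given here, so the alternative values in the example are purely hypothetical.

```python
def net_promoter_score(ratings: list[int], promoter_min: int = 9, detractor_max: int = 6) -> float:
    """NPS on a 0-10 scale: % promoters minus % detractors (range -100..100).

    Defaults are the conventional boundaries (promoters 9-10, detractors 0-6);
    both are parameters so they can be adapted to a particular population.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    if not detractor_max < promoter_min:
        raise ValueError("detractor_max must be below promoter_min")
    promoters = sum(r >= promoter_min for r in ratings)
    detractors = sum(r <= detractor_max for r in ratings)
    return 100.0 * (promoters - detractors) / len(ratings)


answers = [10, 9, 8, 7, 6, 3]
standard = net_promoter_score(answers)  # conventional boundaries
shifted = net_promoter_score(answers, promoter_min=8, detractor_max=5)  # hypothetical adapted boundaries
```

The same answers can yield quite different scores under different boundaries, which is why the boundaries may need recalibration for a population that habitually rates higher or lower than the population the conventional cut-offs were designed for.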

In this paper, we conducted two different studies. Our first study deals with measuring flickering in HMDs using a self-developed measurement tool. To this end, we investigated several combinations of software 360° video players and framerates. We found that only 90 fps content leads to ideal, smooth playout without stuttering or black frame insertion. In addition, playing out 360° content at lower framerates, especially 25 and 50 fps, should be avoided. In our second study, we investigated the influence of higher framerates of various 360° videos on the perceived quality. To this end, we conducted a subjective test with 12 expert viewers. The participants watched native 30 fps as well as interpolated 90 fps 360° content; for this test, we also rendered two contents, which are published along with the paper. We found that 90 fps significantly improves the perceived quality. Additionally, we compared the performance of three motion interpolation algorithms. The results show that motion interpolation can be used in post-production to improve the perceived quality.
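One part of the playout problem at 25 and 50 fps can be illustrated with frame cadence. On a display refreshing at 90 Hz, the refresh rate of HTC Vive-class HMDs, each source frame is shown for a whole number of refreshes. When 90 is not a multiple of the content framerate, those counts become uneven. The sketch below is a simplified model that assumes a player always shows the most recent decoded frame. It ignores real player behavior such as black frame insertion, so it cannot explain every effect the measurements found. The helper names are hypothetical.

```python
def cadence(content_fps: int, refresh_hz: int = 90, frames: int = 10) -> list[int]:
    """Number of display refreshes each of the first `frames` source frames stays on screen.

    Refresh k shows source frame floor(k * content_fps / refresh_hz), so frame i occupies
    refreshes ceil(i * refresh_hz / content_fps) up to ceil((i + 1) * refresh_hz / content_fps) - 1.
    """
    def ceil_div(a: int, b: int) -> int:
        return -(-a // b)

    return [
        ceil_div((i + 1) * refresh_hz, content_fps) - ceil_div(i * refresh_hz, content_fps)
        for i in range(frames)
    ]


def has_uniform_cadence(content_fps: int, refresh_hz: int = 90) -> bool:
    # Every frame is held equally long only if the refresh rate is an integer multiple of the framerate.
    return refresh_hz % content_fps == 0


for fps in (25, 30, 50, 90):
    print(fps, cadence(fps, frames=5), has_uniform_cadence(fps))
```

At 25 fps frames alternate between four and three refreshes, and at 50 fps between two and one. This irregular hold time is perceived as judder, while 90 fps maps one frame to each refresh.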

In this paper, we compare the 360° video QoE obtained with a higher-resolution Head-Mounted Display (HMD) such as the HTC Vive Pro to that obtained with a lower-resolution HMD such as the HTC Vive. Furthermore, we evaluate the difference in perceived quality for entertainment-type 360° content in 4K/6K/8K resolutions at typical high-quality bitrates. In addition, we evaluate which parts of the video people focus on while watching omnidirectional videos. To this end, we conducted three subjective tests, using the HTC Vive in the first test and the HTC Vive Pro in the other two. The results show that the higher resolution of the Vive Pro seems to make it easier for people to judge quality, as indicated by a smaller deviation among the resulting quality ratings. Furthermore, we found no significant difference between the quality scores for 6K and 8K resolution at the highest bitrate. We also compared the viewing behavior when content is viewed for the first time with the behavior when the same content is viewed again multiple times. The different representations of the contents were explored similarly, probably because participants find and compare specific parts of the 360° video that are suitable for rating the quality.
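A simple way to compare exploration behavior between a first viewing and repeated viewings is to bin head-yaw samples into a histogram and measure the overlap of the two histograms. The sketch below assumes yaw angles in degrees within [-180, 180). It uses histogram intersection as the similarity measure, which is a choice made for illustration and not necessarily the analysis performed in these tests. The bin count and helper names are hypothetical.

```python
def yaw_histogram(yaw_deg: list[float], bins: int = 12) -> list[float]:
    """Normalized histogram of head-yaw angles in degrees, wrapping around at +/-180."""
    if not yaw_deg:
        raise ValueError("need at least one sample")
    width = 360 / bins
    counts = [0] * bins
    for y in yaw_deg:
        idx = int(((y + 180) % 360) // width)
        counts[min(idx, bins - 1)] += 1  # guard against float rounding at the upper edge
    return [c / len(yaw_deg) for c in counts]


def histogram_intersection(p: list[float], q: list[float]) -> float:
    # 1.0 = identical exploration pattern, 0.0 = no overlap between viewed directions.
    return sum(min(a, b) for a, b in zip(p, q))
```

A similarity close to 1.0 between first and repeated viewings would be consistent with the observation that participants explored the different representations similarly. Pitch could be added as a second dimension if vertical exploration matters for the content.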

Adaptive streaming is fast becoming the most widely used method for delivering video to end users over the internet. The ITU-T P.1203 standard is the first standardized quality of experience model for audiovisual HTTP-based adaptive streaming. The model in this recommendation was trained and validated for H.264 and resolutions up to and including full-HD. This paper provides an extension of the existing standardized short-term video quality model, Mode 0, to new codecs, i.e., H.265, VP9, and AV1, and to resolutions larger than full-HD (e.g., UHD-1). The extension is based on two subjective video quality tests, which used a total of 13 different source contents of 10 seconds each. These sources were encoded at resolutions ranging from 360p to 2160p and at various quality levels using the H.265, VP9, and AV1 codecs. The subjective results from the two tests were then used to derive a mapping/correction function for P.1203.1 to handle the new codecs and resolutions. It should be noted that the standardized model was not retrained with the new subjective data; instead, only a mapping/correction function was derived from the two subjective test results to extend the existing standard to the new codecs and resolutions.