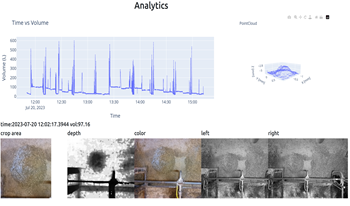

Accurate measurement of daily feed consumption in dairy cattle is important for assessing animal health and feed efficiency. Traditionally, feed intake has been measured manually or averaged over groups of animals, which introduces human error and yields inconsistent measurements for individual animals. We therefore developed a scalable, non-invasive analytics system that uses depth information from stereo cameras to consistently measure the feed offered and report findings throughout the day. A top-down array of cameras faces the available feed, measures feed depth, projects the depth to a 3-dimensional (3D) mesh, and finally estimates feed volume from the 3D projection. Our successful experiments at the Purdue University Dairy, which houses 230 cows, demonstrate the system's robustness and scalability for larger operations, with significant potential for optimizing feed management on dairy farms and thereby improving animal health and sustainability in the dairy industry.
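
The depth-to-volume step can be illustrated with a minimal sketch. It is not the system's actual pipeline, which projects depth to a 3D mesh; instead it assumes a simplified height-map integration in which the camera looks straight down, the distance to the empty feed surface (`floor_depth_m`) is known, and every pixel covers the same ground area (`pixel_area_m2`). The function name and all parameters are hypothetical.

```python
import numpy as np

def estimate_feed_volume(depth_m, floor_depth_m, pixel_area_m2):
    """Approximate feed volume (m^3) from a top-down depth map.

    Assumptions (not from the paper): orthographic top-down view,
    a known camera-to-floor distance, and a constant ground footprint
    per pixel.
    """
    depth = np.asarray(depth_m, dtype=float)
    # Feed height is how much closer the surface is than the bare floor.
    height = np.clip(floor_depth_m - depth, 0.0, None)
    # Invalid stereo pixels (NaN) contribute no volume.
    height = np.where(np.isfinite(height), height, 0.0)
    # Sum of per-pixel columns: height times footprint area.
    return float(height.sum() * pixel_area_m2)

# Synthetic example: a 4 x 4 pixel pile, 0.5 m tall, on a floor 2 m away.
depth_map = np.full((10, 10), 2.0)
depth_map[2:6, 2:6] = 1.5
volume = estimate_feed_volume(depth_map, floor_depth_m=2.0, pixel_area_m2=0.01)
```

In a perspective camera the footprint of a pixel grows with distance, so a real deployment would need the camera intrinsics or a mesh-based integration rather than a constant `pixel_area_m2`.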

Point clouds are essential for storage and transmission of 3D content. As they can entail significant volumes of data, point cloud compression is crucial for practical usage. Recently, point cloud geometry compression approaches based on deep neural networks have been explored. In this paper, we evaluate how well the voxel-based loss functions typically used to train these networks predict perceptual quality. We find that the commonly used focal loss and weighted binary cross entropy are poorly correlated with human perception. We thus propose a perceptual loss function for 3D point clouds which outperforms existing loss functions on the ICIP2020 subjective dataset. In addition, we propose a novel truncated distance field voxel grid representation and find that, compared to a binary representation, it leads to sparser latent spaces and to loss functions that are more correlated with perceived visual quality. The source code is available at https://github.com/mauriceqch/2021_pc_perceptual_loss.
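
To make the voxel-based quantities named above concrete, the following sketch shows common textbook forms of weighted binary cross entropy and focal loss on a binary occupancy grid, together with one plausible truncated distance field. The exact definitions, weights, and truncation used in the paper are not stated here, so `pos_weight`, `alpha`, `gamma`, `trunc`, and the brute-force distance computation are all assumptions made for illustration.

```python
import numpy as np

def weighted_bce(y, p, pos_weight=1.0, eps=1e-7):
    """Binary cross entropy with an extra weight on occupied voxels."""
    p = np.clip(p, eps, 1 - eps)
    loss = -(pos_weight * y * np.log(p) + (1 - y) * np.log(1 - p))
    return float(loss.mean())

def focal_loss(y, p, alpha=0.25, gamma=2.0, eps=1e-7):
    """Focal loss: down-weights voxels that are already well classified."""
    p = np.clip(p, eps, 1 - eps)
    p_t = np.where(y == 1, p, 1 - p)            # probability of the true class
    alpha_t = np.where(y == 1, alpha, 1 - alpha)
    return float((-alpha_t * (1 - p_t) ** gamma * np.log(p_t)).mean())

def truncated_distance_field(occ, trunc=2.0):
    """Distance from each voxel to the nearest occupied voxel,
    clipped at `trunc` and scaled to [0, 1] (assumed definition).
    Brute force, so only suitable for small grids."""
    occ = np.asarray(occ, dtype=bool)
    pts = np.argwhere(occ)
    if len(pts) == 0:
        return np.ones(occ.shape)
    grid = np.stack(np.indices(occ.shape), axis=-1).reshape(-1, occ.ndim)
    dist = np.sqrt(((grid[:, None, :] - pts[None, :, :]) ** 2).sum(-1)).min(axis=1)
    return (np.minimum(dist, trunc) / trunc).reshape(occ.shape)

# Tiny example: a single occupied voxel in a 4 x 4 x 4 grid.
occ = np.zeros((4, 4, 4))
occ[0, 0, 0] = 1
tdf = truncated_distance_field(occ)
uniform = np.full(occ.shape, 0.5)               # an uninformative prediction
bce_uniform = weighted_bce(occ, uniform)
fl_uniform = focal_loss(occ, uniform)
```

Unlike the binary grid, the distance field varies smoothly near the surface, which is one intuition for why losses computed on it might track perceived geometric error more closely.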