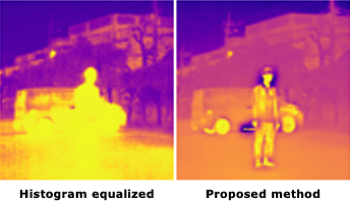

The intensity of long-wave infrared (LWIR) camera images often has a dynamic range exceeding 8 bits, so these images must be visualized using techniques such as histogram equalization. Many visualization methods do not account for noise, which must be handled in real-world situations. We propose a novel LWIR image visualization method based on gradient-domain processing, or gradient mapping. Processing based on intensity and gradient power in the gradient domain enables LWIR images to be visualized while reducing noise. We evaluate the proposed method quantitatively and qualitatively and demonstrate its effectiveness.
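To make the idea of gradient-domain visualization concrete, here is a minimal 1D sketch under stated assumptions; it is not the authors' method. The function `gradient_map_1d` and its parameters `noise_thresh` and `alpha` are hypothetical. Weak gradients are treated as noise and zeroed, strong gradients are compressed as |g|^alpha, and the result is reintegrated and rescaled to 8 bits. In 1D, reintegration is an exact cumulative sum; a 2D image would instead require solving a Poisson equation.

```python
import math


def gradient_map_1d(signal, noise_thresh, alpha=0.5):
    """Map a high-bit-depth 1D signal to 8-bit values in the gradient domain.

    Hypothetical simplification: gradients weaker than `noise_thresh` are
    treated as noise and set to zero; stronger gradients are compressed
    as |g| ** alpha. The signal is then reintegrated by cumulative summation
    and linearly rescaled to [0, 255].
    """
    grads = [b - a for a, b in zip(signal, signal[1:])]
    mapped = [
        0.0 if abs(g) < noise_thresh else math.copysign(abs(g) ** alpha, g)
        for g in grads
    ]
    # Reintegrate: in 1D this is exact (a 2D image needs a Poisson solve).
    out = [0.0]
    for g in mapped:
        out.append(out[-1] + g)
    lo, hi = min(out), max(out)
    span = (hi - lo) or 1.0  # avoid division by zero for flat signals
    return [round(255 * (v - lo) / span) for v in out]


# A made-up 14-bit scanline: two flat regions with small noise and one edge.
scanline = [1000, 1002, 999, 1001, 9000, 9003, 8998, 9001]
print(gradient_map_1d(scanline, noise_thresh=10))
```

The small fluctuations fall below the threshold and are flattened, while the single strong edge survives and spans the full 8-bit range.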

Conventional image quality metrics (IQMs), such as PSNR and SSIM, are designed for perceptually uniform, gamma-encoded pixel values and cannot be directly applied to perceptually non-uniform, linear high-dynamic-range (HDR) colors. Similarly, most available datasets consist of standard-dynamic-range (SDR) images collected under standard and possibly uncontrolled viewing conditions. Popular pre-trained neural networks are likewise intended for SDR inputs, restricting their direct application to HDR content. On the other hand, training HDR models from scratch is challenging due to the limited availability of HDR data. In this work, we explore more effective approaches for training deep-learning-based models for image quality assessment (IQA) on HDR data. We leverage networks pre-trained on SDR data (source domain) and re-target these models to HDR (target domain) with additional fine-tuning and domain adaptation. We validate our methods on the available HDR IQA datasets, demonstrating that models trained with our combined recipe outperform previous baselines, converge much faster, and reliably generalize to HDR inputs.
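The sketch below illustrates why PSNR on linear HDR values can be misleading. It uses made-up luminance values and a plain log10 encoding as a crude stand-in for a proper perceptually uniform transform; it is not the paper's approach, which trains deep IQA models. A 100% relative error in a dark pixel is compared against a 1% relative error in a bright pixel.

```python
import math


def psnr(ref, test, peak):
    """Peak signal-to-noise ratio in dB for two equal-length sequences."""
    mse = sum((r - t) ** 2 for r, t in zip(ref, test)) / len(ref)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


def log_encode(lum, floor=1e-3):
    """Crude stand-in for a perceptually uniform encoding: log10 luminance."""
    return [math.log10(max(v, floor)) for v in lum]


ref = [0.01, 1.0, 1000.0]         # made-up linear luminance values
dark_err = [0.02, 1.0, 1000.0]    # dark pixel doubled (100% relative error)
bright_err = [0.01, 1.0, 1010.0]  # bright pixel +1% (1% relative error)

# Linear PSNR: peak is the maximum luminance.
lin_dark = psnr(ref, dark_err, peak=1000.0)
lin_bright = psnr(ref, bright_err, peak=1000.0)

# Log-encoded PSNR: peak is the encoded range log10(1000) - log10(1e-3) = 6.
log_dark = psnr(log_encode(ref), log_encode(dark_err), peak=6.0)
log_bright = psnr(log_encode(ref), log_encode(bright_err), peak=6.0)

print(f"linear: dark={lin_dark:.1f} dB, bright={lin_bright:.1f} dB")
print(f"log:    dark={log_dark:.1f} dB, bright={log_bright:.1f} dB")
```

On linear values, PSNR rates the large relative error in the dark pixel as the better image, because absolute errors in bright regions dominate the MSE. After log encoding, the ranking flips.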

It has been rigorously demonstrated that an end-to-end (E2E) differentiable formulation of a deep neural network can turn a complex recognition problem into a unified optimization task that can be solved by a gradient descent method. Although E2E network optimization yields a powerful fitting ability, the joint optimization of layers is known to potentially lead to situations in which layers co-adapt to one another in complex ways that harm generalization. This work aims to numerically evaluate, with careful hyperparameter tuning, the generalization ability of a particular non-E2E network optimization approach known as FOCA (Feature-extractor Optimization through Classifier Anonymization), which helps to avoid complex co-adaptation. In this report, we present intriguing empirical results in which the non-E2E trained models consistently outperform the corresponding E2E trained models on three image-classification datasets. We further show that E2E network fine-tuning, applied after feature-extractor optimization by FOCA and subsequent classifier optimization with the feature extractor fixed, gives no improvement in test accuracy. The source code is available at https://github.com/DensoITLab/FOCA-v1.
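The toy sketch below illustrates only the staging of non-E2E training, using a scalar model `y = w2 * (w1 * x)`. It is not FOCA. In stage 1, the "feature extractor" `w1` is trained against a classifier weight `w2` drawn at random at every step, a loose stand-in for classifier anonymization. In stage 2, `w1` is frozen and `w2` is fitted by least squares. The data, function names, and the uniform sampling range are hypothetical. The optional stage-3 E2E fine-tuning is omitted because the report finds it gives no test-accuracy gain.

```python
import random

# Hypothetical toy data: y = 3 * x stands in for a labelled dataset.
xs = [-1.0, -0.5, 0.5, 1.0]
ys = [3.0 * x for x in xs]


def stage1_feature_extractor(steps=200, lr=0.1, seed=0):
    """Train scalar 'feature extractor' w1 against randomly drawn classifiers.

    Each step samples a fresh classifier weight w2, so w1 cannot co-adapt
    to one specific classifier (loose analogue, not the FOCA algorithm).
    """
    rng = random.Random(seed)
    w1 = 0.1
    for _ in range(steps):
        w2 = rng.uniform(0.5, 1.5)  # anonymous classifier for this step
        # d/dw1 of mean((w2*w1*x - y)^2) = mean(2*(w2*w1*x - y)*w2*x)
        grad = sum(2 * (w2 * w1 * x - y) * w2 * x for x, y in zip(xs, ys)) / len(xs)
        w1 -= lr * grad
    return w1


def stage2_classifier(w1):
    """Fit classifier w2 by closed-form least squares with w1 frozen."""
    feats = [w1 * x for x in xs]
    return sum(h * y for h, y in zip(feats, ys)) / sum(h * h for h in feats)


w1 = stage1_feature_extractor()
w2 = stage2_classifier(w1)
print(f"w1={w1:.3f}, w2={w2:.3f}, end-to-end slope={w1 * w2:.6f}")
```

Because stage 2 solves for `w2` exactly given the frozen features, the composite slope recovers the target even though `w1` was never tuned to a particular classifier.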

The majority of internet traffic is video content. This drives the demand for video compression that delivers high-quality video at low target bitrates. This paper investigates the impact of adjusting the rate-distortion equation on compression performance. A constant of proportionality, k, is used to modify the Lagrange multiplier used in H.265 (HEVC). Direct optimisation methods are deployed to maximise the BD-Rate improvement for a particular clip, leading to up to 21% BD-Rate improvement for an individual clip. Furthermore, we use a more realistic corpus of material provided by YouTube. The results show that direct optimisation using BD-Rate as the objective function can lead to further bitrate savings that are not available with previous approaches.
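The minimal sketch below shows how scaling the Lagrange multiplier by k changes an encoder decision. It assumes the standard rate-distortion cost J = D + k·λ·R. The mode names, distortion, rate, and λ values are made up for illustration and are not HEVC reference-encoder numbers.

```python
def choose_mode(candidates, lam, k=1.0):
    """Return the candidate minimising J = D + k * lam * R.

    Each candidate is a (name, distortion, rate_bits) tuple; k scales the
    Lagrange multiplier, trading distortion against rate.
    """
    return min(candidates, key=lambda m: m[1] + k * lam * m[2])


# Hypothetical candidates: an expensive high-quality mode and a cheap skip mode.
modes = [("intra", 100.0, 50), ("skip", 400.0, 2)]

print(choose_mode(modes, lam=5.0)[0])         # J: intra=350, skip=410
print(choose_mode(modes, lam=5.0, k=2.0)[0])  # J: intra=600, skip=420
```

Raising k penalises rate more heavily, so the encoder switches to the cheaper mode. A direct optimiser would search over k to maximise the BD-Rate improvement measured across a clip's encodes.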

We propose a novel deep-learning-based chemometric data analysis technique. We train an L2-regularized sparse autoencoder end-to-end to reduce the size of the feature vector, addressing the classic problem of the curse of dimensionality in chemometric data analysis. We introduce a novel technique for automatically selecting the nodes in the hidden layer of an autoencoder through Pareto optimization. A Gaussian process regressor is then applied to the reduced feature vector for regression. We evaluate our technique on orange juice and wine datasets and compare the results against three state-of-the-art methods. Quantitative results, reported as normalized mean square error (NMSE), show a considerable improvement over the state of the art.
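The sketch below illustrates the Pareto-optimization step under a simple assumption: each candidate hidden-layer size is scored on two objectives to minimise, the number of nodes and a validation error. The function keeps the non-dominated candidates. The candidate numbers are made up for illustration and are not results from the paper. The paper's exact objectives and final selection rule may differ.

```python
def pareto_front(candidates):
    """Return the (n_nodes, error) pairs not dominated by any other candidate.

    A candidate dominates another if it is no worse in both objectives
    (fewer or equal nodes, lower or equal error) and strictly better in one.
    """
    front = []
    for c in candidates:
        dominated = any(
            o[0] <= c[0] and o[1] <= c[1] and (o[0] < c[0] or o[1] < c[1])
            for o in candidates
        )
        if not dominated:
            front.append(c)
    return sorted(front)


# Hypothetical (hidden nodes, validation error) pairs for illustration only.
trials = [(8, 0.30), (16, 0.12), (32, 0.10), (64, 0.10), (24, 0.20)]
print(pareto_front(trials))
```

Here 64 nodes is discarded because 32 nodes reaches the same error with fewer nodes, and 24 nodes is discarded because 16 nodes is better on both objectives. The final choice is then made among the remaining trade-off points.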

Automated driving requires fusing information from a multitude of sensors, such as cameras, radars, and lidars mounted around the car, to handle various driving scenarios, e.g., highway driving, parking, urban driving, and traffic jams. Fusion also enables better functional safety by handling challenging conditions such as adverse weather, varying time of day, and occlusion. The paper gives an overview of popular fusion techniques, namely the Kalman filter and its variants, e.g., the extended Kalman filter and the unscented Kalman filter. The paper proposes a choice of fusion technique for a given sensor configuration and its model parameters. The second part of the paper focuses on an efficient solution for series production on an embedded platform, Texas Instruments' TDAx automotive SoC. Performance is benchmarked separately for the "predict" and "update" phases for different sensor modalities. For a typical L3/L4 automated driving configuration consisting of multiple cameras, radars, and lidars, fusion can be supported in real time by a single DSP using the proposed techniques, enabling a cost-optimized solution.
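To show the separate "predict" and "update" phases, here is a minimal scalar Kalman filter. It is a simplification that assumes a constant-state motion model and one scalar measurement per sensor. The paper's filters use vector states and sensor-specific models, and EKF/UKF variants replace the linear steps for nonlinear models. The class name and all noise values below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScalarKF:
    x: float  # state estimate (e.g. range to an object)
    p: float  # variance of the estimate
    q: float  # process noise variance added per predict step

    def predict(self, u=0.0):
        """Predict phase: propagate the state and grow its uncertainty."""
        self.x += u  # constant-state model with optional control offset u
        self.p += self.q

    def update(self, z, r):
        """Update phase: fuse measurement z with noise variance r."""
        k = self.p / (self.p + r)  # Kalman gain: weight of the new measurement
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


kf = ScalarKF(x=0.0, p=1.0, q=0.0)
kf.predict()
kf.update(z=10.0, r=1.0)  # hypothetical sensor A: gain 0.5 -> x=5.0, p=0.5
kf.update(z=8.0, r=0.5)   # hypothetical sensor B: gain 0.5 -> x=6.5, p=0.25
print(kf.x, kf.p)
```

Each sensor modality enters only through its own `update` call and noise variance `r`. This is why the two phases can be benchmarked separately per modality.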