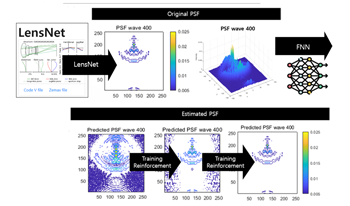

CMOS image sensor simulations take as input scene data expressed as wavelength-specific (spectral) radiance, so applying lens point spread function (PSF) effects requires a monochromatic PSF for each wavelength. However, most lens manufacturers supply clients with lens files that contain polychromatic PSFs, and when these lens design files are opened, typically only the weight assigned to each wavelength can be recovered. Decomposing a polychromatic PSF into its monochromatic constituents is therefore essential for two reasons: it allows lens effects to be applied accurately in CMOS simulations, and it supports detailed analysis of how light of each wavelength behaves in the optical system. To address this need, we use deep learning to perform the decomposition. From lens data obtained from LensNet, we construct polychromatic PSFs that serve as input to a deep neural network. The network is trained to predict the corresponding monochromatic PSFs, revealing the distinct characteristics of each wavelength. We validate the approach through extensive testing and visualization, including 2D contour plots and 3D surface plots, which confirm that the model accurately extracts the monochromatic PSFs. Beyond its immediate role in optical analysis, this decomposition provides a basis for future work on neural network architectures and machine learning methods that further refine the extraction.
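To make the input of the decomposition concrete, the sketch below builds a polychromatic PSF from monochromatic ones. It assumes the common lens-design convention that the polychromatic PSF is the weight-normalized sum $P(x,y) = \sum_k w_k P_k(x,y) / \sum_k w_k$, where $w_k$ is the weight of wavelength $k$. The network described above learns the inverse of this mapping. The Gaussian `gaussian_psf`, the wavelengths, weights, and blur widths are all hypothetical placeholders, not LensNet data or the authors' pipeline.

```python
import math

def gaussian_psf(size, sigma):
    """Hypothetical stand-in for a monochromatic PSF: an isotropic 2D Gaussian
    sampled on a size x size grid and normalized to unit energy."""
    c = (size - 1) / 2.0
    grid = [[math.exp(-((x - c) ** 2 + (y - c) ** 2) / (2.0 * sigma ** 2))
             for x in range(size)] for y in range(size)]
    total = sum(sum(row) for row in grid)
    return [[v / total for v in row] for row in grid]

def polychromatic_psf(mono_psfs, weights):
    """Weighted sum of monochromatic PSFs, normalized by the total weight
    (assumed forward model; the real lens files may differ)."""
    if len(mono_psfs) != len(weights):
        raise ValueError("need one weight per wavelength")
    wsum = sum(weights)
    size = len(mono_psfs[0])
    return [[sum(w * psf[y][x] for psf, w in zip(mono_psfs, weights)) / wsum
             for x in range(size)] for y in range(size)]

# Hypothetical wavelengths (nm), wavelength weights, and blur widths (pixels).
wavelengths = [450, 550, 650]
weights = [0.3, 1.0, 0.5]
sigmas = [1.2, 1.0, 1.4]
monos = [gaussian_psf(15, s) for s in sigmas]
poly = polychromatic_psf(monos, weights)
print(round(sum(sum(r) for r in poly), 6))
```

Because only `poly` and `weights` are available from a lens file, many different sets of `monos` produce the same result. This ambiguity is why a learned prior, such as the trained network, is needed to recover plausible monochromatic PSFs.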