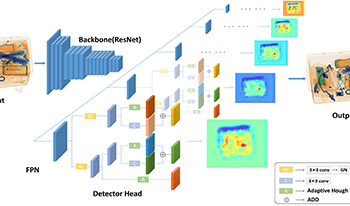

Recently, X-ray prohibited item detection has been widely used for security inspection. In practical applications, items in luggage often overlap severely, which causes occlusion. In this paper, we address prohibited item detection under occlusion from the perspective of the compositional model. To this end, we propose VotingNet, a novel network for occluded prohibited item detection. VotingNet incorporates an Adaptive Hough Voting Module (AHVM), based on the generalized Hough transform, into widely used detectors. AHVM consists of an Attention Block (AB) and a Voting Block (VB). AB divides the voting area into multiple regions and leverages an extended Convolutional Block Attention Module (CBAM) to learn adaptive weights for inter-region and intra-region features. In this way, information from the unoccluded parts of prohibited items is fully exploited. VB collects votes from the region feature maps produced by AB. To further improve performance under occlusion, we combine AHVM with the original convolutional branches, exploiting both the robustness of the compositional model and the strong representational capability of convolution. Experimental results on the OPIXray and PIDray datasets show that VotingNet improves the performance of widely used detectors, including representative anchor-based and anchor-free detectors.
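The core idea of weighted Hough voting can be illustrated with a small sketch: each region casts a vote for the object centre, and each vote is scaled by an adaptive weight so that regions covering unoccluded parts dominate the accumulator. This is not VotingNet's implementation. The fixed integer offsets and the softmax over hypothetical per-region scores (`region_scores`) are assumptions standing in for the learned features and CBAM-based attention of AB and VB.

```python
import math
from typing import List, Sequence, Tuple

# (y, x, dy, dx): region location and its predicted offset to the object centre
Vote = Tuple[int, int, int, int]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax; stands in for learned attention weights (assumption)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_hough_vote(votes: Sequence[Vote], region_scores: Sequence[float],
                        height: int, width: int) -> List[List[float]]:
    """Accumulate weighted centre votes into a height x width grid."""
    weights = softmax(region_scores)
    acc = [[0.0] * width for _ in range(height)]
    for (y, x, dy, dx), w in zip(votes, weights):
        cy, cx = y + dy, x + dx
        # Votes that fall outside the grid are discarded
        if 0 <= cy < height and 0 <= cx < width:
            acc[cy][cx] += w
    return acc


def peak(acc: List[List[float]]) -> Tuple[int, int]:
    """Return the (row, col) of the accumulator maximum, i.e. the voted centre."""
    _, y, x = max((v, y, x) for y, row in enumerate(acc) for x, v in enumerate(row))
    return y, x
```

For example, three unoccluded regions voting for `(5, 5)` with high scores outweigh one occluded region that votes for `(9, 9)` with a low score, so `peak(...)` recovers `(5, 5)`.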

Achieving scale invariance and reducing the high miss rate for small objects are among the most challenging issues in object detection, and failures on either front often lead to inaccurate results. This research aims to provide an accurate detection model for crowd counting by focusing on human head detection in natural scenes drawn from the publicly available Casablanca, Hollywood-Heads, and SCUT-HEAD datasets. In this study, we fine-tuned YOLOv5, an object detection architecture based on a deep convolutional neural network (CNN), using a transfer learning approach, and evaluated the model using mean average precision (mAP), precision, and recall. Training on one dataset and testing on another leads to inaccurate results because the datasets contain different types of heads. Another main contribution of our research is therefore combining the three datasets into a single dataset that covers small, medium, and large heads. The experimental results show that the fine-tuned YOLOv5 model substantially improves small-head detection in crowded scenes compared with baseline approaches such as Faster R-CNN and the VGG-16-based SSD MultiBox Detector.
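The evaluation metrics mentioned above can be made concrete with a minimal sketch for a single class ("head") and a single image. It assumes boxes in `(x1, y1, x2, y2)` format, greedy matching of score-ranked detections to unmatched ground-truth boxes at an IoU threshold of 0.5, and all-point interpolated AP. These are common conventions, not necessarily the exact protocol used in this study; with only one class, mAP equals this AP.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_ap(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box],
                        iou_thr: float = 0.5) -> Tuple[float, float, float]:
    """Return final precision, final recall, and all-point interpolated AP."""
    ranked = sorted(dets, key=lambda d: d[0], reverse=True)
    matched = [False] * len(gts)
    tp_flags: List[int] = []
    for _, box in ranked:
        # Greedily match to the best still-unmatched ground truth above the threshold
        best_j, best_iou = -1, iou_thr
        for j, g in enumerate(gts):
            if not matched[j]:
                o = iou(box, g)
                if o >= best_iou:
                    best_j, best_iou = j, o
        if best_j >= 0:
            matched[best_j] = True
        tp_flags.append(1 if best_j >= 0 else 0)

    precisions, recalls, tp = [], [], 0
    for k, flag in enumerate(tp_flags, start=1):
        tp += flag
        precisions.append(tp / k)
        recalls.append(tp / len(gts) if gts else 0.0)

    # All-point interpolation: precision at each recall level is the max to its right
    ap, prev_r = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_r) * max(precisions[i:])
        prev_r = r
    final_p = precisions[-1] if precisions else 0.0
    final_r = recalls[-1] if recalls else 0.0
    return final_p, final_r, ap
```

With two ground-truth heads and three detections (a correct one, a false positive, then a second correct one), this yields precision 2/3, recall 1.0, and AP 5/6.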

Traditional image signal processors (ISPs) are primarily designed and optimized to improve the image quality perceived by humans. However, optimal perceptual image quality does not always translate into optimal performance for computer vision applications. In [1], Wu et al. proposed a set of methods, termed VisionISP, to enhance and optimize the ISP for computer vision purposes. The blocks in VisionISP are simple, content-aware, and trainable with existing machine learning methods. VisionISP significantly reduces data transmission and power consumption requirements by reducing image bit depth and resolution while mitigating the loss of relevant information. In this paper, we show that VisionISP boosts the performance of subsequent computer vision algorithms across multiple tasks, including object detection, face recognition, and stereo disparity estimation. The results demonstrate the benefits of VisionISP for a variety of computer vision applications, CNN model sizes, and benchmark datasets.
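To see why reducing bit depth and resolution lowers transmission requirements, consider the raw payload of a frame. The sketch below assumes a 1920x1080, 12-bit single-channel sensor output reduced to 960x540 at 8 bits, which cuts the payload by a factor of 6. The fixed power-law `requantize` curve and the 2x2 averaging in `downscale_2x` are simple, non-trainable stand-ins chosen for illustration. They are not VisionISP's learned, content-aware blocks.

```python
from typing import List

Image = List[List[float]]


def bits_per_frame(width: int, height: int, bit_depth: int, channels: int = 1) -> int:
    """Raw payload of one frame in bits (no compression, no metadata)."""
    return width * height * bit_depth * channels


def downscale_2x(img: Image) -> Image:
    """Average non-overlapping 2x2 blocks; assumes even height and width."""
    return [
        [(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
         for x in range(0, len(img[0]), 2)]
        for y in range(0, len(img), 2)
    ]


def requantize(img: Image, in_bits: int = 12, out_bits: int = 8,
               gamma: float = 0.5) -> List[List[int]]:
    """Map in_bits codes to out_bits codes through a fixed power-law curve.

    The fixed gamma is an assumption standing in for a learned, content-aware mapping;
    gamma < 1 allocates more output codes to dark values.
    """
    max_in = (1 << in_bits) - 1
    max_out = (1 << out_bits) - 1
    return [[round((v / max_in) ** gamma * max_out) for v in row] for row in img]


# Payload ratio for the assumed sensor and output formats
ratio = bits_per_frame(1920, 1080, 12) / bits_per_frame(960, 540, 8)
```

Applying `requantize(downscale_2x(raw))` reproduces the order of operations assumed here (resolution first, then bit depth); a trainable ISP would instead learn both mappings so that task-relevant detail survives the reduction.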

Annotation and analysis of sports videos is a challenging task that, once accomplished, could benefit coaches, players, and spectators. In particular, American football could benefit from such a system for statistics and game strategy analysis. Manually analyzing recorded American football game videos is tedious and inefficient. In this paper, as a first step toward such a system, we focus on locating and labeling individual football players in a single overhead image of a play taken immediately before the play begins. A pre-trained deep learning network detects and locates the players in the image, and a ResNet then labels each player by player position or formation. Our player detection and labeling algorithms exceed 90% accuracy, especially for the skill positions on offense (Quarterback, Running Back, and Wide Receiver) and defense (Cornerback and Safety). These preliminary results on player detection, localization, and labeling demonstrate the feasibility of building a complete American football strategy analysis system using artificial intelligence.
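The two-stage pipeline described above (detect players, then classify each detection by position) can be sketched as follows. The `detect` and `classify` callables are hypothetical placeholders for the pre-trained detector and the ResNet classifier, the `POSITIONS` label set is an assumption built from the positions named above plus a catch-all class, and the image is a plain grayscale grid rather than a real tensor.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels
Image = List[List[int]]          # grayscale, H x W

# Hypothetical label set; a real model defines its own class order
POSITIONS = ["QB", "RB", "WR", "CB", "S", "OTHER"]


def crop(image: Image, box: Box, pad: int = 0) -> Image:
    """Cut a box out of the image, enlarged by `pad` pixels and clipped to the borders."""
    h, w = len(image), len(image[0])
    x1, y1, x2, y2 = box
    x1, y1 = max(0, x1 - pad), max(0, y1 - pad)
    x2, y2 = min(w, x2 + pad), min(h, y2 + pad)
    return [row[x1:x2] for row in image[y1:y2]]


def label_players(image: Image,
                  detect: Callable[[Image], Sequence[Box]],
                  classify: Callable[[Image], Sequence[float]],
                  pad: int = 2) -> List[Tuple[Box, str]]:
    """Stage 1: detect player boxes. Stage 2: classify each padded crop by position."""
    results = []
    for box in detect(image):
        # The padding keeps some surrounding field context in each crop (assumption)
        scores = classify(crop(image, box, pad))
        best = max(range(len(scores)), key=scores.__getitem__)
        results.append((box, POSITIONS[best]))
    return results
```

Keeping detection and classification separate, as in the approach described above, lets each stage be trained or swapped independently; the classifier only has to distinguish positions, not find players.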

The performance of autonomous agents in both commercial and consumer applications increases with their situational awareness. Tasks such as obstacle avoidance, agent-to-agent interaction, and path planning depend directly on an agent's ability to convert sensor readings into scene understanding. Central to this is the ability to detect and recognize objects. Many object detection methods operate on a single modality, such as vision or LiDAR. Camera-based object detection models benefit from abundant, feature-rich information for classifying different types of objects. LiDAR-based object detection models use sparse point clouds, in which each point gives the accurate 3D position of an object surface. Camera-based methods lack accurate object-to-lens distance measurements, while LiDAR-based methods lack dense, feature-rich detail. Using information from both camera and LiDAR sensors makes more robust object detection and identification possible. In this work, we introduce a deep learning framework that fuses these modalities to produce a robust, real-time 3D bounding box object detection network. We present qualitative and quantitative analyses of the proposed fusion model on the popular KITTI dataset.
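A common first step in camera-LiDAR fusion is projecting LiDAR points into the image plane so that each pixel can be paired with a depth value. The sketch below assumes a pinhole camera with intrinsic matrix `K` and a known rigid LiDAR-to-camera transform `(R, t)` in which the camera's z-axis points forward; it rasterizes the points into a sparse depth channel that could be stacked with the RGB channels. This illustrates a generic projection step and is not the fusion architecture proposed in this work.

```python
from typing import List, Optional, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Vec3 = Sequence[float]


def project_point(p: Vec3, R: Matrix3, t: Vec3,
                  K: Matrix3) -> Optional[Tuple[float, float, float]]:
    """Project a LiDAR point to (u, v, depth); None if it lies behind the camera."""
    # Rigid transform from the LiDAR frame to the camera frame: x_c = R p + t
    xc = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
    depth = xc[2]
    if depth <= 0:
        return None
    # Pinhole projection with intrinsics K, followed by perspective division
    uvw = [sum(K[i][j] * xc[j] for j in range(3)) for i in range(3)]
    return uvw[0] / uvw[2], uvw[1] / uvw[2], depth


def sparse_depth_map(points: Sequence[Vec3], R: Matrix3, t: Vec3, K: Matrix3,
                     width: int, height: int) -> List[List[float]]:
    """Rasterize projected points into an H x W depth channel (0.0 means no return)."""
    depth_map = [[0.0] * width for _ in range(height)]
    for p in points:
        proj = project_point(p, R, t, K)
        if proj is None:
            continue
        u, v, d = proj
        col, row = int(round(u)), int(round(v))
        if 0 <= row < height and 0 <= col < width:
            # Keep the nearest surface when several points land on the same pixel
            if depth_map[row][col] == 0.0 or d < depth_map[row][col]:
                depth_map[row][col] = d
    return depth_map
```

On KITTI, `R`, `t`, and `K` would come from the dataset's per-frame calibration files rather than being hard-coded.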

Image quality (IQ) metrics are used to assess image quality under a specified set of capture and display conditions. A large body of work on IQ metrics has considered image quality from an aesthetic point of view: visual perception and appreciation of the final result. Metrics have also been developed for “informational” applications such as medical imaging, aerospace and military systems, scientific imaging, and industrial imaging. In these applications, the criteria for image quality are based on information content and on the ability to detect, identify, and recognize objects in a captured image. The development of automotive imaging systems requires IQ metrics suited to automotive tasks. Many of the metrics developed for informational imaging are also potentially useful in automotive imaging, since many of the tasks (for example, object detection and identification) are similar. In this paper, we review the signal-to-noise ratio of the ideal observer and present it as a useful metric for determining whether an object can be detected with confidence, given the characteristics of an automotive imaging system. We also show how this metric can be used to optimize system parameters for a defined task.
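A simple special case shows how the ideal observer's signal-to-noise ratio answers the question "can this object be detected with confidence?". Assuming a signal-known-exactly task and additive white, stationary Gaussian noise with per-pixel standard deviation σ, the ideal observer is a matched filter and its detectability is d' = ‖Δs‖ / σ, where Δs is the mean image with the object minus the mean image without it. The general treatment also accounts for system blur and correlated noise, which this sketch omits. The helper `min_contrast_for_detection` and the required `d_required` value are illustrative assumptions showing how the metric can drive a design parameter.

```python
import math
from typing import Sequence


def ideal_observer_snr(delta_signal: Sequence[Sequence[float]], sigma: float) -> float:
    """d' for a signal-known-exactly task in additive white Gaussian noise.

    delta_signal: mean image with the object minus mean image without it.
    sigma: per-pixel noise standard deviation (assumed white and stationary).
    """
    energy = sum(v * v for row in delta_signal for v in row)
    return math.sqrt(energy) / sigma


def min_contrast_for_detection(template: Sequence[Sequence[float]], sigma: float,
                               d_required: float) -> float:
    """Smallest contrast scaling of a unit-contrast template that reaches d_required.

    d' is linear in contrast, so contrast_min = d_required * sigma / ||template||.
    """
    norm = math.sqrt(sum(v * v for row in template for v in row))
    return d_required * sigma / norm
```

For a 2x2 object of unit contrast in noise with σ = 0.5, d' = 2 / 0.5 = 4; halving the noise (for example, through a longer exposure in a hypothetical design trade-off) doubles d', which is the kind of parameter comparison the metric supports.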