In this paper, we explore a space-time geometric view of signal representation in machine learning models. We ask whether the cause of signal representation errors can be identified: training data inadequacies, model insufficiencies, or both. Loosely expressed, this problem is similar in style to blind deconvolution. Studies of space-time geometries might partially solve it by considering the curvature produced by mass in (Anti-)de Sitter space. We study the effectiveness of our approach on the MNIST dataset.

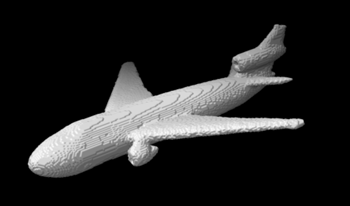

In this paper, we introduce silhouette tomography, a novel formulation of X-ray computed tomography that relies only on the geometry of the imaging system. We formulate silhouette tomography mathematically and provide a simple method for obtaining a particular solution to the problem, assuming that any solution exists. We then propose a supervised reconstruction approach that uses a deep neural network to solve the silhouette tomography problem. We present experimental results on a synthetic dataset that demonstrate the effectiveness of the proposed method.

The design and evaluation of complex systems can benefit from a software simulation, sometimes called a digital twin. The simulation can be used to characterize system performance or to test performance under conditions that are difficult to measure (e.g., nighttime for automotive perception systems). We describe the image system simulation software tools that we use to evaluate the performance of image systems for object (automobile) detection. We describe experiments with 13 different cameras with a variety of optics and pixel sizes. To measure the impact of camera spatial resolution, we designed a collection of driving scenes with cars at many different distances. We quantified system performance by measuring average precision, and we report a trend relating system resolution and object detection performance. We also quantified the large performance degradation under nighttime conditions, compared to daytime, for all cameras and a COCO pre-trained network.
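Average precision, the metric named above, can be illustrated with a minimal sketch. The abstract does not state which variant was used, so this assumes the common all-point interpolation of the precision-recall curve; the function name and its inputs (per-detection confidence scores and true-positive flags already matched to ground truth) are hypothetical.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the precision-recall curve (all-point interpolation).

    Assumption: each detection was already matched to ground truth
    (e.g. by an IoU threshold), giving one boolean flag per detection.
    """
    if num_ground_truth == 0 or not scores:
        return 0.0
    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = 0
    fp = 0
    precisions = []
    recalls = []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Replace each precision by the maximum precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum the rectangle areas wherever recall increases.
    ap = 0.0
    prev_recall = 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Three detections, two ground-truth cars; the second detection is a false alarm.
ap = average_precision([0.9, 0.8, 0.7], [True, False, True], 2)
print(round(ap, 4))
```

Comparing this value across cameras, or between daytime and nighttime scenes, is one way to express the kind of trend the abstract reports.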

High-resolution magnetic resonance imaging (MRI) provides detailed anatomical information critical for clinical diagnosis. However, high-resolution MRI typically comes at the cost of long scan time, small spatial coverage, and low signal-to-noise ratio. Convolutional neural networks (CNNs) can be applied to the super-resolution task to recover high-resolution generic images from low-resolution inputs. Additionally, recent studies have shown the potential of generative adversarial networks (GANs) to generate high-quality super-resolution MRIs using learned image priors. However, existing approaches require paired MRI images as training data, which are difficult to obtain from existing datasets when the alignment between high- and low-resolution images has to be done manually. This paper implements two GAN-based models for super-resolution: enhanced super-resolution GAN (ESRGAN) and CycleGAN. Unlike the generic model, the CycleGAN architecture is modified to perform super-resolution on unpaired MRI data, and ESRGAN is implemented as a reference for comparing the performance of GAN-based methods. The GAN-based models generate high-resolution images with rich textures when compared with the ground truth. Experiments are performed on both 3T and 7T MRI images, recovering different scales of resolution.

Image denoising is a classical preprocessing stage used to enhance images. However, it is well known that there are many practical cases where image denoising methods produce images with inadequate visual quality, which makes applying them useless. It is therefore desirable to detect such cases in advance and to decide how expedient image denoising (filtering) is. This paper analyzes the problem for the well-known BM3D denoiser. We propose an algorithm for deciding on the expedience of denoising images corrupted by additive white Gaussian noise (AWGN). We also propose an algorithm that uses a trained artificial neural network to predict subjective visual quality scores for denoised images. This prediction is shown to be fast and accurate.

Similarity search in images has become a typical operation in many applications. The presence of noise in images greatly affects the correct detection of similar image blocks, which reduces the efficiency of image processing methods such as non-local denoising. In this paper, we study the noise immunity of various distance measures (similarity metrics). Taking into account the wide variety of information content in real-life images and variations in noise type and intensity, we propose a set of test data and obtain preliminary results for several typical cases of image and noise properties. Recommendations for metric and threshold selection are given. A fast implementation of the proposed benchmark is realized using CUDA technology.
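The idea of testing a distance measure's noise immunity can be sketched as follows. This is not the proposed benchmark: the two metrics (sum of squared differences and sum of absolute differences), the Gaussian noise model, the toy blocks, and all function names are assumptions chosen for illustration. The sketch counts how often a block that is truly similar to the reference still ranks closer than a dissimilar block once noise is added.

```python
import random


def ssd(a, b):
    """Sum of squared differences between two equally sized blocks."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def sad(a, b):
    """Sum of absolute differences between two equally sized blocks."""
    return sum(abs(x - y) for x, y in zip(a, b))


def add_awgn(block, sigma, rng):
    """Return a copy of the block with zero-mean Gaussian noise added."""
    if sigma == 0:
        return list(block)
    return [x + rng.gauss(0.0, sigma) for x in block]


def match_rate(distance, reference, similar, different, sigma, trials=200, seed=0):
    """Fraction of noisy trials in which `similar` stays closer than `different`."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        ref = add_awgn(reference, sigma, rng)
        sim = add_awgn(similar, sigma, rng)
        dif = add_awgn(different, sigma, rng)
        if distance(ref, sim) < distance(ref, dif):
            hits += 1
    return hits / trials


# Flattened toy blocks (hypothetical pixel values).
reference = [10, 12, 11, 13, 50, 52, 51, 53]
similar = [11, 12, 10, 13, 51, 52, 50, 53]
different = [50, 52, 51, 53, 10, 12, 11, 13]

for sigma in (0.0, 5.0, 40.0):
    print(sigma,
          match_rate(ssd, reference, similar, different, sigma),
          match_rate(sad, reference, similar, different, sigma))
```

Sweeping the noise level and the block content in this way gives one match-rate curve per metric, and such curves are one possible basis for metric and threshold recommendations.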

Subjective video quality assessment generally relies on semantically labeled evaluation scales (e.g., Excellent, Good, Fair, Poor, and Bad on a single-stimulus, 5-level grading scale). Although suspicions that these labels bias the quality evaluation are regularly raised, to the best of our knowledge very few state-of-the-art studies attempt an objective assessment of their impact. Our study presents a neural network solution to this problem. We designed a 5-class classifier with 2 hidden layers and a softmax output layer, trained with the Adam optimizer and a sparse categorical cross-entropy loss. The experimental results are obtained from a database of 440 observers scoring about 7 hours of video content of 4 types (high-quality stereoscopic video, low-quality stereoscopic video, high-quality 2D video, and low-quality 2D video). The results are discussed and compared with the reference given by a probability-based estimation method. They show good overall agreement between the two types of methods, while pointing to some application-specific differences that are discussed and explained.
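The classifier described above can be sketched as a plain forward pass with its loss. The abstract gives only the number of classes, the number of hidden layers, the softmax output, the optimizer, and the loss. The input size, hidden widths, ReLU activations, and weight initialization below are assumptions, and the Adam update step is omitted for brevity.

```python
import math
import random


def dense(x, weights, biases):
    """Fully connected layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(logits):
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_categorical_cross_entropy(probs, label):
    """Loss for an integer class label (no one-hot encoding needed)."""
    return -math.log(max(probs[label], 1e-12))


def init_layer(n_in, n_out, rng):
    """Small uniform random weights, zero biases (assumed initialization)."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x, layers):
    """Two ReLU hidden layers followed by a 5-way softmax output."""
    h = x
    for weights, biases in layers[:-1]:
        h = relu(dense(h, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(h, weights, biases))


rng = random.Random(0)
# Assumed sizes: 8 input features, two hidden layers of 16 units, 5 classes.
layers = [init_layer(8, 16, rng), init_layer(16, 16, rng), init_layer(16, 5, rng)]
probs = forward([0.5] * 8, layers)
loss = sparse_categorical_cross_entropy(probs, 2)  # label 2, e.g. "Fair"
print(len(probs), loss)
```

The sparse form of the loss takes the grade as an integer index (0 to 4), which suits a 5-level scale where each score is a single label.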

An RGB-IR sensor combines the capabilities of an RGB sensor and an IR sensor in a single sensor. However, the additional IR pixels in the RGB-IR sensor reduce the effective number of pixels allocated to the visible region, which introduces aliasing artifacts during demosaicing. The presence of IR content in the R, G, and B channels also poses new challenges for accurate color reproduction. Sharpness and color reproduction are very important image quality factors for visual aesthetics as well as for computer vision algorithms. The demosaicing and color correction modules are integral parts of any color image processing pipeline and are responsible for sharpness and color reproduction, respectively. The image processing pipeline has not been fully explored for RGB-IR sensors. We propose a neural network-based approach to demosaicing and color correction for RGB-IR patterned image sensors. Our experimental results show that the learning-based approach performs better than existing demosaicing and color correction methods.

Despite the advances in single-image super resolution using deep convolutional networks, the main problem remains unsolved: recovering fine texture details. Recent works in super resolution aim at modifying the training of neural networks to enable the recovery of these details. Among the different methods proposed, wavelet decompositions are used as inputs to super resolution networks to provide structural information about the image. Residual connections may also link different network layers to help propagate high frequencies. We review and compare the usage of wavelets and residuals in training super resolution neural networks. We show that residual connections are key to improving the performance of deep super resolution networks. We also show that there is no statistically significant performance difference between spatial and wavelet inputs. Finally, we propose a new super resolution architecture that reduces memory costs while still using residual connections and performing comparably to the current state of the art.
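As an illustration of what a wavelet input looks like, the sketch below computes a single-level 2D decomposition of an image into four half-resolution subbands and inverts it. The abstract does not say which wavelet is used, so the orthonormal Haar wavelet is an assumption, as are the function and subband names. Image height and width are assumed to be even.

```python
def haar2d(image):
    """Single-level 2D Haar transform of an image with even height and width.

    Returns four half-resolution subbands:
    approx (local average), horiz (difference across columns),
    vert (difference across rows), diag (diagonal difference).
    """
    approx, horiz, vert, diag = [], [], [], []
    for r in range(0, len(image), 2):
        row_a, row_h, row_v, row_d = [], [], [], []
        for c in range(0, len(image[0]), 2):
            a, b = image[r][c], image[r][c + 1]
            e, f = image[r + 1][c], image[r + 1][c + 1]
            row_a.append((a + b + e + f) / 2)
            row_h.append((a - b + e - f) / 2)
            row_v.append((a + b - e - f) / 2)
            row_d.append((a - b - e + f) / 2)
        approx.append(row_a)
        horiz.append(row_h)
        vert.append(row_v)
        diag.append(row_d)
    return approx, horiz, vert, diag


def inverse_haar2d(approx, horiz, vert, diag):
    """Rebuild the full-resolution image from the four subbands."""
    image = []
    for i in range(len(approx)):
        top, bottom = [], []
        for j in range(len(approx[0])):
            s, h, v, d = approx[i][j], horiz[i][j], vert[i][j], diag[i][j]
            top.extend([(s + h + v + d) / 2, (s - h + v - d) / 2])
            bottom.extend([(s + h - v - d) / 2, (s - h - v + d) / 2])
        image.append(top)
        image.append(bottom)
    return image


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
subbands = haar2d(image)
restored = inverse_haar2d(*subbands)
print(subbands[0])
print(restored == [[float(x) for x in row] for row in image])
```

The four subbands can be stacked as input channels in place of the spatial image. Because the transform is invertible, both inputs carry the same information, which is consistent with the reported absence of a significant performance difference between spatial and wavelet inputs.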