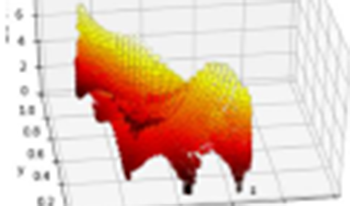

In this paper we study to what extent neural networks can be used to accurately characterize LCD displays. Using a programmable colorimeter, we took extensive measurements of a DELL Ultrasharp UP2516D to define training and testing data sets, which are used in turn to train and validate two neural networks: one takes tristimulus values, XYZ, as inputs and the other takes color coordinates, xyY. Both networks have the same layer structure, which was determined experimentally. The errors of both models, in terms of the ΔE00 color difference, are analysed from a colorimetric point of view and interpreted in order to understand how both networks have learned and how their performance compares with that of classical models. As we will see, the comparison favors the proposed models on average, but they are not better in all cases or in all regions of the color space.
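As a brief illustration of the two input representations mentioned in this abstract, the standard CIE relation between tristimulus values XYZ and chromaticity-plus-luminance coordinates xyY can be sketched as follows. The function names and the convention of returning zeros for a black input are assumptions made for this sketch; they are not taken from the paper.

```python
def xyz_to_xyY(X, Y, Z):
    """Convert CIE XYZ tristimulus values to xyY coordinates."""
    total = X + Y + Z
    if total == 0:
        # Chromaticity is undefined for black; returning zeros is an
        # assumed convention for this sketch, not the paper's choice.
        return (0.0, 0.0, 0.0)
    return (X / total, Y / total, Y)


def xyY_to_xyz(x, y, Y):
    """Convert xyY coordinates back to CIE XYZ tristimulus values."""
    if y == 0:
        # Same assumed convention as above for the degenerate case.
        return (0.0, 0.0, 0.0)
    X = x * Y / y
    Z = (1.0 - x - y) * Y / y
    return (X, Y, Z)
```

Both representations carry the same information, so any difference in network performance would come from how the inputs are distributed rather than from what they encode.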

Image quality assessment has been a very active research area in the field of image processing, and numerous methods have been proposed. However, most existing methods focus on digital images that only or mainly contain pictures or photos taken by digital cameras. Traditional approaches evaluate an input image as a whole and try to estimate a quality score for it, in order to give viewers an idea of how “good” the image looks. In this paper, we mainly focus on the quality evaluation of symbolic content such as text. The quality of this kind of information can be judged by whether it is readable by a human or recognizable by a decoder such as an OCR engine. We mainly study the quality of scanned documents in terms of the detection accuracy of their OCR-transcribed versions. For this purpose, we propose a novel CNN-based model to predict the quality level of scanned documents or of regions within them. Experimental results on our testing dataset demonstrate the effectiveness and efficiency of our method both qualitatively and quantitatively.

Nitrate sensors are commonly used to monitor nitrate levels in agricultural soil. In a roll-to-roll system for manufacturing thin-film nitrate sensors, variation in the characteristics of the ion-selective membrane on screen-printed electrodes is inevitable and affects sensor performance. It is essential to monitor sensor performance in real time to guarantee product quality. We applied image processing techniques and offline learning to realize the performance assessment. However, large variation in the sensor data under dynamic manufacturing factors degrades the accuracy of the prediction system. In this work, our key contribution is a system that predicts sensor performance in on-line scenarios and lets the neural networks adapt efficiently to new data. We adapt residual learning and Hedge Back-Propagation to the on-line setting, making the prediction network more adaptive to input data that arrive sequentially. Our results show that our method achieves highly accurate predictions with low time consumption.
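For readers unfamiliar with the Hedge algorithm that underlies Hedge Back-Propagation, the core multiplicative weight update can be sketched as below. This is a simplified illustration, not the authors' implementation: the function name, the discount value `beta`, and the omission of any smoothing floor on the weights are assumptions of this sketch.

```python
def hedge_update(weights, losses, beta=0.99):
    """One Hedge step: discount each weight by beta ** loss, then renormalize.

    weights: current non-negative weights, one per predictor (e.g. per layer output)
    losses:  loss incurred by each predictor on the newest sample
    beta:    discount factor in (0, 1); 0.99 is an assumed illustrative value
    """
    if len(weights) != len(losses):
        raise ValueError("weights and losses must have the same length")
    # Predictors with larger loss are discounted more strongly.
    updated = [w * (beta ** loss) for w, loss in zip(weights, losses)]
    total = sum(updated)
    # Renormalize so the weights again sum to one.
    return [u / total for u in updated]
```

Applied after every incoming sample, this update shifts weight toward the predictors that have recently performed best, which is what lets the combined prediction track sequentially arriving data.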

Detection and classification of vehicles is a paramount task in surveillance frameworks and in traffic management and control. The type of transportation infrastructure, road conditions, traffic trends and illumination conditions are some of the key factors that affect these essential tasks. This paper explores the performance of existing detection and classification techniques on local, daytime, complex urban traffic videos with increased free-flowing vehicle volume. Three traffic datasets of varying complexity are used for analysis. Scene complexity is governed by factors such as vehicle speed, type and size of dynamic objects, direction of motion of vehicles, number of lanes, occlusion, length and camera viewing angle. The datasets include large classification volumes of up to 1516 vehicles in NIPA (customized local dataset) and 1009 vehicles in TOLL PLAZA (customized local dataset), along with a publicly available dataset of 51 vehicles, HIGHWAY II. Existing detection algorithms such as blob analysis, Kalman filter tracking and detection lines were applied to all three datasets, and experimental results are presented. Results show that the algorithms perform well in low-density, low-speed scenes with less shadow, better image resolution, an appropriate camera viewing angle, better lighting conditions and occlusion-free zones. However, as soon as the complexity of the scene increases, several detection errors appear. Furthermore, obtaining robust and invariant features of local vehicle designs proved challenging. A custom GUI was built to analyze the results of the algorithms. Detection is further extended to the classification of 231 vehicles from the NIPA dataset, which is a highly complex urban traffic scenario. Vehicles are classified as Small Vehicle (SV), Large Vehicle (LV) and Motorcycle (M) using an area-threshold-based classifier and a classifier that combines dense Scale Invariant Feature Transform (SIFT) features with an Artificial Neural Network (ANN). A detailed comparison of the two classifiers shows that the SIFT and ANN classifier performs better for classification tasks in highly complex urban scenarios, and also indicates that practical systems still require a robust classification scheme to achieve more than 80% accuracy.
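To make the idea of an area-threshold-based classifier concrete, a minimal sketch is given below. The threshold values and the function name are hypothetical placeholders chosen for illustration only; the abstract does not state the thresholds actually used, and in practice they would depend on camera placement and image resolution.

```python
def classify_by_area(area_px, motorcycle_max=1500, small_max=8000):
    """Assign a vehicle class from the pixel area of a detected blob.

    motorcycle_max and small_max are hypothetical thresholds (in pixels),
    not values reported by the authors.
    """
    if area_px < 0:
        raise ValueError("area must be non-negative")
    if area_px <= motorcycle_max:
        return "M"   # Motorcycle
    if area_px <= small_max:
        return "SV"  # Small Vehicle
    return "LV"      # Large Vehicle
```

A classifier of this kind relies on a single geometric cue, which is consistent with the abstract's observation that it is outperformed by a feature-based SIFT and ANN classifier in complex scenes with occlusion and varied viewing angles.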