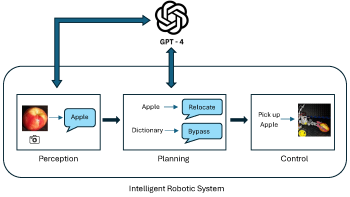

Robotics has traditionally relied on multiple sensors and extensive programming to interpret and navigate environments, yet such systems often struggle in dynamic and unpredictable settings. In this work, we integrate large language models (LLMs) such as GPT-4 into a robotic navigation system to improve decision-making and adaptability in complex environments. Unlike many existing robotics frameworks, our approach uses the natural language and image processing capabilities of LLMs to navigate robustly with only a single camera and an ultrasonic sensor, removing the need for multiple specialized sensors and extensive pre-programmed responses. By bridging perception and planning, the framework offers a new approach to robotic navigation and aims to produce more intelligent and flexible robots that can handle a broader range of tasks and environments. Experimental evaluations show promising improvements in the robot’s effectiveness and efficiency in object recognition, motion planning, obstacle manipulation, and environmental adaptability, suggesting potential for more advanced applications. Future work will focus on enabling LLMs to autonomously generate motion profiles and executable code from verbal instructions, so that tasks can be carried out without human intervention. This would let the robot perform specific actions independently, further improving its autonomy and operational efficiency.
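One way to picture a single perceive-decide step with one camera and one ultrasonic sensor is the Python sketch below. The abstract does not describe its interface, so everything here is an assumption: `query_llm(prompt, image)` is a hypothetical stand-in for whatever multimodal LLM client (such as GPT-4) is used, and `ACTIONS`, `build_prompt`, `parse_action`, and `navigation_step` are illustrative names. The sketch only shows how the ultrasonic range can be folded into the prompt alongside the camera frame and how free-form model output can be constrained to a fixed action set.

```python
from typing import Callable

# Hypothetical discrete action set; the source does not specify one.
ACTIONS = ("forward", "turn_left", "turn_right", "stop")


def build_prompt(distance_cm: float) -> str:
    """Combine the ultrasonic range reading with instructions about the camera frame."""
    return (
        "You control a mobile robot. The attached image is its camera view. "
        f"The ultrasonic sensor reports the nearest obstacle ahead at {distance_cm:.0f} cm. "
        f"Reply with exactly one of: {', '.join(ACTIONS)}."
    )


def parse_action(reply: str) -> str:
    """Map free-form model output onto ACTIONS; fall back to 'stop' if unrecognized."""
    words = reply.strip().lower().replace(",", " ").replace(".", " ").split()
    return words[0] if words and words[0] in ACTIONS else "stop"


def navigation_step(query_llm: Callable[[str, bytes], str],
                    frame: bytes, distance_cm: float) -> str:
    """One perceive-decide step; query_llm(prompt, image) is a hypothetical client."""
    return parse_action(query_llm(build_prompt(distance_cm), frame))


if __name__ == "__main__":
    # A fake model stands in for a real LLM call.
    fake_llm = lambda prompt, image: "Turn_left. The path ahead is blocked."
    print(navigation_step(fake_llm, b"", 35.0))  # turn_left
```

Falling back to `stop` on any reply outside the action set is a conservative choice for this sketch: a malformed or hedging answer from the model halts the robot instead of producing an unintended motion.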

Obstacle avoidance is challenging for autonomous robotic systems. In this work, we study obstacle avoidance for legged hexapods in the context of climbing over randomly placed wooden joists. We formulate the task as a 3D joist detection problem and propose a detect-plan-act pipeline in which a SLAM algorithm generates a point cloud and a grid map that exposes tall obstacles such as joists. A line detector applied to the grid map extracts the joist's parameters (height, width, orientation, and distance); based on this information, the hexapod plans a sequence of leg movements to either climb over the joist or move sideways. We show that our perception and path planning modules work well on real-world joists of different heights and orientations.
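The grid-map-to-decision step above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the grid map is assumed to be a 2D NumPy array of cell heights in metres with the robot at the world origin, the joist is assumed to be the only tall structure in view, and the paper's line detector is replaced by a principal component analysis (PCA) line fit. `JoistEstimate`, `detect_joist`, `plan_action`, `min_height`, and `max_climb_height` (with its 0.10 m default) are hypothetical names and values chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

import numpy as np


@dataclass
class JoistEstimate:
    height: float       # tallest cell in the obstacle band (m)
    width: float        # extent across the joist axis (m)
    orientation: float  # axis angle w.r.t. robot x (forward) axis, wrapped to [-pi/2, pi/2)
    distance: float     # perpendicular distance from robot origin to joist axis (m)


def detect_joist(grid: np.ndarray, resolution: float,
                 origin: Tuple[float, float] = (0.0, 0.0),
                 min_height: float = 0.03) -> Optional[JoistEstimate]:
    """Fit one line to all grid cells taller than min_height (hypothetical helper).

    grid[row, col] is a cell height in metres; the cell centre maps to world
    (origin[0] + col * resolution, origin[1] + row * resolution).
    """
    rows, cols = np.nonzero(grid > min_height)
    if rows.size < 2:
        return None  # nothing tall enough to count as a joist
    pts = np.column_stack((origin[0] + cols * resolution,
                           origin[1] + rows * resolution))
    centroid = pts.mean(axis=0)
    # The first right-singular vector of the centred points is the direction
    # of greatest spread, i.e. the joist's long axis (PCA line fit).
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    axis = vt[0]
    normal = np.array([-axis[1], axis[0]])
    theta = math.atan2(axis[1], axis[0])
    orientation = (theta + math.pi / 2) % math.pi - math.pi / 2
    across = pts @ normal
    # Centre-to-centre span plus one cell approximates the edge-to-edge width.
    width = float(across.max() - across.min()) + resolution
    distance = abs(float(centroid @ normal))
    height = float(grid[rows, cols].max())
    return JoistEstimate(height, width, orientation, distance)


def plan_action(joist: Optional[JoistEstimate], max_climb_height: float = 0.10) -> str:
    """Hypothetical rule: climb low joists, side-step tall ones."""
    if joist is None:
        return "walk_forward"
    if joist.height <= max_climb_height:
        return "climb_over"
    return "move_sideways"


if __name__ == "__main__":
    grid = np.zeros((20, 20))
    grid[:, 10:12] = 0.08  # an 8 cm joist crossing the path about 0.5 m ahead
    joist = detect_joist(grid, resolution=0.05, origin=(0.0, -0.5))
    print(plan_action(joist))  # climb_over
```

PCA fits only a single dominant line, so a Hough-transform line detector would be the more natural choice when several joists can appear in the same grid map.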