Extended Reality (XR) technologies are revolutionising our interactions with digital content, transforming how we perceive reality and enhancing our problem-solving capabilities. However, many XR applications remain technology-driven, often disregarding the broader context of their use and failing to address fundamental human needs. In this paper, we present a teaching-led design project that asks postgraduate design students to explore the future of XR through low-fidelity, screen-free prototypes focused on human needs observed at six specific locations in central London, UK. By using the city and the built environment as lenses for exploring everyday scenarios, the project encourages design provocations rooted in real-world challenges. Through this exploration, we aim to inspire new perspectives on the future states of XR, advocating for human-centred, inclusive, and accessible solutions. By bridging the gap between technological innovation and lived experience, this project outlines a pathway toward XR technologies that prioritise societal benefit and address real human needs.

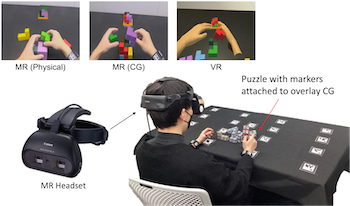

This study evaluates user experiences in Virtual Reality (VR) and Mixed Reality (MR) systems during task-based interactions. Three experimental conditions were examined: MR (Physical), MR (CG), and VR. Subjective and objective measures were used to assess user performance and experience. The results showed significant differences among conditions, with MR (Physical) consistently outperforming MR (CG) and VR on multiple measures. Eye-tracking data revealed that in the MR (Physical) condition, users spent less time observing physical objects and relied primarily on virtual objects for task guidance. Conversely, in conditions with a higher proportion of CG content, users spent more time observing objects but reported increased discomfort due to delays. These findings suggest that the ratio of virtual to physical objects significantly affects user experience and performance. This research provides insights into improving user experiences in VR and MR systems, with potential applications across various domains.

The development of new imaging systems introduces viewing conditions that did not exist in the past. Mixed reality systems, which capture the real environment and render the captured image on a display with virtual objects superimposed, demand a more immersive and realistic experience than virtual and augmented reality systems, especially immediately after users put on the headset. The rendering shown on the display therefore needs to be carefully adjusted to match the appearance of the real environment. In this study, we focus specifically on reproducing the overall color tone of the real environment under different ambient illumination colors by shifting the white point of the display. Human observers viewed a real environment under ambient illumination conditions differing in correlated color temperature (CCT) and chromaticity, and evaluated renderings of the captured scene with 44 different white points. The results suggest that the display white point should adapt to the ambient illumination color, especially when the ambient illumination has a CCT below 4000 K, to provide a good user experience.
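The abstract does not say how ambient CCT was obtained. One common way to check whether an illuminant falls below the 4000 K threshold is to estimate CCT from its CIE 1931 (x, y) chromaticity using McCamy's cubic approximation. The sketch below is a substitute technique chosen for illustration and is not the authors' method. The helper `needs_adaptive_white_point` and its default threshold are hypothetical and simply mirror the reported finding.

```python
# Illustrative sketch only: McCamy's (1992) CCT approximation, not the study's pipeline.

ADAPTATION_THRESHOLD_K = 4000.0  # threshold taken from the abstract's finding


def mccamy_cct(x: float, y: float) -> float:
    """Approximate correlated color temperature (kelvin) from CIE 1931 (x, y).

    McCamy's cubic fit is reasonably accurate for roughly 2000 K to 12500 K
    near the Planckian locus; it is not an exact CCT computation.
    """
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33


def needs_adaptive_white_point(x: float, y: float,
                               threshold_k: float = ADAPTATION_THRESHOLD_K) -> bool:
    """Hypothetical helper: flag ambient light whose estimated CCT is below the threshold."""
    return mccamy_cct(x, y) < threshold_k


if __name__ == "__main__":
    # CIE standard illuminants: D65 (about 6504 K) and A (about 2856 K).
    for name, (x, y) in {"D65": (0.3127, 0.3290), "A": (0.44757, 0.40745)}.items():
        print(name, round(mccamy_cct(x, y)), needs_adaptive_white_point(x, y))
```

With these inputs the approximation lands within a few kelvin of the nominal CCTs. Only illuminant A, a warm incandescent-like source, is flagged as needing an adapted white point.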

Mixed reality systems are often reported to cause user discomfort. It is therefore important to estimate when discomfort occurs and to consider ways to reduce or avoid it. The purpose of this study is to estimate user discomfort during use of an MR system. Psychological and physiological indicators were measured while participants performed a task with the MR system, and a deep learning model was constructed to estimate the psychological indicators from the physiological ones. In 4-fold cross-validation, the average F1 scores for discomfort scores 1, 2, and 3 were 0.602, 0.555, and 0.290, respectively. This result suggests that mild discomfort can be detected with some accuracy.
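The reported figures appear to be per-class F1 scores averaged over four cross-validation folds. That reading is an assumption, since the abstract does not define the averaging. The minimal sketch below shows how such numbers can be computed from fold-level labels and predictions. It does not reproduce the authors' deep learning model. The function names are hypothetical, and the toy labels in the usage line are made up for illustration.

```python
from typing import Dict, List, Sequence, Tuple


def k_fold_indices(n_samples: int, k: int = 4) -> List[List[int]]:
    """Split indices 0..n_samples-1 into k contiguous, near-equal folds (no shuffling)."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def per_class_f1(y_true: Sequence[int], y_pred: Sequence[int],
                 labels: Sequence[int]) -> Dict[int, float]:
    """One-vs-rest F1 = 2TP / (2TP + FP + FN) per label; 0.0 if the label never occurs."""
    scores = {}
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        scores[c] = 2 * tp / denom if denom else 0.0
    return scores


def mean_f1_over_folds(folds: Sequence[Tuple[Sequence[int], Sequence[int]]],
                       labels: Sequence[int]) -> Dict[int, float]:
    """Average each label's F1 across folds, given (y_true, y_pred) for every held-out fold."""
    per_fold = [per_class_f1(t, p, labels) for t, p in folds]
    return {c: sum(f[c] for f in per_fold) / len(per_fold) for c in labels}


if __name__ == "__main__":
    # Made-up held-out labels/predictions for two folds, discomfort scores 1..3.
    toy = [([1, 1, 2, 3], [1, 2, 2, 3]), ([1, 2, 3], [1, 2, 3])]
    print(mean_f1_over_folds(toy, labels=[1, 2, 3]))
```

Averaging F1 per class, rather than pooling all folds into one confusion matrix, keeps each fold's score separate. This matches the "average F1 value" wording, though the authors may have aggregated differently.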

This paper analyses the use of Immersive Experiences (IX) within artistic research, as an interdisciplinary environment spanning artistic and practice-based research, visual pedagogies, and the social and cognitive sciences. It examines IX in the context of shared social spaces and presents the Immersive Lab University of Malta (ILUM) interdisciplinary research project. ILUM has a dedicated room at the Department of Digital Arts, Faculty of Media & Knowledge Sciences, University of Malta. The room is set up with life-size surround projection and surround sound to provide a number of viewers located within the set-up with an IX virtual reality environment.

Mid-air imaging is promising for glasses-free mixed reality systems in which the viewer does not need to wear any special equipment. However, it is difficult to install such an optical system in a public space because conventional designs expose the optical components and obstruct the field of view. In this paper, we propose an optical system that forms mid-air images in front of building walls by installing the optical components overhead. It displays a vertical mid-air image that is easily visible in front of the viewer. Our contributions are formulating the system's field of view, measuring the luminance of the mid-air images it forms with a luminance meter, conducting psychophysical experiments to measure the resolution of those images, and selecting suitable materials for the system. We found that highly reflective materials, such as touch displays, transparent acrylic boards, and white porcelain tiles, are not suitable for forming visible mid-air images with our system. We prototyped two applications as a proof of concept and observed user reactions to evaluate the system.