Archives are traditionally regarded as holders of text-based information. However, they also hold audio and video materials, which are the focus of this paper. In archival institutions, the absence of transcriptions for audio and video materials presents significant challenges. These materials often have historical, cultural, and research value, but without transcriptions they are difficult to index and search, which limits their accessibility and usability. Existing Automatic Speech Recognition (ASR) technologies can help, but their accuracy may be mediocre, especially on older or poor-quality recordings. This work addresses the challenge by combining a state-of-the-art multilingual Large Language Model (LLM), a simple-to-use User Interface (UI), and containers ready for Graphics Processing Unit (GPU) acceleration to create a simple, effective, multilingual, and portable ASR module.

The digitization of historical documents is vital for preserving cultural heritage, yet mainstream Optical Character Recognition (OCR) systems often fail to support minority languages because of limited training data and a lack of language-specific models. This study explores how open-source OCR frameworks can be adapted to overcome these limitations, using Finnish and Swedish as case studies. We present a practical methodology for fine-tuning PaddleOCR on a combination of manually annotated and synthetically generated data, supported by high-performance computing infrastructure. Our enhanced model significantly outperforms both Tesseract and baseline PaddleOCR, particularly in recognizing handwritten and domain-specific texts. The results highlight the importance of domain adaptation, GPU acceleration, and open-source flexibility in building OCR systems tailored to under-resourced languages. This work offers a replicable blueprint for cultural institutions seeking locally deployable OCR solutions.

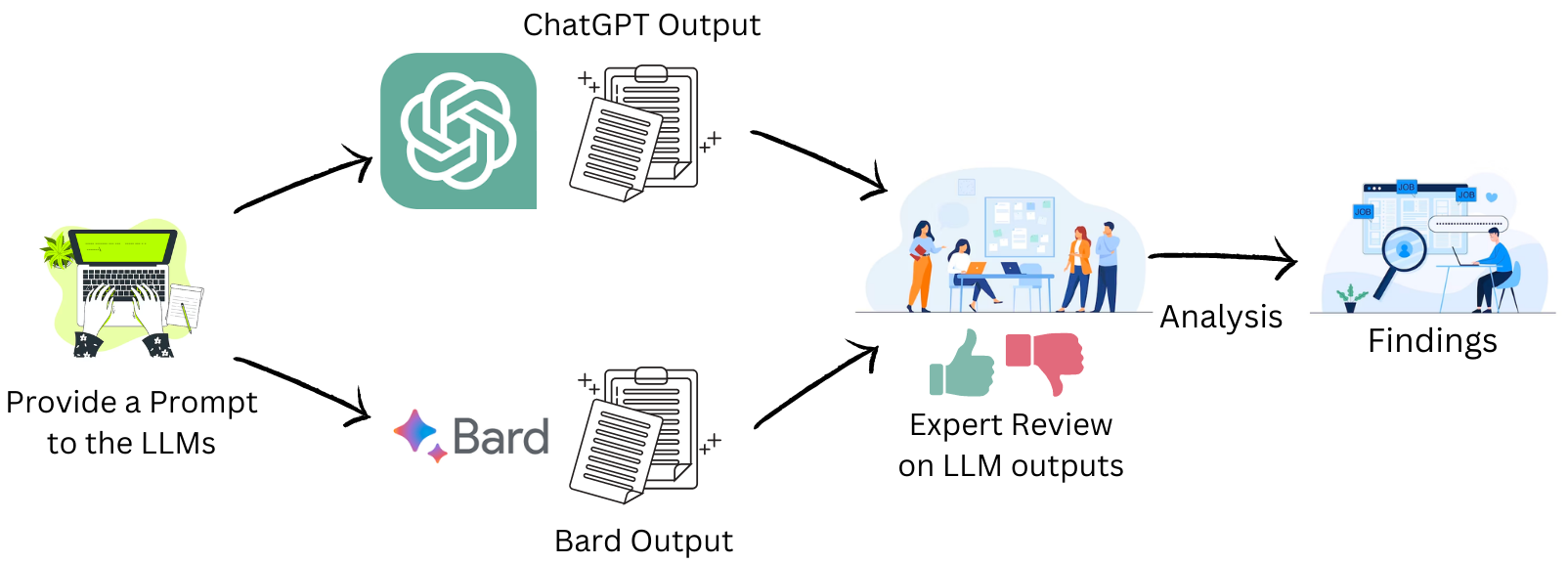

Large Language Models (LLMs) have had a substantial impact on education and literacy in recent years. Using Bloom's taxonomy, we evaluated the recommendations that two popular LLMs (OpenAI's ChatGPT and Google's Bard) provide for educating novices about Parallel Coordinate Plots (PCPs). We present the results of an evaluation of both LLMs' recommendations by human experts from the visualization literacy field. The expert evaluation showed that while both LLMs provided some relevant and practical recommendations, some recommendations were either too difficult for novices or targeted the wrong cognitive process level according to Bloom's taxonomy. In some cases, hallucinations led to recommendations that were entirely inapplicable to PCP literacy.