As 3D imaging for cultural heritage continues to evolve, it’s important to step back and assess the objective as well as the subjective attributes of image quality. The delivery and interchange of 3D content today is reminiscent of the early days of the analog-to-digital photography transition, when practitioners struggled to maintain quality for online and print representations. Traditional 2D photographic documentation techniques have matured thanks to decades of collective photographic knowledge and the development of international standards that support global archiving and interchange. Because of this maturation, still photography techniques and existing standards play a key role in shaping 3D standards for delivery, archiving and interchange. This paper outlines specific techniques to leverage ISO 19264-1 objective image quality analysis for 3D color rendition validation, and methods to translate important aesthetic photographic camera and lighting techniques from physical studio sets to rendered 3D scenes. Creating high-fidelity still reference photography of collection objects as a benchmark to assess 3D image quality for renders and online representations has helped, and will continue to help, bridge the current gaps between 2D and 3D imaging practice. The accessible techniques outlined in this paper have vastly improved the rendition of online 3D objects and will be presented in a companion workshop.
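
Color rendition validation of this kind generally comes down to comparing the CIELAB values of target patches measured in a render against reference values. The following is a minimal sketch of that comparison, assuming the simple CIE76 color difference (Euclidean distance in CIELAB). The abstract does not state which difference formula or tolerances are applied, so the metric choice, the function names, and the patch values here are illustrative assumptions only.

```python
import math

def delta_e_ab(lab_measured, lab_reference):
    """CIE76 color difference: Euclidean distance between two (L*, a*, b*) triples."""
    return math.dist(lab_measured, lab_reference)

def summarize_patches(measured, reference):
    """Return the mean and maximum color difference over paired patches."""
    diffs = [delta_e_ab(m, r) for m, r in zip(measured, reference)]
    return {"mean": sum(diffs) / len(diffs), "max": max(diffs)}

# Made-up patch values for illustration; not taken from any real target.
reference_lab = [(50.0, 0.0, 0.0), (70.0, 10.0, -5.0)]
rendered_lab = [(50.0, 3.0, 4.0), (70.0, 10.0, -5.0)]
summary = summarize_patches(rendered_lab, reference_lab)
```

The same summary can be computed for the reference photograph and for the 3D render of the same target, so the two can be judged against one tolerance.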

This paper will present the story of a collaborative project between the Imaging Department and the Paintings Conservation Department of the Metropolitan Museum of Art to use 3D imaging technology to restore missing and broken elements of an intricately carved giltwood frame from the late 18th century.

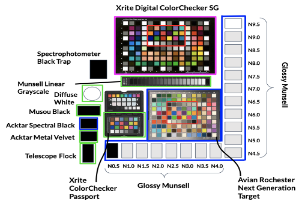

The ability of a camera to produce a color-accurate high dynamic range image depends on its capture of luminance within a scene as well as on the targets chosen to create the color transformation matrix. Capturing the wide-ranging luminance within a scene is an important part of cultural heritage documentation, because it is needed to represent an object's appearance appropriately. Color accuracy is equally critical. This research compares prosumer and mobile phone cameras for cultural heritage documentation using single exposure and high dynamic range images. It focuses on the evaluation of the color characterization process, color reproduction quality, and generation of the scene. Two types of prosumer cameras and two types of mobile phone cameras were tested at 800 lux with a wide range of color targets with various surface textures (matte, semigloss, and glossy). It was found that a color calibration matrix derived from a single exposure outperformed one derived from a fusion of multiple exposures. Additionally, including an extended achromatic scale along with the traditional Macbeth colors as part of the training data for the color calibration matrix may increase color accuracy for different cameras and for the samples in the scene.
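
To make the idea of a color calibration matrix concrete, the sketch below fits a 3x3 matrix by ordinary least squares from paired target patches (camera RGB and reference XYZ). It is an illustration under stated assumptions, not the procedure used in this research: the linear 3x3 model, the function names, and the patch values are all hypothetical, and real workflows often add linearization or higher-order terms.

```python
def fit_color_matrix(camera_rgb, target_xyz):
    """Least-squares fit of a 3x3 matrix M so that M applied to rgb approximates xyz."""
    n = 3
    # Normal equations (R^T R) M^T = R^T X, where R holds RGB rows and X holds XYZ rows.
    rtr = [[sum(p[i] * p[j] for p in camera_rgb) for j in range(n)] for i in range(n)]
    rtx = [[sum(p[i] * x[k] for p, x in zip(camera_rgb, target_xyz)) for k in range(n)]
           for i in range(n)]
    aug = [rtr[i] + rtx[i] for i in range(n)]  # augmented 3x6 system
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("training patches do not span RGB space")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pv = aug[col][col]
        aug[col] = [v / pv for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    m_t = [row[n:] for row in aug]  # rows of M^T
    return [[m_t[i][k] for i in range(n)] for k in range(n)]

def apply_matrix(m, rgb):
    """Apply a 3x3 matrix to one RGB triple."""
    return [sum(m[k][i] * rgb[i] for i in range(3)) for k in range(3)]

# Hypothetical training set: three chromatic patches plus a short achromatic scale.
chromatic = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
achromatic = [[0.8, 0.8, 0.8], [0.5, 0.5, 0.5], [0.2, 0.2, 0.2]]
training_rgb = chromatic + achromatic
```

Extending the training set with additional achromatic patches, as discussed above, only changes the rows passed to `fit_color_matrix`; note that gray patches alone cannot determine the matrix, so chromatic patches are still required.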

When one seeks to characterize the appearance of art paintings, color is the visual attribute that usually attracts the most attention: not only does color predominate in the reading of the pictorial work, but it is also the attribute that we best know how to evaluate scientifically, thanks to spectrophotometers or imaging systems that have become portable and affordable, and thanks to the CIE color appearance models that allow us to convert the measured physical data into quantified visual values. However, for some modern paintings, the expression of the painter relies at least as much on gloss as on color; Pierre Soulages (1919-2022) is an exemplary case. This considerably complicates the characterization of the appearance of the paintings because the scientific definition of gloss, its link with measurable light quantities and the measurement of these light quantities over a whole painting are much less established than for color. This paper reports on the knowledge, challenges and difficulties of characterizing the gloss of painted works, by outlining the track of an imaging system to achieve this.

Incident Command Dashboards (ICDs) play an essential role in Emergency Support Functions (ESF). They are typically centralized and handle a massive amount of live data. In this project, we explore a decentralized mobile incident commanding dashboard (MIC-D) with an improved mobile augmented reality (AR) user interface (UI) that can access and display multimodal live IoT data streams on phones, tablets, and inexpensive HUDs on first responders’ helmets. The new platform is designed to work in the field and to share live data streams among team members. It also enables users to view 3D LiDAR scan data of the location, live thermal video data, and vital sign data on the 3D map. We have built a virtual medical helicopter communication center and tested the launchpad on fire and remote fire extinguishing scenarios. We have also tested the wildfire prevention scenario “Cold Trailing” in an outdoor environment.

The color accuracy of an LED-based multispectral imaging strategy has been evaluated with respect to the number of spectral bands used to build a color profile and render the final image. Images were captured under select illumination conditions provided by 10-channel LED light sources. First, the imaging system was characterized in its full 10-band capacity, in which an image was captured under illumination by each of the 10 LEDs in turn, and the full set was used to derive a system profile. Then, the system was characterized in increasingly reduced capacities, obtained by reducing the number of bands in two ways. In one approach, image bands were systematically removed from the full 10-band set. In the other, images were captured under illumination by groups of several of the LEDs at once. For both approaches, the system was characterized using different combinations of image bands until the optimal set, giving the highest color accuracy, was determined for each case in which a total of only 9, 8, 7, or 6 bands was used to derive the profile. The results indicate that color accuracy is nearly equivalent when rendering images based on the optimal combination of anywhere from 6 to 10 spectral bands, and is maintained at a higher level than that of conventional RGB imaging. This information is a first step toward informing the development of practical LED-based multispectral imaging strategies that make spectral image capture simpler and more efficient for heritage digitization workflows.
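
The band-removal approach described above can be sketched as an exhaustive search over band combinations of a given size. This is a hedged illustration rather than the study's actual procedure: `best_band_subset` and `toy_error` are hypothetical names, and `toy_error` is a made-up placeholder for the real step of deriving a profile from the chosen bands and measuring its mean color difference on a target.

```python
from itertools import combinations

def best_band_subset(band_ids, k, mean_color_error):
    """Try every k-band combination and return (subset, error) with the lowest error."""
    best = None
    for subset in combinations(band_ids, k):
        err = mean_color_error(subset)
        if best is None or err < best[1]:
            best = (subset, err)
    return best

# Placeholder scoring only: made-up per-band weights, NOT measured data.
TOY_WEIGHTS = [5.0, 3.0, 4.0, 1.0, 6.0, 2.0, 7.0, 3.5, 4.5, 2.5]

def toy_error(subset):
    """Hypothetical stand-in for 'build profile from these bands, return mean color error'."""
    return 1.0 / sum(TOY_WEIGHTS[b] for b in subset)

# Search each reduced band count in turn, from 9 bands down to 6.
results = {k: best_band_subset(range(10), k, toy_error) for k in (9, 8, 7, 6)}
```

With 10 bands the search space is small: sizes 6 through 9 give 210 + 120 + 45 + 10 = 385 candidate sets, so evaluating every combination is practical provided each profile derivation is reasonably fast.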