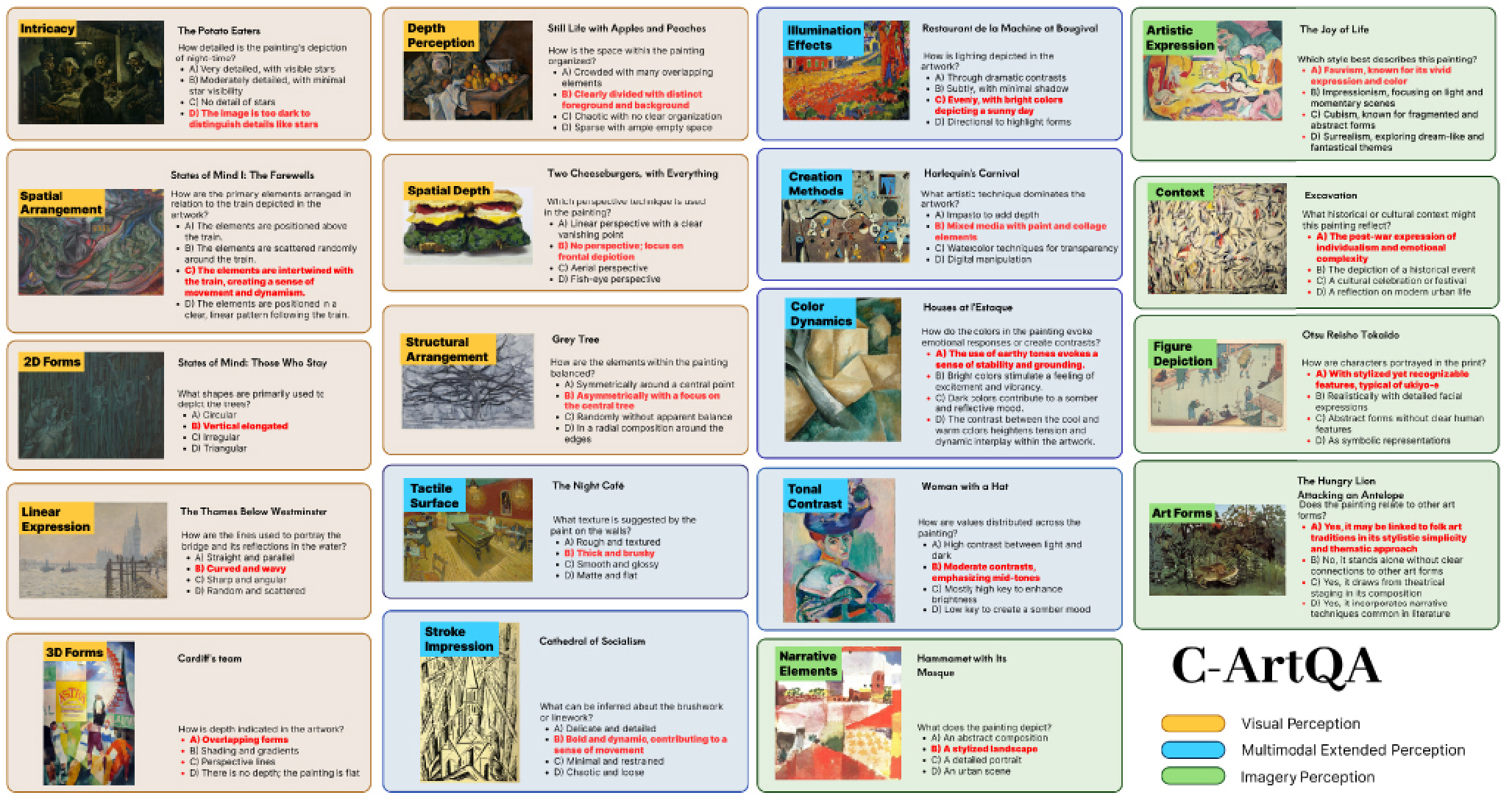

Blind and low vision (BLV) individuals face unique challenges in appreciating visual art due to a lack of objective explanations and shared artistic vocabulary. This study introduces Cultural ArtQA (C-ArtQA), a benchmark designed to assess whether current multimodal large language models (MLLMs), specifically GPT-4V and Gemini, meet BLV needs by integrating structured visual art descriptions into auditory and tactile domains. The benchmark categorizes art perception into Visual, Multimodal Extended, and Imagery Perceptions, spanning 19 fine-grained categories. The study employs visual question answering with 361 questions generated from a dataset of modern artworks, which BLV volunteers and art experts selected for their accessibility and cultural richness. Results indicate that GPT-4V excels in Visual and Imagery Perceptions, while both models underperform in Multimodal Extended Perceptions, highlighting areas for improvement in AI’s support for BLV individuals. This study lays the foundation for developing MLLMs that meet the visual art appreciation needs of the BLV community.