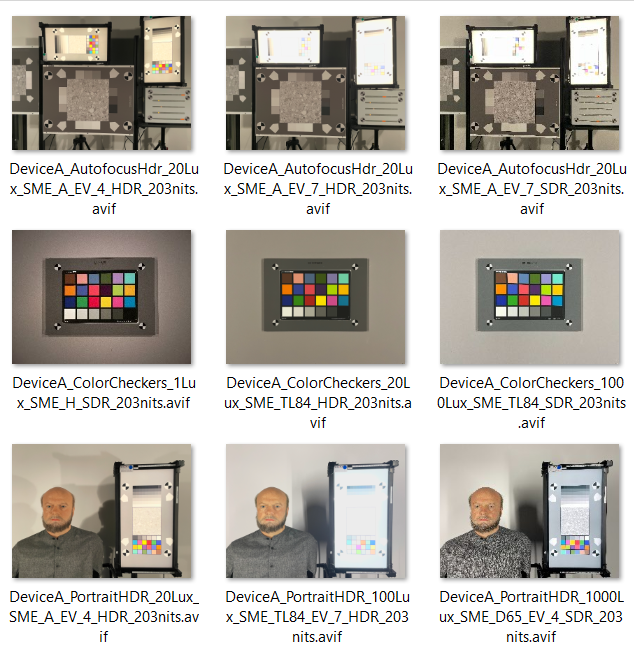

This paper continues previous work that developed a color rendering model in the ICtCp color space for evaluating SDR- and HDR-encoded content. That model, however, was tested only on an SDR image dataset. This paper analyzes a new HDR dataset of laboratory scene images using our model and additional color rendering visualization tools. The new dataset, captured with different devices and formats in controlled laboratory setups, allows HDR performance to be estimated across several key aspects, including color accuracy, contrast, and displayed brightness level, in a variety of lighting scenarios. The study provides insights into the color reproduction capabilities of modern imaging devices, highlighting the advantages of HDR imaging over SDR and the impact of different HDR formats on visual quality.

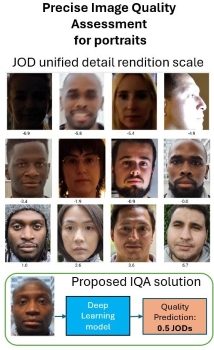

Portraits are one of the most common use cases in photography, especially in smartphone photography. However, evaluating portrait quality on real portraits is costly and difficult to reproduce. We propose a new method to evaluate a large range of detail preservation renditions on real portrait images. Our approach is based on 1) annotating a set of portrait images, grouped by semantic content, using pairwise comparison; 2) using cross-content annotations to align the quality scales, which is possible because all the images are portraits; and 3) training a machine learning model on the resulting global quality scale. In addition to providing a fine-grained, wide-range detail preservation quality output, numerical experiments show that the proposed method correlates highly with the perceptual evaluation of image quality experts.

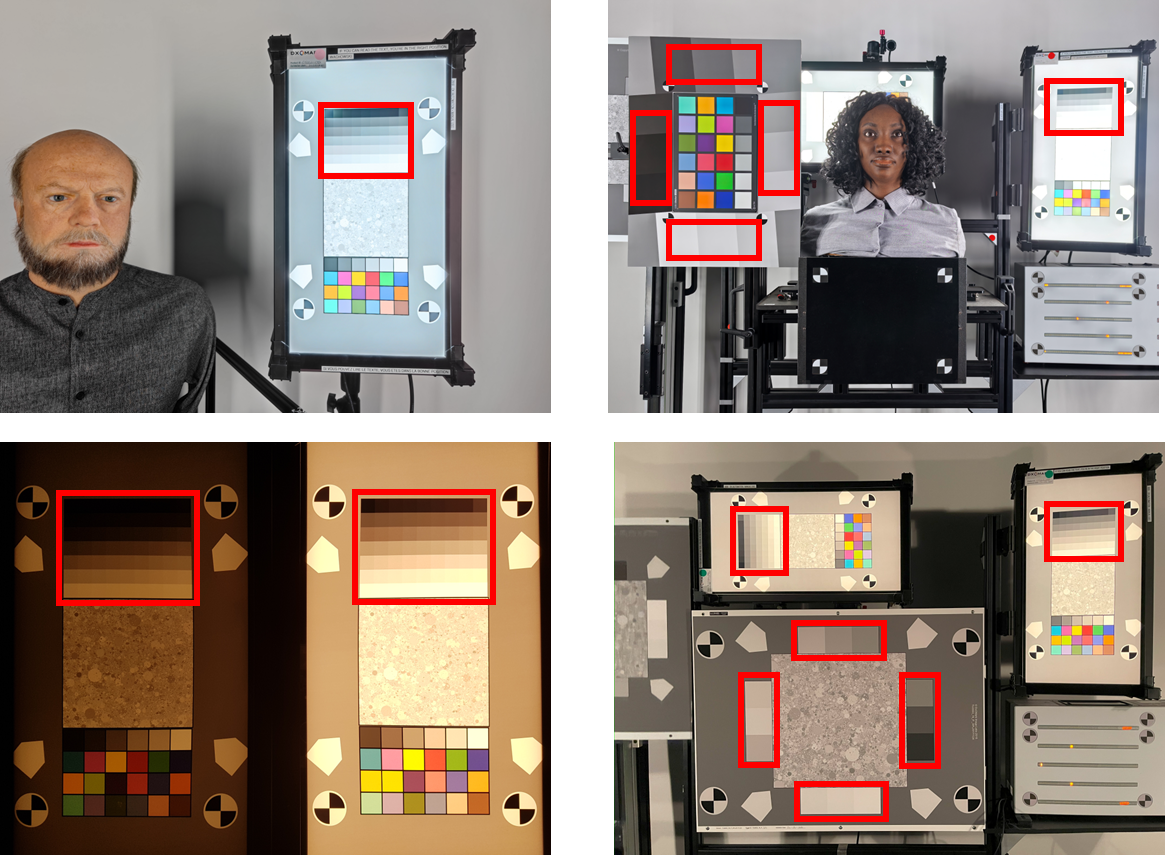

This work provides a novel glass-to-glass metric of local contrast, useful in the context of image quality evaluation of HDR content. This metric, called Local-Contrast Gain (LCG), uses the opto-optical transfer function (OOTF) of the imaging system and its first derivative to compute the incremental ratio between contrast in the scene and contrast on the display. For the metric to be perceptually meaningful, we use Weber’s definition of contrast. To obtain the OOTF in analytical form and to make the measurement robust to uncertainty in the ground-truth measurements, we rely on a model that we propose and that expands upon our previously published work. We provide experimental validation of our metric on a variety of target charts, both reflective and transmissive, both in isolation and within complex setups spanning more than six EVs.
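As a rough illustration of how such a metric could be computed, the sketch below takes one possible reading of "incremental ratio": the Weber contrast of a small luminance increment on the display divided by the Weber contrast of the same increment in the scene, which in the limit reduces to L · OOTF′(L) / OOTF(L). This formula, the function names, and the power-law OOTF with exponent 1.2 are assumptions made for illustration only. They are not the model or the exact definition proposed in the paper.

```python
def weber_contrast(luminance, background):
    """Weber contrast of a patch against its background."""
    return (luminance - background) / background


def local_contrast_gain(ootf, ootf_derivative, scene_luminance):
    """Assumed form of the gain: display Weber contrast over scene Weber
    contrast for an infinitesimal increment, i.e. L * f'(L) / f(L)."""
    return (scene_luminance * ootf_derivative(scene_luminance)
            / ootf(scene_luminance))


# Hypothetical analytical OOTF: a pure power law (illustrative only).
GAMMA = 1.2


def power_ootf(scene_luminance):
    return scene_luminance ** GAMMA


def power_ootf_derivative(scene_luminance):
    return GAMMA * scene_luminance ** (GAMMA - 1.0)


# Analytical gain at a scene luminance of 50 (arbitrary units).
analytic_gain = local_contrast_gain(power_ootf, power_ootf_derivative, 50.0)

# Finite-difference check using explicit Weber contrasts.
background = 50.0
patch = 50.05
scene_contrast = weber_contrast(patch, background)
display_contrast = weber_contrast(power_ootf(patch), power_ootf(background))
finite_difference_gain = display_contrast / scene_contrast

print(analytic_gain, finite_difference_gain)
```

For a pure power law the gain under this reading is constant and equal to the exponent. A gain above 1 would mean local contrast is amplified between scene and display, and a gain below 1 would mean it is compressed.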

Portraits are one of the most common use cases in photography, especially in smartphone photography. However, evaluating portrait quality on real portraits is costly, inconvenient, and difficult to reproduce. We propose a new method to evaluate a large range of detail preservation renditions on realistic mannequins. This laboratory setup can cover all commercial cameras, from videoconference cameras to high-end DSLRs. Our method is based on 1) training a machine learning model on a perceptual scale target; 2) using two different regions of interest per mannequin, depending on the quality of the input portrait image; and 3) merging the two quality scales to produce the final wide-range scale. In addition to providing a fine-grained, wide-range detail preservation quality output, numerical experiments show that the proposed method is robust to noise and sharpening, unlike other commonly used methods such as the texture acutance on the Dead Leaves chart.

Driving assistance is increasingly used in new car models. Most driving assistance systems are based on automotive cameras and computer vision. Computer vision, regardless of the underlying algorithms and technology, requires images of good quality, where "good" is defined according to the task. This notion of good image quality is still to be defined for computer vision, whose criteria are very different from those of human vision: humans have a better contrast detection ability than image chains. The aim of this article is to compare three metrics designed for object detection with computer vision: the Contrast Detection Probability (CDP) [1, 2, 3, 4], the Contrast Signal-to-Noise Ratio (CSNR) [5], and the Frequency of Correct Resolution (FCR) [6]. For this purpose, the computer vision task of reading the characters on a license plate is used as a benchmark. The objective is to check the correlation between each objective metric and the ability of a neural network to perform this task. To that end, we designed a protocol to test these metrics and compare them to the output of the neural network, and we note the pros and cons of each of the three metrics.
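One way such a correlation check could be carried out is sketched below with a Spearman rank correlation between a metric's values and a network's character recognition rate over a set of test conditions. The choice of Spearman correlation is an assumption, since the abstract does not say which coefficient is used. The numbers are made-up placeholders and not results from the article.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    spread_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return covariance / (spread_x * spread_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Placeholder data (hypothetical): one metric value and one recognition
# rate per test condition, e.g. per lighting level or contrast level.
metric_values = [0.12, 0.35, 0.50, 0.71, 0.93]
recognition_rates = [0.05, 0.40, 0.38, 0.80, 0.97]

rho = spearman(metric_values, recognition_rates)
print(f"Spearman correlation: {rho:.2f}")
```

A rank correlation only assumes that the recognition rate rises monotonically with the metric, which is a weaker assumption than the linear relationship tested by a Pearson coefficient.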

Near-infrared (NIR) light sources have become increasingly present in our daily lives, which has led to growth in the number of cameras designed for viewing in the NIR spectrum (sometimes in addition to the visible spectrum) in the automotive, mobile, and surveillance sectors. However, camera evaluation metrics are still mainly focused on sensors operating in visible light. The goal of this article is to extend our existing flare setup and objective flare metric to quantify NIR flare for different cameras and to evaluate the performance of several NIR filters. We also compare the results in both visible and NIR lighting for different types of devices. Moreover, we propose a new method that uses our flare setup to measure the ISO speed rating in the visible spectrum (originally defined in the ISO 12232 standard) and an equivalent ISO for the NIR spectrum.