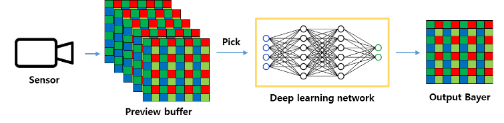

With the emergence of 200-megapixel QxQ Bayer pattern image sensors, remosaic technology, which rearranges color filter arrays (CFAs) into Bayer patterns, has become increasingly important. However, the limitations of the in-sensor remosaic algorithm often produce artifacts that degrade image details and textures. In this paper, we propose a deep learning-based artifact correction method that enhances image quality in a mobile environment while minimizing shutter lag. We generated a training dataset using a high-performance remosaic algorithm and trained a lightweight U-Net-based network. The proposed network effectively removes these artifacts, thereby improving overall image quality. Additionally, it takes only about 15 ms to process a 4000×3000 image on a Galaxy S22 Ultra, making it suitable for real-time applications.
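
To make "rearranging a CFA into a Bayer pattern" concrete, here is an illustrative sketch that builds both layouts and measures how many pixel positions carry a different color than the target Bayer pattern. At those positions the Bayer value cannot be read directly from the pixel and has to be derived from other pixels. It assumes RGGB ordering and that a QxQ sensor expands each Bayer color into a 4×4 same-color block (n=2 gives the familiar quad-Bayer layout). The function names are hypothetical, and the sketch does not reproduce the paper's network or its remosaic algorithm.

```python
import numpy as np

RGGB = np.array([["R", "G"], ["G", "B"]])  # assumed Bayer ordering

def bayer_cfa(h, w):
    """Standard Bayer CFA: the 2x2 RGGB tile repeated over the sensor."""
    return np.tile(RGGB, (h // 2, w // 2))

def nxn_cfa(h, w, n):
    """Bayer layout with each color expanded to an n x n same-color block.
    n=2 is quad-Bayer; n=4 is assumed here for the QxQ layout."""
    tile = np.repeat(np.repeat(RGGB, n, axis=0), n, axis=1)  # (2n, 2n) tile
    return np.tile(tile, (h // (2 * n), w // (2 * n)))

def remosaic_fraction(h, w, n):
    """Fraction of pixels whose native color differs from the target Bayer
    color at the same position, i.e. pixels a remosaic step cannot simply keep."""
    return float(np.mean(nxn_cfa(h, w, n) != bayer_cfa(h, w)))
```

For example, `remosaic_fraction(8, 8, 4)` and `remosaic_fraction(4, 4, 2)` both evaluate to 0.625: under these assumptions, five out of every eight pixels must be re-estimated, which is where remosaic artifacts can arise.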

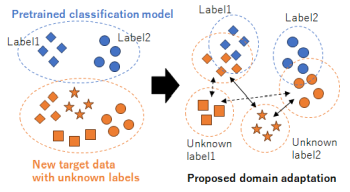

Domain adaptation, which transfers an existing system with teacher labels (source domain) to another system without teacher labels (target domain), has garnered significant interest as a way to reduce human annotation and build AI models efficiently. Open set domain adaptation considers unknown labels in the target domain that were not present in the source domain. Conventional methods treat all unknown labels as a single unknown class, but this assumption may not hold in real-world scenarios. To address this challenge, we propose open set domain adaptation for image classification with multiple unknown labels. Assuming that unknown labels in the target domain differ from the known labels in the source domain in feature space in a way that depends on their type, we use clustering to classify the types of unknown labels based on the pixel-wise feature distances between target-domain samples and the known source-domain labels. This allows us to assign pseudo-labels to target samples from the results of unsupervised clustering with an unknown number of clusters. Experimental results show that re-training with these pseudo-labels in a closed set domain adaptation setting improves domain adaptation accuracy.
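
The abstract does not name its clustering algorithm. The NumPy sketch below is one plausible reading, not the authors' implementation. It represents each target sample by its pixel-wise feature distance to each known-class prototype, averaged over pixels, and clusters those distance vectors with k-means. The unknown number of clusters is handled by picking the k with the best silhouette score. Prototype feature maps, k-means, farthest-point initialization, silhouette-based model selection, and every function name are assumptions introduced for illustration.

```python
import numpy as np

def pixelwise_distances(feats, protos):
    """feats: (N, C, H, W) target feature maps; protos: (K, C, H, W) known-class
    prototypes (hypothetical). Returns (N, K): per-pixel Euclidean distance over
    channels, averaged over all pixels."""
    diff = feats[:, None] - protos[None]                  # (N, K, C, H, W)
    return np.sqrt((diff ** 2).sum(axis=2)).mean(axis=(2, 3))

def kmeans(x, k, iters=100):
    """Lloyd's k-means with deterministic farthest-point initialization."""
    centers = [x[0]]
    for _ in range(1, k):
        d2 = np.min([((x - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(x[np.argmax(d2)])
    centers = np.array(centers)
    for _ in range(iters):
        labels = np.argmin(((x[:, None] - centers[None]) ** 2).sum(-1), axis=1)
        new = np.array([x[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return np.argmin(((x[:, None] - centers[None]) ** 2).sum(-1), axis=1)

def silhouette(x, labels):
    """Mean silhouette coefficient; singleton clusters contribute 0."""
    d = np.sqrt(((x[:, None] - x[None]) ** 2).sum(-1))
    ks = np.unique(labels)
    if len(ks) < 2:
        return -1.0
    s = np.zeros(len(x))
    for i in range(len(x)):
        same = labels == labels[i]
        if same.sum() <= 1:
            continue
        a = d[i, same].sum() / (same.sum() - 1)           # self-distance is 0
        b = min(d[i, labels == c].mean() for c in ks if c != labels[i])
        m = max(a, b)
        s[i] = (b - a) / m if m > 0 else 0.0
    return float(s.mean())

def pseudo_label_unknowns(feats, protos, k_max=6):
    """Cluster target samples in prototype-distance space; choose k by silhouette."""
    z = pixelwise_distances(feats, protos)
    ks = range(2, min(k_max, len(z) - 1) + 1)
    best_k = max(ks, key=lambda k: silhouette(z, kmeans(z, k)))
    return best_k, kmeans(z, best_k)
```

The returned cluster indices would serve as pseudo-labels for the unknown types before closed-set re-training. Silhouette selection is only one common heuristic for an unknown cluster count, and density-based methods are an alternative.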

We review the design of the SSIM quality metric and offer an alternative model of SSIM computation that uses subband decomposition and identical distance measures in each subband. We show that this model performs very close to the original SSIM and offers many methodological advantages. It immediately suggests several possible explanations for why SSIM is effective. It also suggests a simple strategy for allocating noise across bands to optimize SSIM scores, which may aid the design of encoders or pre-processing filters for video coding. Finally, the model leads to more straightforward mathematical connections between the SSIM, MSE, and SNR metrics, improving previously known results.
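
The sketch below shows the kind of algebraic link between SSIM and MSE the abstract refers to. It uses the standard single-window SSIM formula, not the paper's subband model. Because the error variance satisfies σ²(x−y) = σx² + σy² − 2σxy, SSIM can be rewritten as (1 − (μx−μy)²/(μx²+μy²+C1)) · (1 − σ²(x−y)/(σx²+σy²+C2)). Both factors are normalized pieces of MSE = (μx−μy)² + σ²(x−y). The constants are the commonly used 8-bit defaults, and the function names are illustrative.

```python
import numpy as np

C1, C2 = (0.01 * 255) ** 2, (0.03 * 255) ** 2  # common constants for 8-bit data

def ssim_single_window(x, y, c1=C1, c2=C2):
    """Standard SSIM over one window (the whole array), population statistics."""
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cxy = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cxy + c2)) / ((mx**2 + my**2 + c1) * (vx + vy + c2))

def ssim_error_form(x, y, c1=C1, c2=C2):
    """The same value written via the mean error and the error-signal variance."""
    mx, my = x.mean(), y.mean()
    luminance = 1 - (mx - my) ** 2 / (mx**2 + my**2 + c1)
    contrast_structure = 1 - (x - y).var() / (x.var() + y.var() + c2)
    return luminance * contrast_structure
```

The two functions agree up to floating-point rounding. The error form makes explicit that SSIM penalizes the same two MSE components, each divided by a signal-dependent energy term.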

Image denoising is a classical preprocessing stage used to enhance images. However, it is well known that in many practical cases, various denoising methods produce images of inadequate visual quality, which makes applying denoising useless. Therefore, it is desirable to detect such cases in advance and decide whether image denoising (filtering) is expedient. This paper analyzes this problem for the well-known BM3D denoiser. We propose a decision-making algorithm on the expedience of denoising images corrupted by additive white Gaussian noise (AWGN). We also propose an algorithm that predicts subjective visual quality scores of denoised images using a trained artificial neural network. It is shown that this prediction is fast and accurate.
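
The abstract does not give the decision rule, so this sketch illustrates only two things: the AWGN model it assumes and one standard way to estimate the noise level σ, which BM3D takes as an input. The estimator is the median-absolute-deviation rule applied to finest-scale Haar diagonal (HH) coefficients, σ ≈ median(|HH|)/0.6745. It is included here as an assumption, not as the paper's method. The function names are hypothetical.

```python
import numpy as np

def add_awgn(img, sigma, rng):
    """AWGN model: y = x + n, with n ~ N(0, sigma^2) i.i.d. per pixel."""
    return img + rng.normal(0.0, sigma, img.shape)

def estimate_sigma_mad(img):
    """Robust noise-std estimate from orthonormal Haar HH coefficients.
    For i.i.d. noise the HH coefficients have variance sigma^2, and smooth
    (locally linear) image content contributes almost nothing to them."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    x = img[:h, :w].astype(float)
    hh = (x[0::2, 0::2] - x[0::2, 1::2] - x[1::2, 0::2] + x[1::2, 1::2]) / 2.0
    return float(np.median(np.abs(hh)) / 0.6745)
```

A decision-making step such as the one the paper proposes could use an estimate like this as one input. The paper's actual criterion and its neural-network quality predictor are not reproduced here.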

Recent decades have witnessed an increasing number of works proposing objective measures for media quality assessment, i.e., estimating the mean opinion score (MOS) of human observers. In this contribution, we investigate the possibility of modeling and predicting a single observer's opinion scores rather than the MOS. More precisely, we attempt to approximate the choices of one observer by designing a neural network (NN) that mimics that observer's behavior in terms of visual quality perception. Once such NNs (one per observer) are trained, they can be regarded as "virtual observers": they take as input information about a sequence and output the score that the corresponding observer would have given after watching it. This approach makes it possible to automatically obtain different opinions on the perceived visual quality of a sequence under investigation, and thus to estimate not only the MOS but also other statistical indexes, such as the standard deviation of the opinions. Extensive numerical experiments provide further insight into the suitability of the approach.
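
Once each observer is modeled by its own predictor, panel statistics follow directly from the individual virtual scores. In this sketch a "virtual observer" is any callable that maps a sequence description to a score. The dictionary input and the toy observers are hypothetical stand-ins for trained NNs. The confidence interval uses the 1.96·S/√N convention common in subjective testing, for example in ITU-R BT.500.

```python
import math
import statistics
from typing import Callable, Sequence

# Hypothetical interface: a trained per-observer model mapping sequence info to a score.
VirtualObserver = Callable[[dict], float]

def panel_statistics(observers: Sequence[VirtualObserver], seq: dict):
    """Query every virtual observer and summarize their opinions."""
    scores = [obs(seq) for obs in observers]
    mos = statistics.mean(scores)
    sd = statistics.stdev(scores)                 # sample standard deviation (n - 1)
    ci95 = 1.96 * sd / math.sqrt(len(scores))     # 95% CI half-width around the MOS
    return mos, sd, ci95

# Toy observers that are systematically harsher or more lenient than a base score.
observers = [lambda s, bias=b: s["q"] + bias for b in (-1.0, 0.0, 1.0)]
mos, sd, ci95 = panel_statistics(observers, {"q": 4.0})
```

Here the three toy observers give scores 3, 4, and 5, so the MOS is 4.0 and the standard deviation is 1.0. With real trained observers, the spread would reflect genuine inter-observer disagreement.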

Print quality (PQ) is of paramount importance in the printing industry, and detecting and analyzing print defects is an effective way to improve it. Because different types of print defects appear in different regions of interest (ROIs) of the digital image of a scanned page, extracting these ROIs helps detect and analyze printer defects. This paper proposes a method to extract different ROIs based on the digital image object map [1], which includes three labels: raster (images or pictures), vector (background and smooth gradient color areas), and symbol (symbols and text). Our method extracts four kinds of ROIs from these three labeled object types, so the background and smooth gradient color areas (color vector) must be distinguished from other vector objects. The ROI extraction process consists of four parts, each of which extracts one kind of ROI. For the color vector and background ROI extraction part, we develop two approaches: one obtains the maximum-area rectangular ROI, and the other extracts the deepest rectangular ROI. With both approaches, we use a greedy algorithm to gather additional useful ROIs. In the final result, only the top-left and bottom-right positions of each ROI are saved. Finally, we design a MATLAB GUI tool and manually label the ROI ground truth. To evaluate the algorithm, we calculate the intersection over union (IoU) between the extracted ROIs and the manually labeled ground truth, and check whether the extraction is accurate enough to crop different ROIs from the scanned page image for detecting and analyzing print defects.
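
The abstract names four ingredients: a maximum-area rectangular ROI, a greedy step that gathers additional ROIs, boxes stored as top-left/bottom-right corners, and IoU evaluation. The sketch below assumes the color-vector/background region is given as a binary mask. It finds the largest all-True rectangle with the standard histogram-and-stack method, repeats greedily on the remaining pixels until rectangles fall below a hypothetical `min_area`, and scores boxes with IoU. The "deepest rectangular ROI" variant and the paper's exact greedy criteria are not reproduced, and all names are illustrative.

```python
def max_rectangle(mask):
    """Largest axis-aligned all-True rectangle in a 2D boolean list.
    Returns (top, left, bottom, right) with inclusive coordinates, or None."""
    h, w = len(mask), len(mask[0])
    heights = [0] * w                      # run length of True cells ending at row r
    best, best_box = 0, None
    for r in range(h):
        for c in range(w):
            heights[c] = heights[c] + 1 if mask[r][c] else 0
        stack = []                         # column indices with non-decreasing heights
        for c in range(w + 1):
            cur = heights[c] if c < w else 0
            while stack and heights[stack[-1]] >= cur:
                top_h = heights[stack.pop()]
                left = stack[-1] + 1 if stack else 0
                area = top_h * (c - left)
                if area > best:
                    best, best_box = area, (r - top_h + 1, left, r, c - 1)
            stack.append(c)
    return best_box

def greedy_rois(mask, min_area):
    """Repeatedly take the largest rectangle and clear it, until it is too small."""
    m = [row[:] for row in mask]
    rois = []
    while (box := max_rectangle(m)) is not None:
        t, l, b, r = box
        if (b - t + 1) * (r - l + 1) < min_area:
            break
        rois.append(box)                   # stored as top-left / bottom-right corners
        for i in range(t, b + 1):
            for j in range(l, r + 1):
                m[i][j] = False
    return rois

def iou(a, b):
    """IoU of two inclusive (top, left, bottom, right) boxes."""
    inter_h = max(0, min(a[2], b[2]) - max(a[0], b[0]) + 1)
    inter_w = max(0, min(a[3], b[3]) - max(a[1], b[1]) + 1)
    inter = inter_h * inter_w
    area = lambda x: (x[2] - x[0] + 1) * (x[3] - x[1] + 1)
    return inter / (area(a) + area(b) - inter)
```

The stack-based scan runs in O(H·W) per call, so the greedy loop costs O(H·W) per extracted ROI. Each extracted box could then be compared with its manually labeled counterpart via `iou`.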

Currently, a particular scan resolution has to be defined before a scanner starts working. Two problems arise from this process. First, no matter how different the contents of two pages are, they are scanned at the same resolution. For example, after scanning, a blank page and a finely detailed drawing will have the same resolution. Second, within one scanned page, every part of the output has the same resolution, regardless of its content. These problems waste memory used to store scanned images, so a method to determine the minimum acceptable scan resolution is needed. However, current image quality estimators are not suitable for estimating image quality at different resolutions. This paper proposes four features to assess image quality at different resolutions, namely 75, 100, 150, 200, and 300 dpi. The features are tile-SSIM mean, tile-SSIM standard deviation, horizontal transition density, and vertical transition density. Tests on images with different contents show that these features are promising for evaluating image quality across different scan resolutions.
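
The abstract names the four features but does not define them precisely, so the definitions below are assumptions. Tile-SSIM is taken as single-window SSIM computed per non-overlapping tile between a reference scan and a lower-resolution scan already resampled to the same size (the resampling is not shown). Transition density is taken as the fraction of horizontally or vertically adjacent pixel pairs whose binarized values differ. The tile size, threshold, and function names are hypothetical.

```python
import numpy as np

C1, C2 = (0.01 * 255) ** 2, (0.03 * 255) ** 2  # common constants for 8-bit data

def ssim(x, y):
    """Single-window SSIM with population statistics."""
    mx, my = x.mean(), y.mean()
    cxy = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + C1) * (2 * cxy + C2)) / ((mx**2 + my**2 + C1) * (x.var() + y.var() + C2))

def tile_ssim_stats(ref, test, tile=32):
    """Mean and standard deviation of per-tile SSIM between two same-size images."""
    ref, test = ref.astype(float), test.astype(float)
    h, w = ref.shape
    scores = [ssim(ref[i:i + tile, j:j + tile], test[i:i + tile, j:j + tile])
              for i in range(0, h - tile + 1, tile) for j in range(0, w - tile + 1, tile)]
    return float(np.mean(scores)), float(np.std(scores))

def transition_densities(img, threshold=128):
    """Fraction of horizontally / vertically adjacent pairs whose binarized values differ."""
    ink = np.asarray(img) < threshold          # True = dark (ink) pixel
    horizontal = np.mean(ink[:, 1:] != ink[:, :-1])
    vertical = np.mean(ink[1:, :] != ink[:-1, :])
    return float(horizontal), float(vertical)
```

Under these definitions, a blank page has zero transition density in both directions, while fine text or line art has high density. This matches the intuition that busy content needs a higher scan resolution.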

This paper presents a new method for no-reference mesh visual quality assessment using a convolutional neural network. To do this, we first render 2D images from multiple views of the 3D mesh. Then, each image is split into small patches that are fed to a convolutional neural network. The network consists of two convolutional layers with two max-pooling layers. A multilayer perceptron (MLP) with two fully connected layers then summarizes the learned representation into a single output node. With this network structure, feature learning and regression are used to predict the quality score of a given distorted mesh without access to the reference mesh. Experiments have been conducted on the LIRIS/EPFL general-purpose database. The results show that the proposed method achieves good correlation and competitive scores compared to several influential and effective full-reference and reduced-reference methods.
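
A minimal NumPy forward-pass sketch of the described architecture follows. It splits a rendered view into patches and passes each patch through two convolution + max-pooling stages and a two-layer MLP ending in one output node. Several details are assumptions not stated in the abstract: 3×3 kernels, the channel widths, 32×32 patches, ReLU activations, random untrained weights, and averaging patch scores over all patches and views to get one mesh score. Rendering and training are omitted, and the function names are illustrative.

```python
import numpy as np

def extract_patches(img, p):
    """Non-overlapping p x p patches of a 2D rendered view."""
    h, w = img.shape
    return np.stack([img[i:i + p, j:j + p] for i in range(0, h - p + 1, p)
                     for j in range(0, w - p + 1, p)])

def conv2d_valid(x, k, b):
    """x: (Cin, H, W), k: (Cout, Cin, kh, kw) -> (Cout, H-kh+1, W-kw+1), no padding."""
    cout, _, kh, kw = k.shape
    _, h, w = x.shape
    out = np.empty((cout, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            out[:, i, j] = np.tensordot(k, x[:, i:i + kh, j:j + kw], axes=([1, 2, 3], [0, 1, 2])) + b
    return out

def maxpool2(x):
    """2x2 max pooling with stride 2 (odd trailing rows/columns dropped)."""
    c, h, w = x.shape
    return x[:, :h // 2 * 2, :w // 2 * 2].reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def relu(z):
    return np.maximum(z, 0)

def init_params(rng, patch=32, c1=8, c2=16, hidden=64, k=3):
    s = ((patch - k + 1) // 2 - k + 1) // 2   # spatial size after conv-pool-conv-pool
    return {"k1": rng.normal(0, 0.1, (c1, 1, k, k)), "b1": np.zeros(c1),
            "k2": rng.normal(0, 0.1, (c2, c1, k, k)), "b2": np.zeros(c2),
            "w3": rng.normal(0, 0.1, (hidden, c2 * s * s)), "b3": np.zeros(hidden),
            "w4": rng.normal(0, 0.1, (1, hidden)), "b4": np.zeros(1)}

def patch_score(p, params):
    x = maxpool2(relu(conv2d_valid(p[None], params["k1"], params["b1"])))  # conv + pool 1
    x = maxpool2(relu(conv2d_valid(x, params["k2"], params["b2"])))        # conv + pool 2
    h = relu(params["w3"] @ x.ravel() + params["b3"])                      # FC layer 1
    return float((params["w4"] @ h + params["b4"])[0])                     # FC layer 2 -> one node

def mesh_score(views, params, patch=32):
    """Assumed pooling: average patch scores over all patches of all views."""
    return float(np.mean([patch_score(q, params) for v in views for q in extract_patches(v, patch)]))
```

In practice the weights would be learned by regressing patch scores against subjective scores of the distorted meshes. A deep-learning framework would replace the explicit loops used here for readability.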