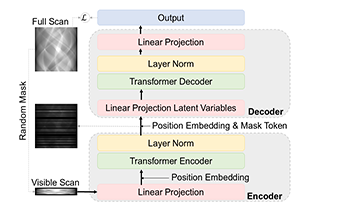

Sinogram inpainting is a critical task in computed tomography (CT) imaging, because missing or incomplete sinogram data can substantially degrade the quality of the reconstructed image. Accurate sinogram inpainting is therefore essential for obtaining high-quality CT images that support reliable diagnosis and treatment. To address this challenge, we propose SinoTx, a Transformer-based model designed specifically for sinogram completion. SinoTx exploits the ability of Transformers to capture global dependencies, which makes it well suited to the complex patterns present in sinograms. Experimental results show that SinoTx outperforms existing baseline methods, with improvements of up to 32.3% in the Structural Similarity Index (SSIM) and up to 44.2% in Peak Signal-to-Noise Ratio (PSNR).
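For readers less familiar with the reported metrics, the sketch below shows how PSNR is commonly computed and how a percentage gain over a baseline can be expressed. It assumes that the reported gains are *relative* improvements, (proposed − baseline) / baseline, and that PSNR uses a known `data_range` (the maximum possible signal value). The abstract does not state either convention, so both are assumptions. The function names `psnr` and `relative_improvement` are hypothetical illustrations, not part of SinoTx, and the arrays are synthetic, not data from the paper.

```python
import numpy as np


def psnr(reference: np.ndarray, estimate: np.ndarray, data_range: float) -> float:
    """Peak Signal-to-Noise Ratio in dB: 10 * log10(data_range**2 / MSE).

    Assumption: `data_range` is the maximum possible value of the signal
    (e.g. 1.0 for sinograms normalized to [0, 1]).
    """
    diff = reference.astype(np.float64) - estimate.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        # Identical inputs: PSNR is unbounded.
        return float("inf")
    return 10.0 * float(np.log10(data_range ** 2 / mse))


def relative_improvement(baseline: float, proposed: float) -> float:
    """Relative gain in percent, assuming the paper reports relative gains."""
    return (proposed - baseline) / baseline * 100.0


if __name__ == "__main__":
    # Synthetic stand-in for a sinogram: 180 projection angles x 128 detector bins.
    rng = np.random.default_rng(0)
    sinogram = rng.random((180, 128))
    # A uniform error of 0.1 everywhere gives MSE = 0.01, i.e. PSNR = 20 dB.
    noisy = sinogram + 0.1
    print(f"PSNR: {psnr(sinogram, noisy, data_range=1.0):.2f} dB")
    # A hypothetical rise from 20 dB to 25 dB is a 25% relative improvement.
    print(f"Relative gain: {relative_improvement(20.0, 25.0):.1f}%")
```

SSIM is more involved, since it compares local means, variances, and covariances over sliding windows. In practice it is usually taken from an established library rather than reimplemented.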