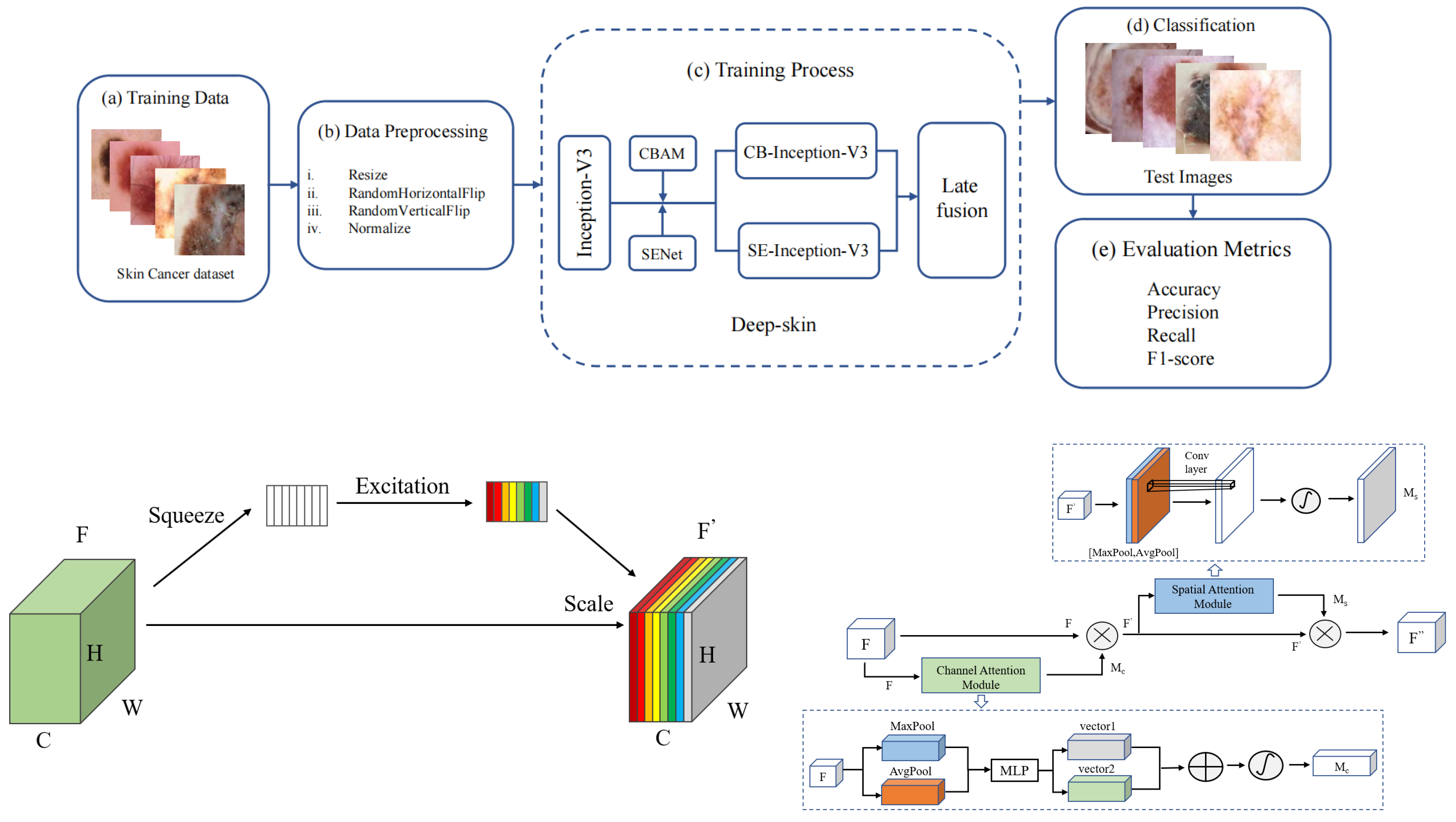

Skin tumors have become one of the most common diseases worldwide. Benign skin tumors are usually not harmful to human health, but malignant skin tumors are highly likely to develop into skin cancer, which is life-threatening. Dermoscopy is currently the most effective method of diagnosing skin tumors. However, the complexity of skin tumor cells makes doctors’ diagnoses subject to error. Computer-assisted diagnosis is therefore essential for improving the diagnostic accuracy of skin tumors. In this paper, we propose Deep-skin, a model for dermoscopic image classification based on both attention mechanisms and ensemble learning. Considering the characteristics of dermoscopic images, we embed different attention mechanisms on top of Inception-V3 to obtain more potential features. We then improve the classification performance by late fusion of the different models. To demonstrate the effectiveness of Deep-skin, we conduct experiments and evaluations on the publicly available dataset Skin Cancer: Malignant vs. Benign and compare the performance of Deep-skin with other classification models. The experimental results indicate that Deep-skin performs well on the dataset in comparison to other models, achieving a maximum accuracy of 87.8%. In the future, we intend to investigate better classification models for automatic diagnosis of skin tumors. Such models can potentially assist physicians and patients in clinical settings.

Link to dataset: Skin Cancer: Malignant vs. Benign (kaggle.com)
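The late-fusion step mentioned in the Deep-skin abstract can be illustrated with a minimal sketch. It assumes that fusion means averaging the class probabilities produced by each model and taking the most probable class; the abstract does not state the exact fusion rule, and the function name `late_fusion` and the probability values below are hypothetical.

```python
from typing import List, Sequence


def late_fusion(prob_sets: Sequence[Sequence[Sequence[float]]]) -> List[int]:
    """Average per-model class probabilities and return the argmax class per sample.

    prob_sets[m][i][c] is the probability model m assigns to class c for sample i.
    Averaging is an assumed fusion rule, not necessarily the one used in the paper.
    """
    n_models = len(prob_sets)
    n_samples = len(prob_sets[0])
    fused = []
    for i in range(n_samples):
        n_classes = len(prob_sets[0][i])
        # Mean probability of each class across all models for sample i.
        avg = [sum(m[i][c] for m in prob_sets) / n_models for c in range(n_classes)]
        fused.append(max(range(n_classes), key=avg.__getitem__))
    return fused


# Hypothetical softmax outputs of three models for two images (0 = benign, 1 = malignant).
model_a = [[0.6, 0.4], [0.3, 0.7]]
model_b = [[0.4, 0.6], [0.2, 0.8]]
model_c = [[0.7, 0.3], [0.55, 0.45]]

predictions = late_fusion([model_a, model_b, model_c])
print(predictions)
```

In this toy example the models disagree on both images, and the averaged probabilities decide the final class.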

Naturalistic driving studies consist of drivers using their personal vehicles and provide valuable real-world data, but privacy issues must be handled very carefully. Drivers sign a consent form when they elect to participate, but passengers do not, for a variety of practical reasons. However, their privacy must still be protected. One large study includes a blurred image of the entire cabin, which allows reviewers to find passengers in the vehicle; this protects their privacy while still providing a means of answering questions regarding the impact of passengers on driver behavior. A method for automatically counting the passengers would have scientific value for transportation researchers. We investigated different image analysis methods for automatically locating and counting the non-drivers, including simple face detection, fine-tuned methods for image classification, and a published object detection method. We also compared image classification using convolutional neural network and vision transformer backbones. Our studies show that the image classification method appears to work best in terms of absolute performance, although we note that the closed nature of our dataset and the nature of the imagery make the application somewhat niche, and that object detection methods also have advantages. We perform some analysis to support our conclusion.

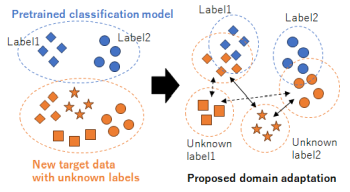

Domain adaptation, which transfers an existing system with teacher labels (source domain) to another system without teacher labels (target domain), has garnered significant interest as a way to reduce human annotation and build AI models efficiently. Open set domain adaptation considers unknown labels in the target domain that were not present in the source domain. Conventional methods treat unknown labels as a single entity, but this assumption may not hold in real-world scenarios. To address this challenge, we propose open set domain adaptation for image classification with multiple unknown labels. Assuming that there exists a discrepancy in the feature space between the known labels in the source domain and the unknown labels in the target domain based on their type, we can leverage clustering to classify the types of unknown labels by considering the pixel-wise feature distances between samples in the target domain and the known labels in the source domain. This enables us to assign pseudo-labels to target samples based on the classification results obtained through unsupervised clustering with an unknown number of clusters. Experimental results show that the accuracy of domain adaptation is improved by re-training using these pseudo-labels in a closed set domain adaptation setting.
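The pseudo-labeling idea in the abstract above can be sketched as follows. This is an illustration under stated assumptions, not the authors' method: it uses Euclidean distance to hypothetical source-class centroids in place of the pixel-wise feature distances, and a simple leader (threshold-based) clustering as one way to cluster without fixing the number of clusters in advance. The names `pseudo_label`, `known_radius`, and `cluster_radius` are made up for the example.

```python
import math
from typing import Dict, List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pseudo_label(
    target_feats: Sequence[Sequence[float]],
    source_centroids: Dict[str, Sequence[float]],
    known_radius: float,
    cluster_radius: float,
) -> List[str]:
    """Assign known labels to targets near a source centroid; cluster the rest.

    Leader clustering (assumed here) opens a new cluster whenever a sample is
    farther than cluster_radius from every existing unknown-cluster leader,
    so the number of unknown classes is not specified beforehand.
    """
    labels: List[str] = []
    leaders: List[Sequence[float]] = []  # one representative per unknown cluster
    for feat in target_feats:
        name, dist = min(
            ((k, euclidean(feat, c)) for k, c in source_centroids.items()),
            key=lambda kv: kv[1],
        )
        if dist <= known_radius:
            labels.append(name)  # close enough to a known source class
            continue
        for idx, leader in enumerate(leaders):
            if euclidean(feat, leader) <= cluster_radius:
                labels.append(f"unknown_{idx}")
                break
        else:
            leaders.append(feat)  # start a new unknown cluster
            labels.append(f"unknown_{len(leaders) - 1}")
    return labels


# Hypothetical 2-D features: two known source classes and five target samples.
centroids = {"cat": (0.0, 0.0), "dog": (5.0, 0.0)}
targets = [(0.2, 0.1), (5.1, -0.2), (0.0, 10.0), (0.3, 10.2), (10.0, 10.0)]
labels = pseudo_label(targets, centroids, known_radius=1.0, cluster_radius=1.0)
print(labels)
```

The resulting labels, known and unknown alike, could then serve as targets for re-training in a closed set setting, as the abstract describes.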

Banding has been regarded as one of the most severe defects affecting the overall image quality in the printing industry. Much research has addressed it, but most of that work has focused on uniform pages or specific test images. Aiming at detecting banding on customers’ content pages, this paper proposes a banding processing pipeline that can automatically detect banding, distinguish periodic from isolated banding, and estimate the periodic interval. In addition, based on the detected banding characteristics, the pipeline predicts the overall quality of printed customer content pages and obtains predictions similar to human perceptual assessment.
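The abstract above does not say how the periodic interval is estimated. As one possible illustration only, the sketch below applies autocorrelation to a hypothetical 1-D banding profile (for example, a per-row mean intensity) and returns the lag with the strongest self-similarity. The function name `estimate_period` and the synthetic profile are assumptions for the example.

```python
from typing import List, Sequence


def estimate_period(profile: Sequence[float], min_lag: int = 2) -> int:
    """Return the lag (in samples) that maximizes the unnormalized autocorrelation.

    Autocorrelation is an assumed technique for this sketch; the paper's
    pipeline may estimate the interval differently.
    """
    n = len(profile)
    mean = sum(profile) / n
    centered: List[float] = [v - mean for v in profile]
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, n // 2 + 1):
        # Sum of products between the profile and a copy shifted by `lag`.
        score = sum(centered[i] * centered[i + lag] for i in range(n - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Synthetic profile with a band every 8 rows (hypothetical data).
profile = [1.0 if i % 8 == 0 else 0.0 for i in range(64)]
period = estimate_period(profile)
print(period)
```

Because the sum is not normalized by the number of overlapping samples, shorter lags are slightly favored, which makes the fundamental period win over its multiples on a clean periodic signal.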

It has been rigorously demonstrated that an end-to-end (E2E) differentiable formulation of a deep neural network can turn a complex recognition problem into a unified optimization task that can be solved by a gradient descent method. Although E2E network optimization yields a powerful fitting ability, the joint optimization of layers is known to potentially lead to situations where layers co-adapt to one another in a complex way that harms generalization ability. This work aims to numerically evaluate, with careful hyperparameter tuning, the generalization ability of a particular non-E2E network optimization approach known as FOCA (Feature-extractor Optimization through Classifier Anonymization), which helps to avoid complex co-adaptation. In this report, we present intriguing empirical results where the non-E2E trained models consistently outperform the corresponding E2E trained models on three image-classification datasets. We further show that E2E network fine-tuning, applied after the feature-extractor optimization by FOCA and the subsequent classifier optimization with the fixed feature extractor, gives no improvement in test accuracy. The source code is available at https://github.com/DensoITLab/FOCA-v1.

Developing machine learning models for image classification problems involves various tasks such as model selection, layer design, and hyperparameter tuning for improving the model performance. However, for deep learning models, insufficient model interpretability makes it infeasible to understand how they make predictions. To facilitate model interpretation, performance analysis at the class and instance levels with model visualization is essential. We herein present an interactive visual analytics system that provides a wide range of performance evaluations of different machine learning models for image classification. The proposed system aims to overcome these challenges by providing visual performance analysis at different levels and visualizing misclassified instances. The system, which comprises five views (including ranking, projection, matrix, and instance list views), enables the comparison and analysis of different models through user interaction. Several use cases of the proposed system are described, and the application of the system to MNIST data is explained. Our demo app is available at https://chanhee13p.github.io/VisMlic/.
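The class-level and instance-level analysis described in the abstract above can be illustrated with a small sketch. It is not the system's code: it simply assumes a confusion matrix, per-class recall, and a list of misclassified instance indices as the quantities a matrix view and an instance list view might display. The function name `class_level_report` and the labels are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def class_level_report(
    y_true: Sequence[int], y_pred: Sequence[int]
) -> Tuple[Counter, Dict[int, float], List[int]]:
    """Return confusion counts, per-class recall, and misclassified indices."""
    # confusion[(t, p)] counts samples of true class t predicted as class p.
    confusion = Counter(zip(y_true, y_pred))
    totals = Counter(y_true)
    recall = {c: confusion[(c, c)] / totals[c] for c in totals}
    misclassified = [i for i, (t, p) in enumerate(zip(y_true, y_pred)) if t != p]
    return confusion, recall, misclassified


# Hypothetical ground truth and predictions for a three-class problem.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]

confusion, recall, misclassified = class_level_report(y_true, y_pred)
print(recall)
print(misclassified)
```

Running the same report for several models and comparing the per-class recall values side by side gives a rough textual analogue of comparing models at the class level.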