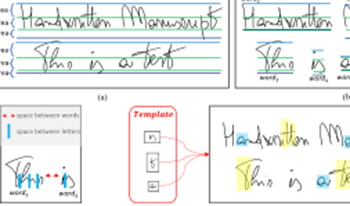

Forensic handwriting examination is a branch of forensic science that aims to examine handwritten documents in order to properly define or hypothesize the manuscript’s author. This analysis involves comparing two or more (digitized) documents through a comprehensive comparison of intrinsic local and global features. If a correlation exists and specific best practices are satisfied, then it is possible to affirm that the documents under analysis were written by the same individual. The need to create sophisticated tools capable of extracting and comparing significant features has led to the development of cutting-edge software with almost entirely automated processes, improving the forensic examination of handwriting and achieving increasingly objective evaluations. This is made possible by algorithmic solutions based on purely mathematical concepts. Machine Learning and Deep Learning models trained with specific datasets could turn out to be the key elements to best solve the task at hand. In this paper, we propose a new and challenging dataset consisting of two subsets: the first consists of 21 documents written both with the classic “pen and paper” approach (and later digitized) and directly on common devices such as tablets; the second consists of 362 handwritten manuscripts by 124 different people, acquired following a specific pipeline. Our study pioneers a comparison between traditionally handwritten documents and those produced with digital tools (e.g., tablets). Preliminary results on the proposed datasets show that 90% classification accuracy can be achieved on the first subset (documents written with pen and paper and later digitized, and documents written on tablets) and 96% on the second portion of the data. The datasets are available at https://iplab.dmi.unict.it/mfs/forensichandwriting-analysis/novel-dataset-2023/.

Determining which processing operations were used to edit an image and the order in which they were applied is an important task in image forensics. Existing approaches to detecting single manipulations have proven effective; however, their performance may deteriorate significantly if the processing occurs in a chain of editing operations. Thus, it is very challenging to detect the processing used in an ordered chain of operations using traditional forensic approaches. First attempts at order-of-operations detection were limited to a fixed number of editing operations and treated feature extraction and order detection as disjoint steps. In this paper, we propose a new data-driven approach to jointly extract editing detection features, detect multiple editing operations, and determine the order in which they were applied. We design a constrained CNN-based classifier that is able to jointly extract low-level conditional fingerprint features related to a sequence of operations as well as identify the order of the operations. Through a set of experiments, we evaluated the performance of our CNN-based approach with different types of residual features commonly used in forensics. Experimental results show that our method outperforms the existing approaches.
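One way to frame joint detection of operations and their order is as a single multi-class problem in which every ordered chain of operations is its own class. The sketch below only enumerates such a label space; the operation names, the `max_len` parameter, and the function name are hypothetical and are not taken from the paper.

```python
from itertools import permutations

def ordered_chain_labels(operations, max_len=2):
    """Enumerate every ordered chain of distinct operations up to max_len.

    The empty chain stands for an unedited image.
    """
    labels = [()]
    for k in range(1, max_len + 1):
        labels.extend(permutations(operations, k))
    return labels

# Hypothetical operation names, used for illustration only.
ops = ["blur", "resample"]
labels = ordered_chain_labels(ops)

# Map each ordered chain to the class index a classifier would predict.
label_to_index = {chain: i for i, chain in enumerate(labels)}
```

With two operations this yields five classes: unedited, each operation alone, and the two possible orderings. The number of classes grows quickly with the number of operations, which is one reason chains of edits are harder to handle than single manipulations.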

Establishing the pedigree of a digital image, such as the type of processing applied to it, is important for forensic analysts because processing generally affects the accuracy and applicability of other forensic tools used for, e.g., identifying the camera (brand) and/or inspecting the image integrity (detecting regions that were manipulated). Given the superior ability of deep convolutional neural networks to learn complex yet compact image representations for numerous problems in steganalysis as well as in forensics, in this article we explore this approach for the task of detecting the processing history of images. Our goal is to build a scalable detector for practical situations in which an image acquired by a camera is processed, downscaled with a wide variety of scaling factors, and JPEG compressed again, since such a processing pipeline is commonly applied, for example, when uploading images to social networks such as Facebook. To allow the network to perform accurately on a wide range of image sizes, we investigate a novel CNN architecture with an inner-product (IP) layer accepting statistical moments of feature maps. The proposed methodology is benchmarked using confusion matrices for three JPEG quality factors.
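The idea of feeding statistical moments of feature maps to a fully connected layer can be illustrated with a short sketch: each channel is reduced to a few scalars, so the resulting vector has the same length regardless of image size. The choice of moments here (mean, variance, minimum, maximum) and the function name are assumptions for illustration, not a description of the architecture in the paper.

```python
import numpy as np

def moment_features(feature_maps):
    """Reduce feature maps of shape (C, H, W) to a vector of length 4 * C.

    Assumed moments per channel: mean, variance, minimum, maximum.
    """
    flat = feature_maps.reshape(feature_maps.shape[0], -1)
    return np.concatenate([
        flat.mean(axis=1),
        flat.var(axis=1),
        flat.min(axis=1),
        flat.max(axis=1),
    ])

rng = np.random.default_rng(0)
# Random arrays stand in for the outputs of a convolutional stack.
small = moment_features(rng.normal(size=(8, 16, 16)))
large = moment_features(rng.normal(size=(8, 64, 48)))
```

Both `small` and `large` have 32 entries even though the spatial sizes differ, so a layer with a fixed input width can follow.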

Current satellite imaging technology enables shooting high-resolution pictures of the ground. Like any other kind of digital image, overhead pictures can be easily forged. However, common image forensic techniques are often developed for consumer camera images, which differ strongly in nature from satellite ones (e.g., compression schemes, post-processing, sensors, etc.). Therefore, many accurate state-of-the-art forensic algorithms are bound to fail if blindly applied to overhead image analysis. Development of novel forensic tools for satellite images is paramount to assess their authenticity and integrity. In this paper, we propose an algorithm for satellite image forgery detection and localization. Specifically, we consider the scenario in which pixels within a region of a satellite image are replaced to add or remove an object from the scene. Our algorithm works under the assumption that no forged images are available for training. Using a generative adversarial network (GAN), we learn a feature representation of pristine satellite images. A one-class support vector machine (SVM) is trained on these features to determine their distribution. Finally, image forgeries are detected as anomalies. The proposed algorithm is validated against different kinds of satellite images containing forgeries of different sizes and shapes.
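The anomaly-detection step can be sketched with scikit-learn's `OneClassSVM`, fitted only on features of pristine data. In this minimal example, synthetic Gaussian vectors stand in for the GAN-derived features, and the feature dimension, the `nu` value, and the kernel settings are assumptions chosen for illustration rather than the settings used in the paper.

```python
import numpy as np
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)

# Stand-in for features of pristine image patches (assumed 16-D).
pristine = rng.normal(0.0, 1.0, size=(500, 16))
# Stand-in for features of forged patches, drawn far from the pristine cloud.
forged = rng.normal(6.0, 1.0, size=(20, 16))

# Fit on pristine features only; no forged examples are used for training.
detector = OneClassSVM(kernel="rbf", gamma="scale", nu=0.05).fit(pristine)

# predict() returns +1 for inliers and -1 for anomalies.
pristine_pred = detector.predict(pristine)
forged_pred = detector.predict(forged)
```

Applying the detector patch by patch and marking patches predicted as `-1` would give a coarse localization map; real forged features will rarely be as cleanly separated as in this synthetic setup.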