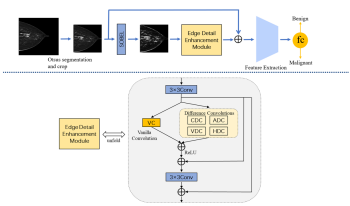

Breast cancer is the leading malignant tumor worldwide, and early diagnosis is crucial for effective treatment. Computer-aided diagnostic models based on deep learning have significantly improved the accuracy and efficiency of medical diagnosis. Tumor edge features are critical for distinguishing benign from malignant lesions, yet existing methods underutilize this edge information, which limits their capacity for early diagnosis. To strengthen the learning of breast lesion features, we propose the enhanced edge feature learning network (EEFL-Net) for mammogram classification. EEFL-Net enhances the learning of pathological features through a Sobel edge detection module and an edge detail enhancement module (EDEM). The Sobel edge detection module identifies and enhances the key edge information. The resulting image then passes through the EDEM, which refines it further and enhances detailed features, thus improving the classification results. Experiments on two public datasets (INbreast and CBIS-DDSM) show that EEFL-Net outperforms previous advanced mammogram classification methods.
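To make the Sobel step concrete, the following is a minimal sketch of a standard Sobel gradient-magnitude filter in NumPy. It is not the EEFL-Net implementation: the function names `filter3x3` and `sobel_magnitude`, the edge-replicated padding, and the use of a single-channel float image are assumptions made for illustration, and the EDEM is not reproduced here.

```python
import numpy as np

# Standard 3x3 Sobel kernels for horizontal and vertical intensity gradients.
SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T


def filter3x3(image, kernel):
    """Cross-correlate a 2-D image with a 3x3 kernel.

    Borders are handled by replicating edge pixels (an assumption of this
    sketch), so the output has the same shape as the input.
    """
    h, w = image.shape
    padded = np.pad(image.astype(np.float64), 1, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def sobel_magnitude(image):
    """Return the per-pixel gradient magnitude sqrt(gx^2 + gy^2)."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    return np.hypot(gx, gy)
```

On an image that is 0 on the left and 1 on the right, `sobel_magnitude` is nonzero only in the two columns adjacent to the step, which is the kind of boundary response an edge-aware classifier could use as an additional input.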

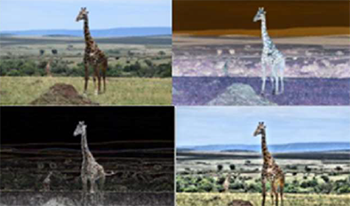

Image classification is used extensively in applications such as satellite imagery, autonomous driving, smartphones, and healthcare. Most of the images used to train classification models can be considered ideal, i.e., free of degradation caused by corrupted pixels in the camera sensor, blur from sudden shake, or compression into a specific format. In this paper, we propose ILIAC, a novel CNN-based architecture for classifying degraded images that combines intermediate-layer knowledge distillation with the cutout data augmentation approach. Our approach achieves mean accuracy improvements of 1.1% and 0.4% across all degradation levels of JPEG and AWGN, respectively, compared to the current state-of-the-art approach. Furthermore, ILIAC is computationally efficient: it is about half the size of the previous state-of-the-art approach in terms of model parameters and GFLOPs. Additionally, we demonstrate that knowledge distillation does not necessarily require a larger teacher network to improve the performance and generalization of a smaller student network for the classification of degraded images.
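The two ingredients named above can be sketched as follows. This is an illustrative sketch, not the ILIAC code: `cutout` follows the common formulation of masking one random square patch with zeros, and `feature_distillation_loss` assumes a mean-squared-error penalty between student and teacher feature maps of equal shape, which the abstract does not specify. Both function names and all parameters are hypothetical.

```python
import numpy as np


def cutout(image, size, rng):
    """Return a copy of `image` with one random square patch set to zero.

    The patch centre is drawn uniformly over the image, so patches near
    the border are clipped and may be smaller than size x size.
    """
    h, w = image.shape[:2]
    cy = int(rng.integers(0, h))
    cx = int(rng.integers(0, w))
    y0, y1 = max(cy - size // 2, 0), min(cy + size // 2, h)
    x0, x1 = max(cx - size // 2, 0), min(cx + size // 2, w)
    out = image.copy()
    out[y0:y1, x0:x1] = 0
    return out


def feature_distillation_loss(student_feat, teacher_feat):
    """Mean-squared error between intermediate feature maps.

    Assumption: both feature maps already have the same shape. In practice
    a projection layer is often needed when channel counts differ.
    """
    diff = np.asarray(student_feat, dtype=np.float64) - np.asarray(teacher_feat, dtype=np.float64)
    return float(np.mean(diff ** 2))
```

A typical training loop would add a weighted `feature_distillation_loss` term to the usual classification loss and apply `cutout` to each training image; the weighting and the choice of layers are design decisions not given in the abstract.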

This research focuses on the benefits of enhancing computer vision through an image pre-processing optimization algorithm in which numerous variations of prevalent image modification tools are applied, independently and in combination, to specific sets of images. The class with the highest returned precision score is then assigned to the feature, often improving both the number of features captured and the precision values. Transformations such as embossing, sharpening, and contrast adjustment can reveal feature edge lines that neural networks previously could not capture, allowing potential increases in overall system accuracy beyond typical manual image pre-processing. Similar to how neural networks determine accuracy among numerous feature characteristics, the enhanced neural network determines the highest classification confidence among the unaltered original images and their variants produced by numerous pre-processing and enhancement techniques.
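The selection step described above can be sketched as a loop over pre-processing variants that keeps the prediction with the highest confidence. This is a minimal sketch under stated assumptions: `classifier` is a hypothetical callable that returns a vector of class probabilities, images are 2-D float arrays in [0, 1], and `adjust_contrast` and `sharpen` are simple stand-ins for whatever image modification tools the system actually uses.

```python
import numpy as np


def adjust_contrast(img, factor=1.5):
    """Scale pixel deviations from the mean intensity, clipped to [0, 1]."""
    mean = img.mean()
    return np.clip((img - mean) * factor + mean, 0.0, 1.0)


def sharpen(img, amount=1.0):
    """Unsharp masking with a 3x3 box blur (edge-replicated borders)."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    blur = sum(padded[dy:dy + h, dx:dx + w]
               for dy in range(3) for dx in range(3)) / 9.0
    return np.clip(img + amount * (img - blur), 0.0, 1.0)


def pick_best_variant(image, classifier, transforms):
    """Classify every variant and return (name, label, confidence) of the best.

    `transforms` maps a variant name to a function image -> image.
    Combined variants can be added as composed functions.
    """
    best = None
    for name, transform in transforms.items():
        probs = np.asarray(classifier(transform(image)))
        label = int(np.argmax(probs))
        confidence = float(probs[label])
        if best is None or confidence > best[2]:
            best = (name, label, confidence)
    return best
```

Including an identity entry such as `{"original": lambda x: x, "contrast": adjust_contrast, "sharpen": sharpen}` ensures the unaltered image competes with its variants, so the selected confidence is never lower than that of the original.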

CMOS image sensors play a vital role in the exponentially growing field of Artificial Intelligence (AI). Applications such as image classification, object detection, and tracking are just some of the many problems now solved with the help of AI, and specifically deep learning. In this work, we target image classification to discern between six categories of fruit: fresh/rotten apples, fresh/rotten oranges, and fresh/rotten bananas. Using images captured with high-speed CMOS sensors along with lightweight CNN architectures, we show results on various edge platforms. Specifically, we show results using ON Semiconductor’s global-shutter, 12 MP, 90 frames-per-second image sensor (XGS-12) and ON Semiconductor’s 13 MP AR1335 image sensor feeding into MobileNetV2, implemented on NVIDIA Jetson platforms. In addition to the data captured with these sensors, we use an open-source fruits dataset to increase the number of training images. For image classification, we train our model on approximately 30,000 RGB images from the six categories of fruit. The model achieves an accuracy of 97% on edge platforms using ON Semiconductor’s 13 MP camera with the AR1335 sensor. In addition to the image classification model, work is in progress to improve the accuracy of object detection using SSD and SSDLite with MobileNetV2 as the feature extractor. In this paper, we show preliminary results from the object detection model for the same six categories of fruit.

In this paper, we propose a new method for printed mottle defect grading. Trained on data scanned from printed images, our deep learning method based on a Convolutional Neural Network (CNN) can classify images with different mottle defect levels. Unlike traditional feature-extraction methods, our method is the first to use a CNN to extract the features automatically, without manual feature design. Several data augmentation methods, such as rotation, flip, zoom, and shift, are also applied to the original dataset. The final network is trained by transfer learning, using a ResNet-34 network pretrained on the ImageNet dataset and connected to fully connected layers. The experimental results show that our approach achieves a 13.16% error rate on the T dataset, which contains a single image content, and a 20.73% error rate on a combined dataset with different contents.
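The four augmentations listed above can be sketched in NumPy as follows. This is an illustrative sketch rather than the pipeline used in the paper: rotation is restricted to multiples of 90 degrees, zoom is a centre crop resized with nearest-neighbour sampling, shifted-in pixels are filled with zeros, and all function names and parameter ranges are assumptions.

```python
import numpy as np


def shift(img, dy, dx):
    """Translate by (dy, dx) pixels, filling exposed pixels with zeros."""
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    src = img[max(-dy, 0):h - max(dy, 0), max(-dx, 0):w - max(dx, 0)]
    y0, x0 = max(dy, 0), max(dx, 0)
    out[y0:y0 + src.shape[0], x0:x0 + src.shape[1]] = src
    return out


def zoom_in(img, factor):
    """Centre-crop by `factor` (>= 1) and resize back to the original size
    with nearest-neighbour sampling."""
    h, w = img.shape[:2]
    ch = max(int(round(h / factor)), 1)
    cw = max(int(round(w / factor)), 1)
    y0, x0 = (h - ch) // 2, (w - cw) // 2
    crop = img[y0:y0 + ch, x0:x0 + cw]
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    return crop[rows][:, cols]


def augment(img, rng, max_shift=2, max_zoom=1.2):
    """Apply a random flip, 90-degree rotation, zoom, and shift.

    Assumes a square image so that rotation preserves the shape.
    """
    if rng.random() < 0.5:
        img = img[:, ::-1]                       # horizontal flip
    img = np.rot90(img, k=int(rng.integers(0, 4)))
    img = zoom_in(img, float(rng.uniform(1.0, max_zoom)))
    dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    return shift(img, dy, dx)
```

Calling `augment(image, np.random.default_rng(seed))` once per training sample yields a differently transformed copy each time, which is one common way to enlarge a small scanned dataset before fine-tuning a pretrained network.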