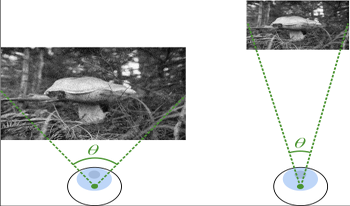

Assessing the quality of images often requires accounting for the viewing conditions: viewing distance, display resolution, and display size. For example, the visibility of compression distortions may differ substantially when a video is viewed on a smartphone from a short distance and when it is viewed on a TV from a large distance. Nonetheless, traditional metrics are limited when applied across a diverse range of users and viewing environments. Metrics that account for these viewing conditions typically rely on contrast sensitivity functions (CSFs). However, it is also possible to rescale the input images to account for a change in viewing distance. In a recent study comparing these two types of metrics, we did not observe any statistically significant difference between them. Hence, in this paper, we use Fourier analysis to study the similarities and differences between the mechanisms of CSF-based and rescaling-based metrics. We compare the behavior of each approach and investigate the correlation between their predictions across viewing distances. Our findings demonstrate a similarity between the two approaches for high-frequency distortions when the viewing distance is increased (the images are downscaled), but not when the viewing distance is decreased (the images are upscaled) or when accounting for low-frequency distortions.
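The rescaling idea above can be illustrated with a minimal sketch. It assumes that viewing conditions are summarized as pixels per visual degree and that the rescaling factor is the ratio of two such values; the function names, parameters, and example display are hypothetical and are not taken from the paper.

```python
import math

def pixels_per_degree(resolution_px, display_width_m, viewing_distance_m):
    """Angular resolution at the display centre, in pixels per visual degree."""
    # Physical width of a single pixel.
    pixel_size_m = display_width_m / resolution_px
    # Visual angle subtended by one pixel, in degrees.
    pixel_angle_deg = math.degrees(
        2.0 * math.atan(pixel_size_m / (2.0 * viewing_distance_m))
    )
    return 1.0 / pixel_angle_deg

def rescale_factor(ppd_reference, ppd_target):
    """Image scale factor that emulates the target condition at the reference one.

    Assumption: a larger viewing distance (higher pixels per degree) is
    emulated by downscaling, so the factor is below 1 in that case.
    """
    return ppd_reference / ppd_target

# Hypothetical display: 1920 pixels across 0.5 m, viewed from 0.6 m and 1.2 m.
near = pixels_per_degree(1920, 0.5, 0.6)
far = pixels_per_degree(1920, 0.5, 1.2)
factor = rescale_factor(near, far)  # close to 0.5: doubling the distance halves the image
```

A CSF-based metric would instead keep the image size fixed and use the pixels-per-degree value to convert spatial frequencies into cycles per degree before weighting them.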

Consumer color cameras employ sensors that do not mimic human cone spectral sensitivities and, more generally, do not meet the Luther condition, since the color correction this would require substantially amplifies noise in the red channel. This raises the question: if cone spectral sensitivities yield low SNR, why did the Human Visual System (HVS) evolve this way? We answer this question by noting that, since modern ISPs (and the ancient HVS) remove virtually all chrominance noise, chrominance denoising artifacts rather than the chrominance noise itself should be considered. While sensor green and blue are reasonable analogs of the human M and S cones, the spectral sensitivity of red is much narrower than that of L and does not overlap much with green. An imager employing L instead of red suffers from increased red noise but is also more sensitive. This allows a high-SNR (L + M)/2 luminance image to be reconstructed and used for denoising. Modeling the color filter array on the human retina, with a higher density of L pixels at the expense of S pixels, further improves the red SNR without the accompanying loss of blue quality being perceptible. The resulting LMS camera outperforms conventional RGB cameras in color accuracy and luminance SNR while being competitive in chrominance quality.

Over the years, various algorithms have been developed that attempt to imitate the Human Visual System (HVS) and evaluate perceptual image quality. However, for certain image distortions, the functionality of the HVS continues to be an enigma, and echoing its behavior remains a challenge (especially for ill-defined distortions). In this paper, we learn to compare the image quality of two registered images with respect to a chosen distortion. Our method takes advantage of the fact that simulating an image distortion and then evaluating relative image quality is at times easier than assessing absolute image quality. Thus, given a pair of images, we look for an optimal dimensionality-reduction function that maps each image to a numerical score, so that the scores reflect the image quality relation (i.e., a less distorted image receives a lower score). We look for this mapping in the form of a Deep Neural Network that minimizes the violation of the image quality order. Subsequently, we extend the method to order a set of images by the predicted level of the chosen distortion. We demonstrate the validity of our method on Latent Chromatic Aberration and Moiré distortions, on synthetic and real datasets.
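One common way to penalize order violations between paired scores is a margin-based hinge loss. The sketch below is an illustration under that assumption: the abstract does not state which loss is used, and the function names and the margin value are hypothetical. It also shows how a set of images could be ordered once each has a predicted score.

```python
import numpy as np

def order_violation_loss(scores_cleaner, scores_worse, margin=1.0):
    """Mean hinge penalty over image pairs (assumed loss, not the paper's).

    A pair contributes zero when the less distorted image scores at least
    `margin` lower than the more distorted one.
    """
    cleaner = np.asarray(scores_cleaner, dtype=float)
    worse = np.asarray(scores_worse, dtype=float)
    return float(np.mean(np.maximum(0.0, margin + cleaner - worse)))

def order_by_predicted_distortion(scores):
    """Indices of the images sorted from least to most distorted."""
    return np.argsort(np.asarray(scores, dtype=float), kind="stable").tolist()

# Correctly ordered pair: no penalty.
ok = order_violation_loss([0.0], [2.0])
# Reversed pair: penalized by the margin plus the score gap.
bad = order_violation_loss([2.0], [0.0])
# Ordering three images by hypothetical network outputs.
ranking = order_by_predicted_distortion([0.7, 0.1, 0.4])
```

In a training setup, the scores would come from the same network applied to both images of a pair, and the loss would be minimized over the network weights.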

Open-source intelligence (OSINT) technologies are becoming increasingly popular with investigative and government agencies, intelligence services, media companies, and corporations [22]. These OSINT technologies use sophisticated techniques and special tools to analyze the continually growing sources of information efficiently [17]. There is a great need for professional training and further education in this field worldwide. Following the overall structure of a professional training concept presented in a previous paper [25], this series of articles offers individual further-training modules for the state-of-the-art OSINT tools that are standard worldwide. The modules presented here are suitable for a professional training program and for an OSINT course in a bachelor's or master's program in computer science or cybersecurity at a university. In Part 1 of this series of four articles, the OSINT tool RiskIQ PassiveTotal [26] is introduced, and its possible applications are explained using concrete examples. Part 2 explains the OSINT tool Censys [27]. This Part 3 deals with Maltego [28], and Part 4 compares the three tools covered in Parts 1-3 [29].

Due to the use of 3D content in various applications, Stereo Image Quality Assessment (SIQA) has attracted growing attention as a means of ensuring a good viewing experience for users. Several methods have thus been proposed in the literature, with deep learning-based methods showing a clear improvement. This paper introduces a new deep learning-based no-reference SIQA method using the cyclopean view hypothesis and human visual attention. First, the cyclopean image is built considering the presence of binocular rivalry, which covers the asymmetric distortion case. Second, the saliency map is computed taking into account the depth information. The saliency map is used to extract patches from the most perceptually relevant regions. Finally, a modified version of the pre-trained VGG-19 is fine-tuned and used to predict the quality score from the selected patches. The performance of the proposed metric has been evaluated on the LIVE 3D Phase I and Phase II databases. Compared with state-of-the-art metrics, our method achieves better results.
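The patch-extraction step can be sketched as follows. This is only an illustration: it assumes the cyclopean image and its saliency map are already available as arrays, and it ranks non-overlapping grid patches by mean saliency. The grid layout, patch size, number of patches, and function name are hypothetical choices, not details from the paper.

```python
import numpy as np

def select_salient_patches(image, saliency, patch=32, k=4):
    """Return the k grid patches with the highest mean saliency.

    `image` and `saliency` are assumed to share the same height and width.
    """
    h, w = saliency.shape
    candidates = []
    # Score every non-overlapping patch on a regular grid.
    for y in range(0, h - patch + 1, patch):
        for x in range(0, w - patch + 1, patch):
            score = float(saliency[y:y + patch, x:x + patch].mean())
            candidates.append((score, y, x))
    # Most salient patches first (stable sort keeps grid order for ties).
    candidates.sort(key=lambda c: c[0], reverse=True)
    top = candidates[:k]
    patches = [image[y:y + patch, x:x + patch] for _, y, x in top]
    coords = [(y, x) for _, y, x in top]
    return patches, coords

# Toy example: only the bottom-right quadrant is salient.
image = np.arange(64 * 64).reshape(64, 64)
saliency = np.zeros((64, 64))
saliency[32:, 32:] = 1.0
patches, coords = select_salient_patches(image, saliency, patch=32, k=1)
```

The selected patches would then be passed to the quality-prediction network, and the per-patch predictions combined into a single score.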