Conventional image quality metrics (IQMs), such as PSNR and SSIM, are designed for perceptually uniform gamma-encoded pixel values and cannot be directly applied to perceptually non-uniform linear high-dynamic-range (HDR) colors. Similarly, most of the available datasets consist of standard-dynamic-range (SDR) images collected in standard and possibly uncontrolled viewing conditions. Popular pre-trained neural networks are likewise intended for SDR inputs, restricting their direct application to HDR content. On the other hand, training HDR models from scratch is challenging due to limited available HDR data. In this work, we explore more effective approaches for training deep learning-based models for image quality assessment (IQA) on HDR data. We leverage networks pre-trained on SDR data (source domain) and re-target these models to HDR (target domain) with additional fine-tuning and domain adaptation. We validate our methods on the available HDR IQA datasets, demonstrating that models trained with our combined recipe outperform previous baselines, converge much faster, and reliably generalize to HDR inputs.
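To illustrate why an SDR metric such as PSNR cannot be applied directly to linear HDR values, the following minimal sketch encodes absolute luminance with the SMPTE ST 2084 (PQ) curve before computing PSNR. PQ is used here only as one well-known perceptual encoding; the abstract does not say which encoding, if any, the authors use, and the function names `pq_encode`, `psnr`, and `pq_psnr` are illustrative assumptions.

```python
import math

# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(luminance_cd_m2):
    """Map absolute luminance (0..10000 cd/m^2) to a PQ signal in [0, 1]."""
    y = min(max(luminance_cd_m2 / 10000.0, 0.0), 1.0)
    yp = y ** M1
    return ((C1 + C2 * yp) / (1.0 + C3 * yp)) ** M2


def psnr(reference, test, peak):
    """Plain PSNR over two equally long sequences of pixel values."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


def pq_psnr(reference_cd_m2, test_cd_m2):
    """PSNR computed on PQ-encoded values instead of linear luminance."""
    ref = [pq_encode(v) for v in reference_cd_m2]
    tst = [pq_encode(v) for v in test_cd_m2]
    return psnr(ref, tst, peak=1.0)


# The same 1 cd/m^2 error in a dark patch and in a bright patch.
dark_ref, dark_test = [1.0], [2.0]
bright_ref, bright_test = [1000.0], [1001.0]

# Linear PSNR treats both errors as equally severe ...
linear_dark = psnr(dark_ref, dark_test, peak=10000.0)
linear_bright = psnr(bright_ref, bright_test, peak=10000.0)

# ... whereas PQ-encoded PSNR penalizes the dark-patch error more heavily.
encoded_dark = pq_psnr(dark_ref, dark_test)
encoded_bright = pq_psnr(bright_ref, bright_test)
```

On linear values the two errors receive the same score, although a doubling of luminance in a dark region is far more visible than a 0.1% change in a highlight. After encoding, the dark-patch error yields the lower PSNR.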

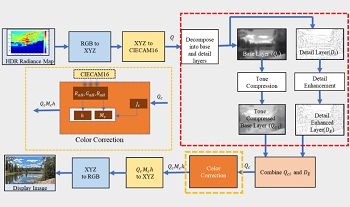

A significant challenge in tone mapping is to preserve the perceptual quality of high dynamic range (HDR) images when mapping them to standard dynamic range (SDR) displays. Most tone mapping operators (TMOs) compress the dynamic range without considering the surround viewing conditions (average, dim, and dark), leading to unsatisfactory perceptual quality of the tone-mapped images. To address this issue, this work focuses on utilizing the CIECAM16 perceptual correlates of brightness, colorfulness, and hue. The proposed model compresses the perceptual brightness and transforms the colors from HDR images using CIECAM16 color adaptations under display conditions. The brightness compression parameter was modeled via a psychophysical experiment. The proposed model was evaluated using two psychophysical experimental datasets (the Rochester Institute of Technology (RIT) and Zhejiang University (ZJU) datasets).

A lightweight learning-based exposure bracketing strategy is proposed in this paper for high dynamic range (HDR) imaging without access to camera RAW. Some low-cost, power-efficient cameras, such as webcams, video surveillance cameras, sport cameras, mid-tier cellphone cameras, and navigation cameras on robots, can only provide access to 8-bit low dynamic range (LDR) images. Exposure fusion is a classical approach to capture HDR scenes by fusing images taken with different exposures into an 8-bit tone-mapped HDR image. A key question is what the optimal set of exposure settings is to cover the scene dynamic range and achieve a desirable tone. The proposed lightweight neural network predicts these exposure settings for a 3-shot exposure bracketing, given the input irradiance information from 1) the histograms of an auto-exposure LDR preview image, and 2) the maximum and minimum levels of the scene irradiance. By avoiding the processing of preview image streams and the circuitous route of first estimating the scene HDR irradiance and then tone-mapping to 8-bit images, the proposed method gives a more practical HDR enhancement for real-time and on-device applications. Experiments on a number of challenging images reveal the advantages of our method in comparison with other state-of-the-art methods, both qualitatively and quantitatively.
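The fusion step mentioned above can be sketched as follows. This is not the proposed network, which predicts the exposure settings; it only illustrates how a 3-shot bracket could be merged per pixel using a Gaussian "well-exposedness" weight in the spirit of classical exposure fusion. The function names, the choice of `sigma=0.2`, and the omission of contrast and saturation weights and of multi-scale blending are all simplifying assumptions.

```python
import math


def well_exposedness(value, sigma=0.2):
    """Weight that favors pixel values near mid-gray (0.5) in a [0, 1] range."""
    return math.exp(-((value - 0.5) ** 2) / (2.0 * sigma ** 2))


def fuse_exposures(exposures, eps=1e-12):
    """Fuse equally sized grayscale exposures (lists of values in [0, 1]).

    Each output pixel is the weighted average of the corresponding input
    pixels, with weights given by well_exposedness. The small eps keeps the
    normalization well defined even if every weight underflows.
    """
    n_pixels = len(exposures[0])
    fused = []
    for i in range(n_pixels):
        weights = [well_exposedness(img[i]) + eps for img in exposures]
        total = sum(weights)
        fused.append(sum(w * img[i] for w, img in zip(weights, exposures)) / total)
    return fused


# Hypothetical 3-shot bracket of a two-pixel scene (under, normal, over).
under = [0.02, 0.40]
normal = [0.50, 0.90]
over = [0.98, 1.00]
fused = fuse_exposures([under, normal, over])
```

For the first pixel the under- and over-exposed samples receive equal, small weights, so the fused value stays at the well-exposed 0.5. For the second pixel the short exposure dominates because it is the only sample near mid-gray.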

Content created in High Dynamic Range (HDR) and Wide Color Gamut (WCG) is becoming more ubiquitous, driving the need for reliable tools for evaluating quality across the imaging ecosystem. One of the simplest techniques to measure the quality of any video system is to measure the color errors. Traditional color difference metrics such as ΔE00 and the newer HDR-specific metrics such as ΔEZ and ΔEITP compute color differences on a pixel-by-pixel basis and therefore do not account for the spatial effects (optical) and active processing (neural) of the human visual system. In this work, we improve upon the per-pixel ΔEITP color difference metric by performing a spatial extension similar to what was done during the design of S-CIELAB. We quantified the performance using four standard evaluation procedures on four publicly available HDR and WCG image databases and found that the proposed metric shows markedly improved agreement with subjective scores compared with existing per-pixel color difference metrics.
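For reference, the per-pixel ΔEITP of ITU-R BT.2124 is 720·sqrt(ΔI² + ΔT² + ΔP²) with T = 0.5·CT and P = CP. The sketch below computes it and then shows the general idea of a spatial extension by low-pass filtering each channel before taking the difference. The 3×3 box filter applied directly to I, CT, and CP is a crude, hypothetical stand-in chosen for brevity; it is not the filter design of the proposed metric or of S-CIELAB.

```python
import math


def delta_e_itp(ref, test):
    """Per-pixel dE_ITP between two (I, CT, CP) triplets."""
    d_i = ref[0] - test[0]
    d_t = 0.5 * (ref[1] - test[1])  # T is CT scaled by 0.5
    d_p = ref[2] - test[2]
    return 720.0 * math.sqrt(d_i * d_i + d_t * d_t + d_p * d_p)


def box_blur(channel):
    """3x3 mean filter with edge clamping (hypothetical spatial pre-filter)."""
    height, width = len(channel), len(channel[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), height - 1)
                    xx = min(max(x + dx, 0), width - 1)
                    acc += channel[yy][xx]
            out[y][x] = acc / 9.0
    return out


def spatial_delta_e_itp(ref_channels, test_channels):
    """Filter each of the three 2D channels (I, CT, CP), then take dE_ITP.

    Returns a 2D error map with the same size as the input channels.
    """
    ref_f = [box_blur(c) for c in ref_channels]
    test_f = [box_blur(c) for c in test_channels]
    height, width = len(ref_f[0]), len(ref_f[0][0])
    return [
        [
            delta_e_itp(
                tuple(c[y][x] for c in ref_f),
                tuple(c[y][x] for c in test_f),
            )
            for x in range(width)
        ]
        for y in range(height)
    ]
```

With this kind of pre-filtering, an isolated single-pixel error is averaged with its neighbors and contributes less than the same error spread over a large region, which is the qualitative behavior a spatial extension is meant to capture.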

There is an increasing number of databases describing subjective quality responses for HDR (high dynamic range) imagery with various distortions. The dominant distortions across the databases are those that arise from video compression, which are primarily perceived as achromatic, but there are some chromatic distortions due to 4:2:2 and other chromatic sub-sampling. Tone mapping from the source HDR levels to various levels of reduced-capability SDR (standard dynamic range) is also included in these databases. While most of these distortions are achromatic, tone mapping can cause changes in saturation and hue angle when saturated colors are in the upper hull of the color space. In addition, there is one database that specifically looked at color distortions in an HDR-WCG (wide color gamut) space. From these databases we can test the improvements to well-known quality metrics if they are applied in the newly developed perceptual color spaces (i.e., representations) specifically designed for HDR and WCG. We present results from comparing the subjective quality scores in these databases with quality computed using the new color spaces of Jzazbz and ICTCP, as well as the commonly used SDR color space of CIELAB.

Proposed for the first time is a novel calibration-empowered minimalistic multi-exposure image processing technique that uses measured sensor pixel voltage output and exposure time factor limits for robust extension of the camera linear dynamic range. The technique exploits the best linear response region of an overall nonlinear response image sensor to robustly recover, via minimal-count multi-exposure image fusion, the true and precise scaled High Dynamic Range (HDR) irradiance map. CMOS sensor-based experiments using a measured Low Dynamic Range (LDR) 44 dB linear region for the technique with a minimum of 2 multi-exposure images provide robust recovery of 78 dB HDR low-contrast, highly calibrated test targets.
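The figures quoted above can be checked with a short back-of-the-envelope calculation. The sketch assumes the 20·log10 convention for dynamic range and an idealized model in which each exposure contributes one 44 dB linear window and consecutive windows are stitched without overlap or gaps; the abstract does not state the exposure-time ratio actually used, so the value computed here is only the minimum implied by that model.

```python
import math


def db_to_ratio(db):
    """Convert a dynamic range in dB to a linear ratio (20*log10 convention)."""
    return 10.0 ** (db / 20.0)


def min_exposures(target_db, window_db):
    """Smallest number of linear windows needed to span the target range."""
    return math.ceil(target_db / window_db)


linear_region_db = 44.0  # measured linear region of the sensor (from the text)
target_db = 78.0         # recovered HDR range (from the text)

exposure_count = min_exposures(target_db, linear_region_db)
gap_db = target_db - linear_region_db          # range the 2nd exposure must add
min_exposure_ratio = db_to_ratio(gap_db)       # about a 50x exposure-time ratio
```

Under these assumptions two exposures suffice, since 2 × 44 dB = 88 dB exceeds 78 dB, and the second exposure has to be shifted by at least 34 dB relative to the first.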

Experimentally demonstrated for the first time is Coded Access Optical Sensor (CAOS) camera empowered robust and true white light High Dynamic Range (HDR) scene low contrast target image recovery over the full linear dynamic range. The 90 dB linear HDR scene uses a 16 element custom designed test target with low contrast 6 dB step scaled irradiances. Such camera performance is highly sought after in catastrophic failure avoidance mission critical HDR scenarios with embedded low contrast targets.
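The stated 90 dB range is consistent with the target geometry: 16 elements separated by 6 dB steps give 15 steps, i.e. 15 × 6 dB = 90 dB. The sketch below lists the relative irradiances of such a target, assuming the 20·log10 convention and uniform steps from the brightest to the dimmest element; the variable names are illustrative.

```python
n_elements = 16   # from the text
step_db = 6.0     # from the text

# Total span between the brightest and dimmest target element.
total_span_db = (n_elements - 1) * step_db

# Relative irradiance of each element, normalized to the brightest one.
relative_irradiance = [10.0 ** (-(k * step_db) / 20.0) for k in range(n_elements)]

# Each 6 dB step is roughly a factor of two in irradiance.
step_ratio = relative_irradiance[0] / relative_irradiance[1]
brightest_to_dimmest = relative_irradiance[0] / relative_irradiance[-1]
```

Adjacent elements differ by only about a factor of two, which is why the target is described as low contrast, while the ratio between the brightest and dimmest elements exceeds 30,000:1.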

A gamut compression algorithm (GCA) and a gamut extension algorithm (GEA) were proposed based on the concept of vividness. Their performance was further investigated via two psychological experiments together with some other commonly used gamut mapping algorithms (GMAs). In addition, different uniform colour spaces (UCSs) were also evaluated in the experiments, including CIELAB, CAM02-UCS and a newly proposed UCS, Jzazbz. The results showed that the new GCA and GEA outperformed all the other GMAs and that Jzazbz was a promising UCS in the field of gamut mapping.

We improve High Dynamic Range (HDR) Image Quality Assessment (IQA) using a full-reference approach that combines results from various quality metrics (HDR-CQM). We combine metrics designed for different applications, such as HDR, SDR and color difference measures, in a single unifying framework using simple linear regression techniques and other non-linear machine learning (ML) based approaches. We find that a non-linear combination of scores from different quality metrics using a support vector machine predicts better than other techniques such as random forest, random trees, multilayer perceptron or a radial basis function network. To improve performance and reduce the complexity of the proposed approach, we use the Sequential Floating Selection technique to select a subset of metrics from a list of quality metrics. We evaluate the performance on two publicly available calibrated databases with different types of distortion and demonstrate improved performance using HDR-CQM as compared to several existing IQA metrics. We also show the generality and robustness of our approach using cross-database evaluation.
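The simplest variant mentioned above, a linear combination of metric scores, can be sketched as an ordinary least-squares fit. This is only the linear baseline, not the support vector machine that the abstract reports as the best performer, and it omits the metric-selection step. The function names and the normal-equation solver are illustrative assumptions.

```python
def fit_linear_combination(metric_scores, subjective_scores):
    """Least-squares weights mapping per-image metric scores to subjective scores.

    metric_scores: list of tuples, one tuple of metric values per image.
    subjective_scores: list of subjective scores, one per image.
    Returns one weight per metric followed by a bias term.
    """
    rows = [list(r) + [1.0] for r in metric_scores]  # append bias column
    n = len(rows[0])
    # Normal equations A w = b with A = X^T X and b = X^T y.
    a = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    b = [sum(r[i] * y for r, y in zip(rows, subjective_scores)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    weights = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(a[r][c] * weights[c] for c in range(r + 1, n))
        weights[r] = (b[r] - tail) / a[r][r]
    return weights


def predict(weights, scores):
    """Combined quality score for one image from its individual metric scores."""
    return sum(w * s for w, s in zip(weights, scores)) + weights[-1]
```

In practice the weights would be fitted on one calibrated database and evaluated on held-out images or on a different database, mirroring the cross-database evaluation described in the abstract.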

Viewers of high dynamic range television (HDR, HDR-TV) expect a comfortable viewing experience with significantly brighter highlights and improved details of darker areas on a brighter display. However, extremely bright images on an HDR display are potentially undesirable and lead to an uncomfortable viewing experience. To avoid these issues, we require specific production guidelines for subjective brightness to ensure brightness consistency between and within programs. To create such production guidelines, it is necessary to develop an objective metric for subjective brightness in HDR-TVs. A previous study reports that the subjective brightness is proportional to the average of displayed pixel luminance levels. However, other parameters can affect the subjective brightness. Therefore, we conducted a subjective evaluation test using specific test images to identify the factors that affect the perceived overall brightness of HDR images. Our results indicated that the positions and distributions of displayed pixel luminance levels in the video affect brightness, in addition to the average of displayed pixel luminance levels. The study is expected to contribute to the development of an objective metric for subjective brightness.
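A small example shows why the average luminance alone cannot capture the effect of luminance distribution. The two toy frames below are synthetic and have the same mean luminance but very different distributions. The standard deviation is used only as a hypothetical descriptor of the distribution; it is not a metric proposed by the study.

```python
import math


def mean_luminance(frame):
    """Average displayed luminance (cd/m^2) over all pixels of a frame."""
    return sum(frame) / len(frame)


def luminance_std(frame):
    """Population standard deviation of pixel luminance (illustrative only)."""
    mean = mean_luminance(frame)
    return math.sqrt(sum((v - mean) ** 2 for v in frame) / len(frame))


# Synthetic 10-pixel frames, luminance in cd/m^2.
uniform_frame = [100.0] * 10              # evenly lit
highlight_frame = [910.0] + [10.0] * 9    # one bright highlight on a dark scene

same_average = mean_luminance(uniform_frame) == mean_luminance(highlight_frame)
spread_uniform = luminance_std(uniform_frame)
spread_highlight = luminance_std(highlight_frame)
```

A metric based only on the average would rate both frames identically, whereas any distribution-aware term separates them, which is in line with the finding that distribution and position matter.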