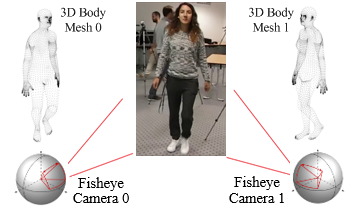

Fisheye cameras, which provide omnidirectional vision with a field of view (FoV) of up to 360°, allow a multi-camera system to cover a given space with fewer cameras. The main objective of this paper is to develop fast and accurate algorithms for the automatic calibration of multiple fisheye cameras that fully utilize human semantic information instead of predetermined calibration patterns or objects. The proposed automatic calibration method detects humans in the equirectangular or spherical image of each fisheye camera. For each detected human, the image region inside the bounding box is converted into an undistorted image patch with a normal FoV by a perspective mapping parameterized by the associated view angle. 3D human body meshes are then estimated by a pretrained Human Mesh Recovery (HMR) model, and the vertices of each mesh are projected onto the 2D image plane of the corresponding image patch. A Structure-from-Motion (SfM) algorithm is used to reconstruct 3D shapes from each camera pair and to estimate the essential matrix. The camera extrinsic parameters are then calculated from the estimated essential matrix and the corresponding perspective mappings. Assuming that the pose of one main camera in the world coordinate system is known, the poses of all other cameras in the multi-camera system can be calculated. Fisheye camera pairs rotated by different angles relative to each other are evaluated using (1) the 2D projection error and (2) the rotation and translation errors as performance metrics. The proposed method is shown to achieve more accurate calibration than methods based on appearance-based feature extractors, such as the Scale-Invariant Feature Transform (SIFT), or on deep learning-based human joint estimators, such as MediaPipe.
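The perspective mapping that turns a region of an equirectangular image into an undistorted, normal-FoV patch can be sketched as below. This is a minimal NumPy illustration under assumed conventions, not the paper's implementation: the view angle is represented by a hypothetical `(yaw, pitch)` pair, the patch is an ideal pinhole image with horizontal FoV `fov_deg`, camera axes are x right, y down, z forward, and sampling is nearest-neighbour. The names `rotation_yaw_pitch` and `equirect_to_perspective` are illustrative.

```python
import numpy as np

def rotation_yaw_pitch(yaw, pitch):
    """Rotation taking patch-frame rays to fisheye-camera-frame rays.
    Assumed convention: x right, y down, z forward; positive yaw turns right,
    positive pitch looks up."""
    cy, sy = np.cos(yaw), np.sin(yaw)
    cp, sp = np.cos(pitch), np.sin(pitch)
    r_yaw = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    r_pitch = np.array([[1.0, 0.0, 0.0], [0.0, cp, -sp], [0.0, sp, cp]])
    return r_yaw @ r_pitch

def equirect_to_perspective(equi, yaw, pitch, fov_deg, out_size):
    """Sample an undistorted pinhole patch of size out_size = (width, height)
    from an equirectangular image (nearest-neighbour lookup for brevity).
    Assumed equirectangular layout: column 0 at longitude -pi, top row at
    latitude -pi/2 (up)."""
    h_eq, w_eq = equi.shape[:2]
    w, h = out_size
    f = 0.5 * w / np.tan(np.radians(fov_deg) / 2)  # focal length in pixels
    u, v = np.meshgrid(np.arange(w) + 0.5, np.arange(h) + 0.5)  # pixel centres
    rays = np.stack([(u - w / 2) / f, (v - h / 2) / f, np.ones_like(u)], axis=-1)
    rays /= np.linalg.norm(rays, axis=-1, keepdims=True)
    rays = rays @ rotation_yaw_pitch(yaw, pitch).T  # rotate into the camera frame
    lon = np.arctan2(rays[..., 0], rays[..., 2])     # longitude in (-pi, pi]
    lat = np.arcsin(np.clip(rays[..., 1], -1.0, 1.0))  # latitude, positive downwards
    col = ((lon / (2 * np.pi) + 0.5) * w_eq).astype(int) % w_eq
    row = np.clip(((lat / np.pi + 0.5) * h_eq).astype(int), 0, h_eq - 1)
    return equi[row, col]
```

In a full pipeline, the yaw and pitch could be derived from the bounding-box centre (column to longitude, row to latitude), and bilinear interpolation would usually replace the nearest-neighbour lookup.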
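Once corresponding mesh vertices have been projected into two image patches, the essential matrix and the relative pose between the patches can be estimated with the textbook linear eight-point algorithm followed by a cheirality check. The sketch below is a NumPy illustration of that standard technique, not the paper's SfM pipeline (which may use a more robust estimator, e.g. with RANSAC). It assumes noise-free correspondences already expressed in normalized, intrinsics-removed patch coordinates; the function names are hypothetical.

```python
import numpy as np

def estimate_essential(x1, x2):
    """Linear eight-point estimate of E such that x2_h^T E x1_h = 0.
    x1, x2: (N, 2) normalized image coordinates, N >= 8."""
    h1 = np.hstack([x1, np.ones((len(x1), 1))])
    h2 = np.hstack([x2, np.ones((len(x2), 1))])
    # Each correspondence gives one linear equation in the 9 entries of E (row-major).
    A = np.stack([np.kron(b, a) for a, b in zip(h1, h2)])
    E = np.linalg.svd(A)[2][-1].reshape(3, 3)
    # Enforce the essential-matrix structure: two equal singular values, one zero.
    U, _, Vt = np.linalg.svd(E)
    return U @ np.diag([1.0, 1.0, 0.0]) @ Vt

def triangulate(P1, P2, a, b):
    """Linear (DLT) triangulation of one correspondence a <-> b."""
    A = np.stack([a[0] * P1[2] - P1[0], a[1] * P1[2] - P1[1],
                  b[0] * P2[2] - P2[0], b[1] * P2[2] - P2[1]])
    X = np.linalg.svd(A)[2][-1]
    return X[:3] / X[3]

def recover_pose(E, x1, x2):
    """Choose (R, t) with X_cam2 = R X_cam1 + t among the four decompositions
    of E, keeping the one that puts the most points in front of both cameras."""
    U, _, Vt = np.linalg.svd(E)
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
    best, best_count = None, -1
    for R in (U @ W @ Vt, U @ W.T @ Vt):
        for t in (U[:, 2], -U[:, 2]):
            P2 = np.hstack([R, t[:, None]])
            count = 0
            for a, b in zip(x1, x2):
                X = triangulate(P1, P2, a, b)
                count += int(X[2] > 0 and (R @ X + t)[2] > 0)
            if count > best_count:
                best, best_count = (R, t), count
    return best  # t has unit norm: its absolute scale is not observable from E
```

Because `t` is returned with unit norm, an extra cue (for example, a known baseline or a metric body size) would be needed to fix the absolute scale; the abstract does not state how this is handled.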
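Turning a patch-to-patch pose into camera extrinsics, and then into world poses via the main camera, is a composition of rigid transforms. As a sketch, assume (hypothetically) that each perspective mapping is a pure rotation with `d_cam = R_view @ d_patch`, that the patch pose satisfies `X_p2 = R_patch @ X_p1 + t_patch`, and that the translation scale has already been fixed. Then `R_cam = R_view2 @ R_patch @ R_view1.T` and `t_cam = R_view2 @ t_patch`; with the main camera's known pose `X_main = R_main @ X_world + t_main`, the second camera's world pose is `(R_cam @ R_main, R_cam @ t_main + t_cam)`. The function names below are illustrative.

```python
import numpy as np

def camera_relative_pose(R_patch, t_patch, R_view1, R_view2):
    """Lift a patch-to-patch pose to a camera-to-camera pose.
    Assumes camera rays relate to patch rays by d_cam = R_view @ d_patch."""
    R_cam = R_view2 @ R_patch @ R_view1.T
    t_cam = R_view2 @ t_patch
    return R_cam, t_cam

def chain_to_world(R_main, t_main, R_rel, t_rel):
    """World pose of another camera, given the main camera's known pose
    (X_main = R_main X_world + t_main) and X_cam = R_rel X_main + t_rel."""
    return R_rel @ R_main, R_rel @ t_main + t_rel
```

For systems with more than two cameras, the same chaining can be applied along any sequence of calibrated pairs that starts at the main camera.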
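The abstract does not spell out how the metrics are defined. One common choice, assumed here rather than taken from the paper, is the geodesic angle of the residual rotation for the rotation error, the angle between translation directions for the translation error (since essential-matrix translation has no absolute scale), and the mean pixel distance between projected and observed points for the 2D projection error. The function names and the pinhole intrinsics `K` are hypothetical.

```python
import numpy as np

def rotation_error_deg(R_est, R_gt):
    """Geodesic angle (degrees) of the residual rotation R_est^T R_gt."""
    cos = (np.trace(R_est.T @ R_gt) - 1.0) / 2.0
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))

def translation_error_deg(t_est, t_gt):
    """Angle (degrees) between translation directions; invariant to scale."""
    cos = t_est @ t_gt / (np.linalg.norm(t_est) * np.linalg.norm(t_gt))
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))

def projection_error(X, R, t, K, uv):
    """Mean pixel distance between observed points uv (N, 2) and the pinhole
    projection of 3D points X (N, 3) under pose (R, t) and intrinsics K."""
    Xc = X @ R.T + t                  # world -> camera coordinates
    proj = Xc @ K.T                   # homogeneous pixel coordinates
    proj = proj[:, :2] / proj[:, 2:]  # perspective division
    return np.mean(np.linalg.norm(proj - uv, axis=1))
```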