Transparent and translucent objects transmit part of the incident radiant flux, permitting a viewer to see the background through them. How the human visual system perceives transmittance and assigns it to flat filters has been a topic of scholarly interest. However, these studies have usually been limited to the role of the filter's optical properties. Readers may have noticed in daily life that objects just behind frosted glass are discernible, whereas objects even slightly farther behind are virtually invisible. The reason for this lies in geometrical optics, and it has mostly been overlooked or taken for granted from a perceptual perspective. In this work, we investigated whether the distance between a translucent filter and a background affects the filter's perceived transmittance, or whether observers account for this distance and assign transmittance to the filters consistently. Furthermore, we explored whether this trend holds across a broad range of materials. For this purpose, we created an image dataset in which a broad range of real, physical flat filters were photographed at different distances from the background. We then conducted a psychophysical experiment to explore the link between object-to-background distance and perceived transmittance. We found that the results vary and depend on the filter's optical properties: while transmittance was judged consistently for some filters, for others it was strongly underestimated when the background moved farther away.
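
As a simplified geometric sketch of why the filter-to-background distance matters (an illustrative assumption, not the model used in this work): suppose the filter scatters transmitted light into a cone of half-angle $\theta$. Each point on the filter then collects light from a background patch of radius roughly

$$
r(d) \approx d \tan\theta ,
$$

where $d$ is the filter-to-background distance, so background detail finer than about $r$ is averaged out. For an assumed $\theta = 10^\circ$ ($\tan\theta \approx 0.176$), a background 1 cm behind the filter is blurred over $r \approx 0.18$ cm, while one 20 cm behind is blurred over $r \approx 3.5$ cm.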

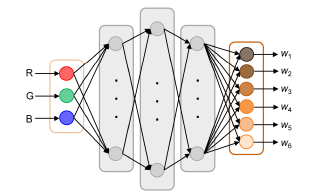

Accurate reproduction of human skin color requires knowledge of skin spectral reflectance data, which are often unavailable. Traditionally, spectral reconstruction algorithms attempt to recover spectra from commonly available RGB camera responses. Among the various methods employed, polynomial regression has proven beneficial for skin spectral reconstruction. Despite their simplicity and interpretability, however, such nonlinear regression methods may deliver suboptimal results as the size of the data increases. Furthermore, they are prone to overfitting and require carefully tuned regularization hyperparameters. Another challenge in skin spectral reconstruction is the lack of high-quality skin hyperspectral databases available for research. In this paper, we gather skin spectral data from publicly available databases and extract the effective dimensions of these spectra using principal component analysis (PCA). We show that plausible skin spectra can be accurately modeled as a linear combination of six spectral bases. We propose a new approach that uses neural networks to estimate the weights of this linear combination from RGB data, yielding the reconstructed spectra. Furthermore, we use a daylight model to estimate a metamer of the underlying scene illumination. We demonstrate that our proposed model can effectively reconstruct facial skin spectra and render facial appearance with high color fidelity.
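
The PCA-plus-linear-combination model described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the 31-band wavelength sampling is an assumption, the demo data are synthetic rather than real skin spectra, and the neural network that predicts the six weights from RGB is not shown (here the weights are obtained by projecting known spectra onto the basis).

```python
import numpy as np


def fit_pca_basis(spectra: np.ndarray, n_components: int = 6):
    """Return the mean spectrum and the leading principal components.

    spectra: shape (n_samples, n_wavelengths), one reflectance spectrum per row.
    """
    mean = spectra.mean(axis=0)
    centered = spectra - mean
    # Rows of vt are orthonormal principal directions, ordered by singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:n_components]


def project(spectra: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Weights of the linear combination (one row of n_components per spectrum)."""
    return (spectra - mean) @ basis.T


def reconstruct(weights: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Spectrum = mean + sum_k w_k * basis_k."""
    return mean + weights @ basis


if __name__ == "__main__":
    # Synthetic stand-in data (NOT real skin spectra): 50 spectra over
    # 31 bands (e.g. 400-700 nm in 10 nm steps), built from 6 latent factors.
    rng = np.random.default_rng(0)
    latent = rng.normal(size=(50, 6))
    factors = rng.normal(size=(6, 31))
    spectra = 0.4 + 0.01 * latent @ factors

    mean, basis = fit_pca_basis(spectra, n_components=6)
    weights = project(spectra, mean, basis)
    error = np.abs(reconstruct(weights, mean, basis) - spectra).max()
    print(f"max reconstruction error: {error:.2e}")
```

In the approach described above, a network trained to output the six weights from RGB would take the place of `project` at inference time, with `reconstruct` unchanged. Truncating to six components discards any remaining variance, so reconstruction is exact only when the data are effectively six-dimensional, as in this synthetic demo.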

When colors are visualized on websites or in apps, color calibration is not feasible for consumer smartphones and tablets: the vast majority of consumers do not have the time, equipment, or expertise to calibrate their displays. For such situations, we recently developed MDCIM (Mobile Display Characterization and Illumination Model). Using optics-based image processing, it aims to improve digital color representation as assessed by human observers, taking into account display-specific parameters and local lighting conditions. In previous publications, we determined model parameters for four mobile displays: one OLED display (Samsung Galaxy S4) and three LCD displays (iPad Air 2 and the 2017 and 2018 iPad models). Here, we investigate the performance of another OLED display, the iPhone XS Max. In a psychophysical experiment, we show that colors generated by the MDCIM method are perceived as much better matches to physical samples than colors generated by the default method, which relies on the sRGB color space and the color management system implemented by the smartphone manufacturer. With the MDCIM method, the percentage of reasonable-to-good color matches increases from 3.1% to 85.9%, while the percentage of incorrect color matches drops from 83.8% to 3.6%.