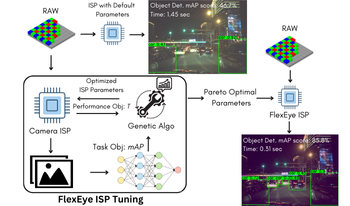

As AI becomes more prevalent, edge devices are increasingly constrained by limited resources and the high computational demands of deep learning (DL) applications. In such settings, quality scalability can offer significant benefits by adjusting the computational load to the available resources. Traditional image signal processor (ISP) tuning methods prioritize maximizing intelligence performance, such as classification accuracy, while neglecting critical system constraints such as latency and power dissipation. To address this gap, we introduce FlexEye, an application-specific, quality-scalable ISP tuning framework that uses ISP parameters as a control knob for quality of service (QoS), enabling a trade-off between quality and performance. Experimental results demonstrate up to a 6% improvement in object detection accuracy and a 22.5% reduction in ISP latency compared to the state of the art. We also evaluate an instance segmentation task, where a 1.2% accuracy improvement is attained together with a 73% latency reduction.
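To make the idea of ISP parameters as a QoS knob concrete, the following is a minimal sketch of how a runtime might choose among pre-tuned ISP configurations under a latency budget. It is not FlexEye's actual implementation: the `IspProfile` type, the profile names, and all accuracy and latency values are hypothetical placeholders, not measurements from the experiments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IspProfile:
    """One pre-tuned ISP configuration (hypothetical; values are placeholders)."""
    name: str
    accuracy: float    # downstream task accuracy in [0, 1]
    latency_ms: float  # ISP latency for one frame


def pareto_front(profiles):
    """Keep profiles that no other profile beats on both accuracy and latency."""
    front = []
    for p in profiles:
        dominated = any(
            q.accuracy >= p.accuracy
            and q.latency_ms <= p.latency_ms
            and (q.accuracy > p.accuracy or q.latency_ms < p.latency_ms)
            for q in profiles
        )
        if not dominated:
            front.append(p)
    return front


def pick_profile(profiles, latency_budget_ms):
    """Most accurate profile within the budget; fall back to the fastest one."""
    feasible = [p for p in profiles if p.latency_ms <= latency_budget_ms]
    if feasible:
        return max(feasible, key=lambda p: p.accuracy)
    return min(profiles, key=lambda p: p.latency_ms)


# Placeholder operating points, for illustration only.
candidates = [
    IspProfile("full", accuracy=0.62, latency_ms=10.0),
    IspProfile("balanced", accuracy=0.60, latency_ms=7.5),
    IspProfile("lite", accuracy=0.55, latency_ms=4.0),
    IspProfile("wasteful", accuracy=0.54, latency_ms=6.0),
]
front = pareto_front(candidates)
chosen = pick_profile(front, latency_budget_ms=8.0)
```

Restricting the choice to the Pareto front means a tighter latency budget never selects a configuration that is both slower and less accurate than an available alternative.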

An image signal processor (ISP) transforms a sensor's raw image into an RGB image for use in computer or human vision applications. An ISP is composed of various functional blocks, each of which contributes uniquely to making the image best suited to the target application. Each block in turn has several hyperparameters, and each hyperparameter must be tuned (usually manually, by experts, in an iterative manner) to achieve the target image quality (IQ). Tuning becomes especially challenging and iterative in low to very low light conditions, where the amount of detail preserved by the sensor is limited and the ISP parameters have to balance recovered detail against noise, sharpness, contrast, and so on. Extracting the maximum information from the image usually requires increasing the ISO gain, which in turn degrades noise and color accuracy. Moreover, the number of ISP parameters that need to be tuned is large, and it becomes impractical to consider all of them in such low light conditions to arrive at the best possible settings. To tackle these challenges of manual tuning, especially in low light, we have implemented an automatic hyperparameter optimization model that tunes low-lux images so that they are perceptually equivalent to high-lux images. The IQ validation experiments are carried out under challenging low light conditions and scenarios using Qualcomm’s Spectra ISP simulator with a 13MP OV sensor, and the automatically tuned IQ is compared with manually tuned IQ for human vision use cases. The experimental results show that, with evolutionary algorithms and local optimization, the ISP parameters can be optimized without using any KPI metrics so that a low-lux image, or an image captured with a different ISP (the test image), is perceptually improved to be equivalent to a high-lux or well-tuned (reference) image.
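The combination of evolutionary search and local optimization described above can be sketched as follows. This is a toy illustration under stated assumptions, not the tuning model used in the experiments. The two-parameter `toy_isp` (gain and gamma), the parameter bounds, the mean-squared-error `distance` (a stand-in for a perceptual similarity measure), and the synthetic reference image are all hypothetical.

```python
import random

BOUNDS = [(0.5, 8.0), (0.2, 2.0)]  # assumed ranges for (gain, gamma)


def toy_isp(raw, params):
    """Hypothetical two-knob ISP: digital gain with clipping, then gamma."""
    gain, gamma = params
    return [min(1.0, max(0.0, p * gain)) ** gamma for p in raw]


def distance(img_a, img_b):
    """Mean squared error, standing in for a perceptual metric."""
    return sum((a - b) ** 2 for a, b in zip(img_a, img_b)) / len(img_a)


def clip(params):
    """Clamp each parameter to its allowed range."""
    return [min(hi, max(lo, p)) for p, (lo, hi) in zip(params, BOUNDS)]


def evolve(loss, rng, pop_size=20, parents=5, generations=30, sigma=0.3):
    """Global stage: elitist evolutionary search with Gaussian mutation."""
    pop = [[rng.uniform(lo, hi) for lo, hi in BOUNDS] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=loss)
        elite = pop[:parents]  # survivors are carried over unchanged
        children = [
            clip([g + rng.gauss(0.0, sigma) for g in rng.choice(elite)])
            for _ in range(pop_size - parents)
        ]
        pop = elite + children
    return min(pop, key=loss)


def local_refine(loss, start, step=0.1, min_step=1e-4):
    """Local stage: coordinate search that halves the step when stuck."""
    best, best_loss = list(start), loss(start)
    while step > min_step:
        improved = False
        for i in range(len(best)):
            for delta in (step, -step):
                cand = clip(best[:i] + [best[i] + delta] + best[i + 1:])
                cand_loss = loss(cand)
                if cand_loss < best_loss:
                    best, best_loss, improved = cand, cand_loss, True
        if not improved:
            step /= 2.0
    return best, best_loss


rng = random.Random(0)
raw_low_lux = [rng.uniform(0.0, 0.2) for _ in range(256)]  # synthetic dark frame
reference = toy_isp(raw_low_lux, [4.0, 0.45])              # synthetic "well-tuned" target


def loss(params):
    """Distance between the processed test image and the reference image."""
    return distance(toy_isp(raw_low_lux, params), reference)


coarse = evolve(loss, rng)
tuned, tuned_loss = local_refine(loss, coarse)
```

The evolutionary stage explores the parameter space without needing gradients, and the local stage then polishes the best candidate. In a real pipeline the loss would compare the ISP simulator's output against a high-lux or well-tuned reference capture rather than a synthetic target.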