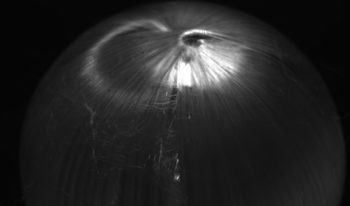

Regression-based radiance field reconstruction strategies, such as neural radiance fields (NeRFs) and the physics-based 3D Gaussian splatting (3DGS), have gained popularity in novel view synthesis and scene representation. These methods parameterize a high-dimensional function that represents a radiance field from low-dimensional camera input. However, these problems are ill-posed, and the methods struggle to represent high-spatial-frequency data, which manifests as reconstruction artifacts when estimating fine details such as small hairs, fibers, or reflective surfaces. Here we show that classical spherical sampling around a target, often referred to as sampling a bounded scene, inhomogeneously samples the target's Fourier domain, resulting in spectral bias in the collected samples. We generalize the ill-posed problems of view synthesis and scene representation as expressions of projection tomography and explore the upper-bound reconstruction limits of regression-based and integration-based strategies. We introduce a physics-based sampling strategy that we apply directly to 3DGS, and demonstrate high-fidelity 3D anisotropic radiance field reconstructions with PSNR scores as high as 44.04 dB and SSIM scores of 0.99, following the same metric analysis as defined in Mip-NeRF360.
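
The inhomogeneous Fourier sampling described above can be illustrated with a simplified 2D analogue. The sketch below is not the sampling strategy introduced in this work. It assumes idealized parallel projections taken at uniformly spaced angles and relies on the projection-slice theorem, under which each projection contributes one line of samples through the origin of the target's Fourier domain. The function name `radial_sample_density` and all parameter values are hypothetical and chosen only for illustration.

```python
import numpy as np

def radial_sample_density(n_angles=180, n_radial=256, n_bins=8):
    """Fourier-domain sample density versus radial frequency (2D toy model).

    Assumption: each parallel projection at angle theta yields one central
    slice (a line through the origin) of the 2D Fourier domain.
    """
    # Uniformly spaced view angles around the target.
    thetas = np.linspace(0.0, np.pi, n_angles, endpoint=False)
    # Uniformly spaced, normalized frequency samples along each slice.
    radii = np.linspace(-1.0, 1.0, n_radial)

    # Fourier-domain coordinates of every sample on every slice.
    kx = np.outer(np.cos(thetas), radii).ravel()
    ky = np.outer(np.sin(thetas), radii).ravel()
    k_mag = np.hypot(kx, ky)

    # Count samples in equal-width annuli and divide by each annulus area.
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    counts, _ = np.histogram(k_mag, bins=edges)
    areas = np.pi * (edges[1:] ** 2 - edges[:-1] ** 2)
    return edges, counts / areas

edges, density = radial_sample_density()
for lo, hi, d in zip(edges[:-1], edges[1:], density):
    print(f"|k| in [{lo:.3f}, {hi:.3f}): {d:10.1f} samples per unit area")
```

In this toy setting, each annulus receives roughly the same number of samples while its area grows with radius, so the sample density falls off toward high spatial frequencies. This is one way to picture the spectral bias in the collected samples; the 3D case with perspective cameras differs in its details.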