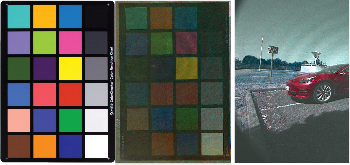

Most cameras use a single-sensor arrangement with a Color Filter Array (CFA). The color interpolation performed during image demosaicing is usually the cause of visual artifacts in the captured image. While the severity of these artifacts depends on the demosaicing method used, the artifacts themselves are mainly zipper artifacts (blocky artifacts across edges) and false-color distortions. To evaluate the performance of demosaicing methods, this study conducted a subjective pair-comparison experiment with 15 observers on six methods (Nearest Neighbours, Bilinear interpolation, Laplacian, Adaptive Laplacian, Smooth hue transition, and Gradient-Based image interpolation) and nine scenes. The subjective scores and scene images were then compiled into a dataset and used to evaluate a set of no-reference image quality metrics. Assessing these metrics in terms of their correlation with the subjective scores shows that many of the evaluated no-reference metrics cannot predict perceived image quality.
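Bilinear interpolation is the simplest interpolating method among the six compared above, and it shows why zipper artifacts appear: every missing value is averaged from its neighbours, even across an edge. The following is only an illustrative sketch, not the study's implementation. It assumes an RGGB Bayer layout, and the names `bayer_masks` and `bilinear_demosaic` are hypothetical.

```python
import numpy as np
from scipy.ndimage import convolve

def bayer_masks(h, w):
    """Boolean sampling masks for an RGGB Bayer layout (assumed layout)."""
    r = np.zeros((h, w), bool)
    g = np.zeros((h, w), bool)
    b = np.zeros((h, w), bool)
    r[0::2, 0::2] = True
    g[0::2, 1::2] = True
    g[1::2, 0::2] = True
    b[1::2, 1::2] = True
    return r, g, b

def bilinear_demosaic(raw):
    """Bilinear demosaicing of a 2-D Bayer mosaic into an (H, W, 3) image."""
    h, w = raw.shape
    r_m, g_m, b_m = bayer_masks(h, w)
    # G: average of the 4 direct neighbours; R/B: average of 2 or 4 neighbours.
    # At sampled positions the centre weight is 1 and the other taps hit zeros.
    k_g = np.array([[0, 1, 0], [1, 4, 1], [0, 1, 0]]) / 4.0
    k_rb = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]]) / 4.0
    out = np.empty((h, w, 3))
    for c, (m, k) in enumerate([(r_m, k_rb), (g_m, k_g), (b_m, k_rb)]):
        # "mirror" reflection keeps the 2x2 CFA phase consistent at the borders
        out[..., c] = convolve(raw * m, k, mode="mirror")
    return out
```

On a uniformly colored scene this reproduces the input exactly; the errors (and the zipper pattern) only appear where the image content changes within the 3x3 neighbourhood.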

The exploration of the Solar System using unmanned probes and rovers has improved the understanding of our planetary neighbors. Despite the large variety of instruments available, optical and near-infrared cameras remain critical for these missions, both to understand a planet's surroundings and geology and to communicate easily with the general public. However, missions on planetary bodies must satisfy strong constraints in terms of robustness, data size, and onboard computing power. Although this is changing, commercial image-processing software cannot be integrated. Still, because optical and spectral information about planetary surfaces is a key science objective, spectral filter arrays (SFAs) provide an elegant, compact, and cost-efficient solution for rovers. In this contribution, we present ways to process multispectral images on the ground to obtain the best image quality while remaining as generic as possible. This study is performed on a prototype SFA. Demosaicing algorithms and methods to correct the spectral and color information in these images are also detailed. An application of these methods to a custom-built SFA is shown, demonstrating that this technology is a promising solution for rovers.
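The abstract does not specify which demosaicing algorithms are used. As a generic baseline in the spirit of "remaining as generic as possible", one option is normalized convolution with a tent kernel matched to the filter period, which works for any square periodic SFA tile. This is a sketch under that assumption; `tent_kernel` and `sfa_demosaic` are hypothetical names, and each band index is assumed to appear at least once in the tile.

```python
import numpy as np
from scipy.ndimage import convolve

def tent_kernel(period):
    """Separable 2-D tent (bilinear) kernel whose half-width equals the SFA period."""
    d = np.arange(-(period - 1), period)
    k1 = 1.0 - np.abs(d) / period
    return np.outer(k1, k1)

def sfa_demosaic(raw, pattern):
    """Generic SFA demosaicing by normalized convolution.

    raw:     2-D mosaic image.
    pattern: square integer array (P x P) giving the band index of each filter
             in the repeating tile, e.g. a 4 x 4 tile for 16 bands.
    Returns an (H, W, n_bands) spectral cube.
    """
    h, w = raw.shape
    p = pattern.shape[0]
    assert pattern.shape == (p, p), "square tile assumed for simplicity"
    # Band index of every pixel, obtained by repeating the tile over the sensor
    tiled = np.tile(pattern, (h // p + 1, w // p + 1))[:h, :w]
    k = tent_kernel(p)
    n_bands = int(pattern.max()) + 1
    cube = np.empty((h, w, n_bands))
    for band in range(n_bands):
        mask = (tiled == band).astype(float)
        # Weighted sum of the known samples divided by the sum of their weights;
        # every pixel has a sample closer than P, so the denominator is positive.
        num = convolve(raw * mask, k, mode="constant")
        den = convolve(mask, k, mode="constant")
        cube[..., band] = num / den
    return cube
```

Normalized convolution handles image borders without special cases, and for a single sample per band and tile it reduces to bilinear interpolation in the interior.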

This article considers the joint demosaicing of colour and polarisation image content captured with a Colour and Polarisation Filter Array (CPFA) imaging system. The Linear Minimum Mean Square Error (LMMSE) algorithm is applied to this case, and its performance is compared to that of the state-of-the-art Edge-Aware Residual Interpolation algorithm. Results show that, on the largest tested database, the LMMSE demosaicing method gives statistically higher peak signal-to-noise ratio (PSNR) scores than a CPFA-dedicated algorithm.
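In its general form, an LMMSE estimator predicts the full colour-polarisation vector $x$ at a pixel from the surrounding mosaic values $y$ as $\hat{x} = \mu_x + C_{xy} C_{yy}^{-1} (y - \mu_y)$. Estimated from training images, this is exactly an ordinary least-squares fit with a constant term. The sketch below learns one such affine estimator per position (phase) in the periodic super-pixel. It is an illustration only: the neighbourhood radius `r`, the square `period`, and the names `fit_lmmse`, `apply_lmmse` and `_phase_windows` are assumptions, not details from the article.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _phase_windows(mosaic, r, period, py, px):
    """Flattened (2r+1)^2 neighbourhoods of all pixels with phase (py, px)."""
    k = 2 * r + 1
    win = sliding_window_view(mosaic, (k, k))  # window (i, j) is centred on (i+r, j+r)
    sel = win[(py - r) % period::period, (px - r) % period::period]
    return sel.reshape(-1, k * k), sel.shape[:2]

def fit_lmmse(mosaics, cubes, period, r):
    """Empirical affine LMMSE estimator per phase: x_hat = [y, 1] @ D."""
    filters = {}
    for py in range(period):
        for px in range(period):
            ys, xs = [], []
            for m, c in zip(mosaics, cubes):
                y, (nh, nw) = _phase_windows(m, r, period, py, px)
                # Ground-truth values at the window centres (valid region only)
                ctr = c[r + (py - r) % period::period, r + (px - r) % period::period]
                ys.append(y)
                xs.append(ctr[:nh, :nw].reshape(-1, c.shape[2]))
            Y = np.vstack(ys)
            Y = np.hstack([Y, np.ones((Y.shape[0], 1))])  # constant term absorbs the means
            D, *_ = np.linalg.lstsq(Y, np.vstack(xs), rcond=None)
            filters[(py, px)] = D
    return filters

def apply_lmmse(mosaic, filters, period, r, n_channels):
    """Estimate all channels on the border-free (H-2r, W-2r) region."""
    h, w = mosaic.shape
    out = np.zeros((h - 2 * r, w - 2 * r, n_channels))
    for (py, px), D in filters.items():
        y, (nh, nw) = _phase_windows(mosaic, r, period, py, px)
        y = np.hstack([y, np.ones((y.shape[0], 1))])
        out[(py - r) % period::period, (px - r) % period::period] = (
            (y @ D).reshape(nh, nw, n_channels))
    return out
```

Because the estimator is learned from data, the same code applies to any filter layout; the CPFA-specific knowledge lives entirely in the training pairs.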

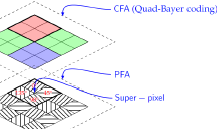

Can a mobile camera see better through a display? The Under-Display Camera (UDC) was the most awaited feature in the mobile market in 2020, promising a better user experience; however, there are technological obstacles to obtaining acceptable UDC image quality. Mobile OLED panels struggle to exceed 20% light transmittance, which leads to challenging capture conditions. To improve light sensitivity, some solutions use binned output, at the cost of spatial resolution. Diffraction of light in the panel degrades contrast and causes various visual artifacts, including image ghosts and a yellowish tint. The standard approach to these image quality issues is to improve individual blocks of the imaging pipeline, including the Image Signal Processor (ISP) and the deblur block. In this work, we propose a novel approach to improve UDC image quality: we replace all blocks of the UDC pipeline with a single all-in-one network, UDC d^Net. The proposed solution can deblur and reconstruct a full-resolution image directly from a non-Bayer raw image, e.g. Quad Bayer, without requiring a remosaic algorithm that rearranges non-Bayer data into a Bayer pattern. The proposed network has a very large receptive field and can easily handle large-scale visual artifacts, including color moiré and ghosts. Experiments show a significant improvement in image quality over the conventional pipeline: more than 4 dB in PSNR on the popular Kodak benchmark dataset.
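The network itself cannot be reconstructed from the abstract, but the Quad Bayer input it consumes and the binning trade-off it avoids are easy to illustrate. In a Quad Bayer sensor each Bayer colour covers a 2x2 pixel block; summing each block ("binning") yields an ordinary Bayer mosaic with better light sensitivity but half the resolution in each direction. The helper names below are hypothetical, and an RGGB base order is assumed.

```python
import numpy as np

def quad_bayer_pattern(h, w):
    """Channel index map (0=R, 1=G, 2=B) for a Quad Bayer sensor.

    Each colour of an RGGB Bayer tile (assumed order) is expanded to a 2x2
    block, so the colour pattern repeats every 4x4 pixels.
    """
    bayer = np.array([[0, 1], [1, 2]])
    quad = np.kron(bayer, np.ones((2, 2), int))
    return np.tile(quad, (h // 4 + 1, w // 4 + 1))[:h, :w]

def bin_2x2(raw):
    """Sum each 2x2 same-colour block into one pixel.

    The result is a half-resolution Bayer mosaic: better SNR, but only a
    quarter of the original pixel count.
    """
    h, w = raw.shape
    cropped = raw[: h - h % 2, : w - w % 2]
    return cropped.reshape(h // 2, 2, w // 2, 2).sum(axis=(1, 3))
```

A remosaic step would instead rearrange the full-resolution Quad Bayer samples into a full-resolution Bayer pattern; the proposed network skips both options and reconstructs directly from the Quad Bayer data.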

An RGB-IR sensor combines the capabilities of an RGB sensor and an IR sensor in a single sensor. However, the additional IR pixels in the RGB-IR sensor reduce the effective number of pixels allocated to the visible region, introducing aliasing artifacts during demosaicing. Also, the presence of IR content in the R, G and B channels poses new challenges for accurate color reproduction. Sharpness and color reproduction are very important image quality factors for visual aesthetics as well as for computer vision algorithms. The demosaicing and color correction modules are integral parts of any color image processing pipeline and are responsible for sharpness and color reproduction, respectively. The image processing pipeline has not been fully explored for RGB-IR sensors. We propose a neural network-based approach to demosaicing and color correction for RGB-IR patterned image sensors. Our experimental results show that this learning-based approach performs better than existing demosaicing and color correction methods.
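For comparison with the learned approach, a conventional linear color correction for RGB-IR data can be sketched as a 3x4 matrix that maps demosaiced [R, G, B, IR] values to reference RGB, fitted by least squares on, for example, color-chart patches. The IR column lets the fit subtract IR leakage from the visible channels. This is a hypothetical baseline for illustration, not the paper's method; the names `fit_rgbir_ccm` and `apply_ccm` are assumptions.

```python
import numpy as np

def fit_rgbir_ccm(measured, target):
    """Least-squares 3x4 color-correction matrix for an RGB-IR sensor.

    measured: (N, 4) demosaiced [R, G, B, IR] values, e.g. of chart patches.
    target:   (N, 3) reference linear RGB values for the same patches.
    """
    m, *_ = np.linalg.lstsq(measured, target, rcond=None)  # (4, 3) solution
    return m.T                                             # (3, 4) matrix

def apply_ccm(ccm, pixels):
    """Apply a (3, 4) matrix to (..., 4) RGB-IR pixels, giving (..., 3) RGB."""
    return pixels @ ccm.T
```

When the IR leakage is additive and linear, as in the synthetic check below, such a matrix recovers the exact subtraction; real sensors deviate from this model, which is part of the motivation for a learned, nonlinear correction.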

The non-stationary nature of image characteristics calls for adaptive processing based on the local image content. We propose a simple and flexible method to learn the local tuning of parameters in adaptive image processing: we extract simple local features from an image and learn the relation between these features and the optimal filtering parameters. Learning is performed by optimizing a user-defined cost function (any image quality metric) on a training set. We apply our method to three classical problems (denoising, demosaicing and deblurring) and show the effectiveness of the learned parameter modulation strategies. We also show that these strategies are consistent with theoretical results from the literature.
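The idea of learning a mapping from local features to filter parameters can be sketched for denoising as follows. The assumptions here are ours, not the paper's: the feature is the local standard deviation quantized into bins, the adaptive filter is a Gaussian blur whose sigma is chosen from a small candidate set, and the cost is squared error, which decomposes per pixel so that each bin can be optimized independently. All names and the `SIGMAS` values are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, uniform_filter

SIGMAS = np.array([0.0, 0.5, 1.0, 1.5, 2.0, 3.0])  # candidate parameters (assumed)

def local_std(img, size=5):
    """Simple local feature: standard deviation in a size x size window."""
    mean = uniform_filter(img, size)
    sq = uniform_filter(img * img, size)
    return np.sqrt(np.maximum(sq - mean * mean, 0.0))

def filter_bank(img):
    """(n_sigmas, H, W) stack of Gaussian-filtered versions of img."""
    return np.stack([img if s == 0 else gaussian_filter(img, s) for s in SIGMAS])

def learn_modulation(noisy_list, clean_list, edges):
    """Return, per feature bin, the index into SIGMAS minimizing training MSE."""
    n_bins = len(edges) + 1
    err = np.zeros((n_bins, len(SIGMAS)))
    for noisy, clean in zip(noisy_list, clean_list):
        bins = np.digitize(local_std(noisy), edges)
        sq_err = (filter_bank(noisy) - clean) ** 2  # (n_sigmas, H, W)
        for b in range(n_bins):
            err[b] += sq_err[:, bins == b].sum(axis=1)
    # Bins never seen in training have all-zero error and fall back to index 0
    return np.argmin(err, axis=1)

def apply_modulation(noisy, edges, best_idx):
    """Filter each pixel with the parameter learned for its feature bin."""
    bins = np.digitize(local_std(noisy), edges)
    choice = best_idx[bins]  # (H, W) index into SIGMAS
    return np.take_along_axis(filter_bank(noisy), choice[None], axis=0)[0]
```

Typically the learned table assigns strong smoothing to flat (low-variance) bins and little or none to high-variance bins near edges, which is the kind of behavior one would expect from theory.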

Recently, a CFA sensor including a white (W) channel has been developed. The W channel captures brightness information: its spectral band is broad compared to those of the primary colors (red, green, blue), so it exhibits a high SNR. A camera using such a CFA sensor obtains only one type of color information at each pixel, so a color interpolation method is used to restore the full-resolution image. In this paper, we propose a new color interpolation method for the Sony-RGBW CFA. The proposed method is edge-adaptive and first restores the W channel, which has a high sampling rate. In the next step, the R, G, and B channels are restored in the color difference domain using the full-resolution W channel. Experimental results show that the image reconstructed by applying the proposed algorithm to the Sony-RGBW CFA is superior to the image restored by the conventional method, both in terms of SNR and by visual inspection.
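The second step, restoring a color channel in the color difference domain, can be sketched as: compute C - W at the pixels where color C was sampled, interpolate that difference (which is usually smoother than C itself), and add the full-resolution W back. For brevity this sketch uses a plain normalized box average instead of the paper's edge-adaptive interpolation, and it does not model the actual Sony-RGBW layout; the function name and `radius` parameter are hypothetical, and `radius` must be large enough that every pixel sees at least one sample of the channel.

```python
import numpy as np
from scipy.ndimage import convolve

def restore_channel_color_difference(raw, mask, w_full, radius=2):
    """Restore one color channel (R, G or B) in the color-difference domain.

    raw:    RGBW mosaic; only entries where `mask` is True belong to this channel.
    mask:   boolean sampling mask of the channel in the CFA.
    w_full: full-resolution W channel (assumed already interpolated).
    """
    diff = np.where(mask, raw - w_full, 0.0)      # C - W, known only at samples
    k = np.ones((2 * radius + 1, 2 * radius + 1))  # box kernel, not edge-adaptive
    num = convolve(diff, k, mode="mirror")
    den = convolve(mask.astype(float), k, mode="mirror")
    d_full = num / np.maximum(den, 1e-12)          # average of nearby differences
    d_full[mask] = diff[mask]                      # keep the measured samples
    return w_full + d_full
```

Where the color difference is locally constant, this reconstruction is exact even though the channel itself varies, which is why interpolating differences against a dense, high-SNR W channel helps.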