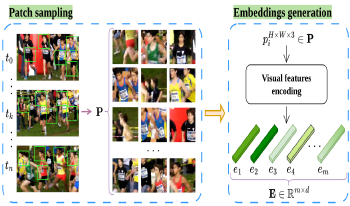

This paper proposes a novel frame selection technique based on embedding similarity to optimize video quality assessment (VQA). By leveraging high-dimensional feature embeddings extracted from deep neural networks (ResNet-50, VGG-16, and CLIP), we introduce a similarity-preserving approach that prioritizes perceptually relevant frames while reducing redundancy. The proposed method is evaluated on two datasets, CVD2014 and KoNViD-1k, demonstrating robust performance across synthetic and real-world distortions. Results show that the proposed approach outperforms state-of-the-art methods, particularly on the diverse, in-the-wild video content of KoNViD-1k. This work highlights the importance of embedding-driven frame selection in improving the accuracy and efficiency of VQA methods.
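
As an illustration of embedding-driven frame selection, the sketch below keeps a frame only when its embedding differs enough from the last kept frame. The greedy rule, the threshold of 0.9, and the function names are assumptions made for this example and are not the selection rule of the paper; in practice the embeddings would come from a network such as ResNet-50, VGG-16, or CLIP rather than the toy 2-D vectors used here.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_frames(embeddings, threshold=0.9):
    """Greedy selection (assumed rule): keep frame i only if its similarity
    to the most recently kept frame is below `threshold`."""
    if not embeddings:
        return []
    kept = [0]  # always keep the first frame
    for i in range(1, len(embeddings)):
        if cosine_similarity(embeddings[kept[-1]], embeddings[i]) < threshold:
            kept.append(i)
    return kept


# Toy embeddings: frames 0 and 1 are near-duplicates, as are frames 2 and 3.
frames = [[1.0, 0.0], [0.99, 0.05], [0.0, 1.0], [0.05, 0.99]]
print(select_frames(frames))  # indices of the frames that would be kept
```

A lower threshold keeps fewer frames and trades temporal coverage for efficiency.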

In this paper, we present a novel technique that allows for customized Gabor texture features by leveraging deep learning neural networks. Our method involves using a Convolutional Neural Network to refactor traditional, hand-designed filters on specific datasets. The refactored filters can be used in an off-the-shelf manner with the same computational cost but significantly improved accuracy for material recognition. We demonstrate the effectiveness of our approach by reporting a gain in discrimination accuracy on different material datasets. Our technique is particularly appealing in situations where the use of the entire CNN would be inadequate, such as analyzing non-square images or performing segmentation tasks. Overall, our approach provides a powerful tool for improving the accuracy of material recognition tasks while retaining the advantages of handcrafted filters.
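
For reference, a traditional hand-designed Gabor filter is a Gaussian envelope multiplied by a sinusoidal carrier. The sketch below builds the real part of such a kernel in plain Python; the parameter names and default values are assumptions chosen for illustration, and the code shows only the classical filter, not the CNN-based refactoring described in the abstract.

```python
import math


def gabor_kernel(size, sigma, theta, wavelength, gamma=0.5, psi=0.0):
    """Real part of a 2-D Gabor kernel as a list of rows.

    size: odd kernel width/height; sigma: Gaussian scale;
    theta: orientation in radians; wavelength: carrier wavelength;
    gamma: spatial aspect ratio; psi: phase offset.
    """
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates into the filter's orientation.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr * xr + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * xr / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


kernel = gabor_kernel(size=5, sigma=2.0, theta=0.0, wavelength=4.0)
for row in kernel:
    print(" ".join(f"{v:+.3f}" for v in row))
```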

Scientific user facilities present a unique set of challenges for image processing due to the large volume of data generated from experiments and simulations. Furthermore, developing and implementing algorithms for real-time processing and analysis while correcting for any artifacts or distortions in images remains a complex task, given the computational requirements of the processing algorithms. In a collaborative effort across multiple Department of Energy national laboratories, the "MLExchange" project is focused on addressing these challenges. MLExchange is a Machine Learning framework deploying interactive web interfaces to enhance and accelerate data analysis. The platform allows users to easily upload, visualize, and label data, and to train networks. The resulting models can be deployed on real data, and both results and models can be shared with the scientists. The MLExchange web-based application for image segmentation allows for training, testing, and evaluating multiple machine learning models on hand-labeled tomography data. This environment provides users with an intuitive interface for segmenting images using a variety of machine learning algorithms and deep-learning neural networks. Additionally, these tools have the potential to overcome limitations in traditional image segmentation techniques, particularly for complex and low-contrast images.

Scale invariance and high miss-detection rates for small objects are among the challenging issues in object detection and often lead to inaccurate results. This research aims to provide an accurate detection model for crowd counting by focusing on human head detection in natural scenes taken from the publicly available Casablanca, Hollywood-Heads, and SCUT-HEAD datasets. In this study, we fine-tuned YOLOv5, a deep convolutional neural network (CNN) based object detection architecture, using a transfer learning approach, and then evaluated the model using mean average precision (mAP), precision, and recall. Training on one dataset and testing the model on another leads to inaccurate results because the types of heads differ between datasets. Another main contribution of our research is therefore combining the three datasets into a single dataset that covers heads of every size: small, medium, and large. The experimental results show that the YOLOv5 architecture significantly improves small head detection in crowded scenes compared to baseline approaches such as Faster R-CNN and the VGG-16-based SSD MultiBox Detector.
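
Precision and recall for a detector depend on how predicted boxes are matched to ground-truth boxes. The sketch below shows one common way to do this with an Intersection over Union (IoU) threshold; the greedy one-to-one matching, the 0.5 threshold, and the function names are assumptions for illustration and are not taken from the study's evaluation code.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy matching: each prediction (assumed sorted by confidence,
    highest first) claims the best still-unmatched ground-truth box."""
    matched = set()
    true_positives = 0
    for pred in predictions:
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue
            iou = box_iou(pred, gt)
            if iou > best_iou:
                best_iou, best_idx = iou, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


heads = [(0, 0, 10, 10), (20, 20, 30, 30)]      # hypothetical ground truth
detections = [(0, 0, 10, 10), (50, 50, 60, 60)]  # one hit, one false alarm
print(precision_recall(detections, heads))
```

Small heads are especially sensitive to the IoU threshold, since a shift of a few pixels removes a large fraction of the overlap.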

Image classification is extensively used in various applications such as satellite imagery, autonomous driving, smartphones, and healthcare. Most of the images used to train classification models can be considered ideal, i.e., free of degradation caused by corrupted pixels in the camera sensor, blur from sudden shake, or compression into a specific image format. In this paper, we propose ILIAC, a novel CNN-based architecture for classifying degraded images that combines intermediate-layer knowledge distillation with the cutout data augmentation approach. Our approach achieves mean accuracy improvements of 1.1% and 0.4% across all degradation levels of JPEG and AWGN, respectively, compared to the current state-of-the-art approach. Furthermore, the ILIAC method is computationally efficient: it is about half the size of the previous state-of-the-art approach in terms of model parameters and GFLOPs. Additionally, we demonstrate that knowledge distillation does not necessarily need a larger teacher network to improve the performance and generalization of a smaller student network for the classification of degraded images.
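
Cutout augmentation masks a random square region of each training image so that the network cannot rely on any single local pattern. The sketch below is a minimal plain-Python version operating on a nested list; the patch size, the fill value, and the function name are assumptions for illustration, not the settings used by ILIAC.

```python
import random


def cutout(image, size, rng=None, fill=0):
    """Return a copy of `image` (a list of rows) with one square patch of
    side `size` set to `fill`. The patch is centered on a random pixel and
    clipped at the image borders, so it may be smaller near the edges."""
    rng = rng or random.Random()
    height, width = len(image), len(image[0])
    cy, cx = rng.randrange(height), rng.randrange(width)
    y1 = cy - size // 2
    x1 = cx - size // 2
    y2, x2 = y1 + size, x1 + size
    # Clip the patch to the image bounds.
    y1, y2 = max(0, y1), min(height, y2)
    x1, x2 = max(0, x1), min(width, x2)
    out = [row[:] for row in image]  # leave the input untouched
    for y in range(y1, y2):
        for x in range(x1, x2):
            out[y][x] = fill
    return out


image = [[1] * 8 for _ in range(8)]
augmented = cutout(image, size=4, rng=random.Random(0))
for row in augmented:
    print("".join(str(v) for v in row))
```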

Computer vision systems are increasingly deployed in diverse real-time systems; hence, robustness is a major area of concern. The vast majority of AI-enabled systems are based on convolutional neural network models that take 3-channel RGB images as input. It has been shown that the performance of AI systems, such as those used in classification, is impacted by distortions in the images. To date, most work has addressed distortions such as noise, blur, and compression. However, color-related changes to images could also impact performance. Therefore, the goal of this paper is to study the robustness of these models under different hue shifts.
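
A hue shift can be applied by converting each RGB pixel to HSV, rotating the hue channel, and converting back. The sketch below uses Python's standard `colorsys` module; the function names and the choice of expressing the shift in degrees are assumptions for illustration, and the paper may generate its hue-shifted test images differently.

```python
import colorsys


def shift_hue(pixel, degrees):
    """Rotate the hue of an (r, g, b) pixel with 0..255 channels."""
    r, g, b = (c / 255.0 for c in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h = (h + degrees / 360.0) % 1.0  # hue is cyclic in [0, 1)
    r2, g2, b2 = colorsys.hsv_to_rgb(h, s, v)
    return tuple(round(c * 255) for c in (r2, g2, b2))


def shift_image_hue(image, degrees):
    """Apply the same hue rotation to every pixel of a nested-list image."""
    return [[shift_hue(p, degrees) for p in row] for row in image]


image = [[(255, 0, 0), (128, 128, 128)]]  # one red pixel, one gray pixel
print(shift_image_hue(image, 120))
```

Saturation and value are left unchanged, so gray pixels are unaffected while saturated colors move around the color wheel.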

Transfer Learning is an important strategy in Computer Vision to tackle problems in the face of limited training data. However, this strategy still heavily depends on the amount of available data, which is a challenge for small heritage institutions. This paper investigates various ways of enriching smaller digital heritage collections to boost the performance of deep learning models, using the identification of musical instruments as a case study. We apply traditional data augmentation techniques as well as an external, photorealistic collection distorted by Style Transfer. Style Transfer techniques are capable of artistically stylizing images, reusing the style from any other given image. Hence, collections can be easily augmented with artificially generated images. We introduce the distinction between inner and outer style transfer and show that artificially augmented images in both scenarios consistently improve classification results, on top of traditional data augmentation techniques. However, and counter-intuitively, such artificially generated artistic depictions of works are surprisingly hard to classify. In addition, we discuss an example of negative transfer within the non-photorealistic domain.

Object sizes in images are diverse; therefore, capturing context information at multiple scales is essential for semantic segmentation. Existing context aggregation methods such as the pyramid pooling module (PPM) and atrous spatial pyramid pooling (ASPP) employ different pooling sizes or atrous rates so that multi-scale information is captured. However, the pooling sizes and atrous rates are chosen empirically. Rethinking ASPP leads to our observation that learnable sampling locations in the convolution operation can endow the network with a learnable field-of-view, and thus the ability to capture object context information adaptively. Following this observation, we propose an adaptive context encoding (ACE) module based on the deformable convolution operation, in which the sampling locations of the convolution are learnable. Our ACE module can be easily embedded into other Convolutional Neural Networks (CNNs) for context aggregation. The effectiveness of the proposed module is demonstrated on the Pascal-Context and ADE20K datasets. Although our proposed ACE consists of only three deformable convolution blocks, it outperforms PPM and ASPP in terms of mean Intersection over Union (mIoU) on both datasets. All the experimental studies confirm that our proposed module is effective compared to the state-of-the-art methods.
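
The mIoU metric used for this comparison averages the per-class Intersection over Union between predicted and ground-truth label maps. The sketch below computes it on flattened label sequences in plain Python; skipping classes that appear in neither map is one common convention and is an assumption here, as benchmark toolkits differ in how they handle absent classes.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over classes for two equal-length sequences of class indices.

    Classes that occur in neither `pred` nor `target` are skipped
    (assumed convention) so they do not distort the average.
    """
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


pred = [0, 0, 1, 1]    # hypothetical predicted labels for four pixels
target = [0, 1, 1, 1]  # hypothetical ground-truth labels
print(round(mean_iou(pred, target, num_classes=3), 4))
```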

Image steganography can have legitimate uses, for example, augmenting an image with a watermark for copyright reasons, but can also be utilized for malicious purposes. We investigate the detection of malicious steganography using neural network-based classification when images are transmitted through a noisy channel. Noise makes detection harder because the classifier must not only detect perturbations in the image but also decide whether they are due to malicious steganographic modifications or to natural noise. Our results show that reliable detection is possible even for state-of-the-art steganographic algorithms that insert stego bits without affecting an image’s visual quality. The detection accuracy is high (above 85%) if the payload, i.e., the amount of steganographic content in an image, exceeds a certain threshold. At the same time, noise critically affects the steganographic information being transmitted, both through desynchronization (destruction of the information about which bits of the image contain steganographic content) and by flipping these bits themselves. This forces the adversary to use a redundant encoding with a substantial number of error-correction bits for reliable transmission, making detection feasible even for small payloads.
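
The cost of redundancy can be illustrated with the simplest error-correcting scheme, a repetition code with majority-vote decoding. The sketch below is only a toy model: the bit-flip channel, the repetition factor of 3, and the function names are assumptions, the abstract does not say which code an adversary would use, and desynchronization is not modeled.

```python
import random


def encode_repetition(bits, k):
    """Repeat every payload bit k times."""
    return [b for b in bits for _ in range(k)]


def flip_bits(bits, p, rng):
    """Noisy channel: flip each bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def decode_repetition(bits, k):
    """Majority vote over each group of k received bits (k should be odd)."""
    return [1 if sum(bits[i:i + k]) * 2 > k else 0
            for i in range(0, len(bits), k)]


payload = [1, 0, 1, 1, 0]
sent = encode_repetition(payload, k=3)        # 15 embedded bits for 5 payload bits
received = flip_bits(sent, p=0.05, rng=random.Random(1))
print(len(sent), decode_repetition(received, k=3))
```

With a factor of 3 the embedded bit count triples, which is the kind of payload growth that makes the stego content easier to detect.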

The objective of this paper is to investigate dynamic computation of the Zero-Parallax-Setting (ZPS) for multi-view autostereoscopic displays in order to effectively alleviate blurry 3D vision for images with large disparity. Saliency detection techniques yield a saliency map, a topographic representation of saliency that marks visually dominant locations. Using the saliency map, we can predict what attracts viewers' attention, i.e., the region of interest. Recently, deep learning techniques have been applied to saliency detection. Deep learning-based salient object detection methods have the advantage of highlighting most of the salient objects. With the help of a depth map, the spatial distribution of salient objects can be computed. In this paper, we compare two dynamic ZPS techniques based on visual attention: 1) maximum saliency computation by the Graph-Based Visual Saliency (GBVS) algorithm and 2) spatial distribution of salient objects by a convolutional neural network (CNN)-based model. Experiments show that both methods can help improve the 3D effect of autostereoscopic displays. Moreover, the dynamic ZPS technique based on the spatial distribution of salient objects achieves better 3D performance than the maximum saliency-based method.
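
The difference between the two strategies can be sketched by treating the ZPS as a depth value chosen from the depth map. In the sketch below, the first function takes the depth at the single most salient pixel and the second takes a saliency-weighted mean depth; both formulas, the function names, and the toy maps are assumptions for illustration and are not the paper's actual computations.

```python
def zps_from_max_saliency(saliency, depth):
    """Depth at the most salient pixel (assumed reading of strategy 1)."""
    height, width = len(saliency), len(saliency[0])
    y, x = max(((r, c) for r in range(height) for c in range(width)),
               key=lambda rc: saliency[rc[0]][rc[1]])
    return depth[y][x]


def zps_from_salient_distribution(saliency, depth):
    """Saliency-weighted mean depth (assumed reading of strategy 2)."""
    total = sum(sum(row) for row in saliency)
    if total == 0:
        # No salient region: fall back to the plain mean depth.
        values = [d for row in depth for d in row]
        return sum(values) / len(values)
    weighted = sum(s * d
                   for s_row, d_row in zip(saliency, depth)
                   for s, d in zip(s_row, d_row))
    return weighted / total


saliency = [[0.0, 1.0], [0.5, 0.5]]  # toy saliency map
depth = [[10, 20], [30, 40]]          # toy depth map
print(zps_from_max_saliency(saliency, depth),
      zps_from_salient_distribution(saliency, depth))
```

The weighted version accounts for every salient region rather than a single peak, which is one plausible reason a distribution-based setting could behave more stably.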