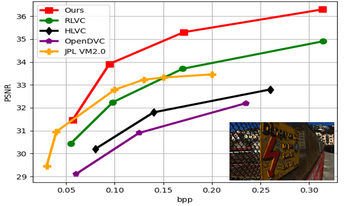

In recent years, several deep learning-based architectures have been proposed to compress Light Field (LF) images as pseudo video sequences. However, most of these techniques employ conventional compression-focused networks. In this paper, we introduce a version of a previously designed deep learning video compression network, adapted and optimized specifically for LF image compression. We enhance this network by incorporating an in-loop filtering block, along with additional adjustments and fine-tuning. By treating LF images as pseudo video sequences and deploying our adapted network, we manage to address challenges presented by the unique features of LF images, such as high resolution and large data sizes. Our method compresses these images competently, preserving their quality and unique characteristics. With the thorough fine-tuning and inclusion of the in-loop filtering network, our approach shows improved performance in terms of Peak Signal-to-Noise Ratio (PSNR) and Mean Structural Similarity Index Measure (MSSIM) when compared to other existing techniques. Our method provides a feasible path for LF image compression and may contribute to the emergence of new applications and advancements in this field.
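
For readers unfamiliar with the first quality metric named above, PSNR can be sketched as follows. This is a minimal illustration of the standard definition, not the evaluation code used in the paper; the function name `psnr`, the flat-sequence input format, and the 8-bit peak value of 255 are assumptions made for the example.

```python
import math


def psnr(reference, reconstructed, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB between two equally sized pixel sequences.

    `max_value` is the peak pixel value (255 assumes 8-bit samples).
    """
    if len(reference) != len(reconstructed) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error between the original and decoded samples.
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstructed)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)
```

When a light field is treated as a pseudo video sequence, one plausible way to report a single figure is to compute this per view (frame) and average the results, although the abstract does not state which averaging was used.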

Video compression is essential in automated vehicles and advanced driving assistance systems, where the sensor suite needed for robust situational awareness generates vast amounts of video data every second that must be transmitted and processed. The objective of this paper is to demonstrate that video compression can be optimised for the perception system that will consume the data. We consider deep neural networks that perform object (i.e. vehicle) detection on compressed camera video extracted from the KITTI MoSeg dataset. Preliminary results indicate that re-training the neural network with M-JPEG compressed videos can improve detection performance on both compressed and uncompressed transmitted data, improving recall and precision by up to 4% with respect to re-training with uncompressed data.
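
The detection metrics reported above can be made concrete with a short sketch. It uses the standard definitions of precision and recall from counts of true positives, false positives and false negatives; the function name and the zero-denominator convention are assumptions for illustration, and the matching rule that decides what counts as a true positive is not specified in the abstract.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) for a detector from raw detection counts."""
    predicted = true_positives + false_positives  # everything the detector reported
    actual = true_positives + false_negatives     # everything that was really there
    # Convention assumed here: report 0.0 when a denominator is zero.
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall
```

Comparing these two numbers for a detector trained on uncompressed video against one re-trained on compressed video, at the same compression level, is the kind of comparison the reported improvement refers to.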

Point clouds are essential for storage and transmission of 3D content. As they can entail significant volumes of data, point cloud compression is crucial for practical usage. Recently, point cloud geometry compression approaches based on deep neural networks have been explored. In this paper, we evaluate how well typical voxel-based loss functions used to train these networks predict perceptual quality. We find that the commonly used focal loss and weighted binary cross entropy are poorly correlated with human perception. We thus propose a perceptual loss function for 3D point clouds which outperforms existing loss functions on the ICIP2020 subjective dataset. In addition, we propose a novel truncated distance field voxel grid representation and find that, compared to a binary representation, it leads to sparser latent spaces and to loss functions that are more correlated with perceived visual quality. The source code is available at https://github.com/mauriceqch/2021_pc_perceptual_loss.
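
To make the two baseline losses named above concrete, the sketch below evaluates them on a flattened binary occupancy grid. It follows the commonly cited forms of focal loss and class-weighted binary cross entropy; the function names, the default `alpha`, `gamma` and `pos_weight` values, and the exact weighting scheme are assumptions for illustration and may differ from what the paper and its repository use.

```python
import math

EPS = 1e-7  # keeps log() away from zero


def _clip(p):
    return min(max(p, EPS), 1.0 - EPS)


def weighted_bce(occupancy, predicted, pos_weight=0.75):
    """Mean class-weighted binary cross entropy over voxels.

    occupancy: 0/1 ground-truth voxel labels; predicted: occupancy probabilities.
    """
    total = 0.0
    for y, p in zip(occupancy, predicted):
        p = _clip(p)
        p_t = p if y == 1 else 1.0 - p            # probability given to the true class
        w = pos_weight if y == 1 else 1.0 - pos_weight
        total += -w * math.log(p_t)
    return total / len(occupancy)


def focal_loss(occupancy, predicted, alpha=0.75, gamma=2.0):
    """Mean focal loss: weighted BCE scaled by (1 - p_t)**gamma to down-weight easy voxels."""
    total = 0.0
    for y, p in zip(occupancy, predicted):
        p = _clip(p)
        p_t = p if y == 1 else 1.0 - p
        a_t = alpha if y == 1 else 1.0 - alpha
        total += -a_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(occupancy)
```

Both losses treat every voxel independently, which may help explain why they correlate poorly with perceived quality: a single misplaced voxel costs the same whether it lies next to the true surface or far from it.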

The majority of internet traffic is video content. This drives the demand for video compression in order to deliver high quality video at low target bitrates. This paper investigates the impact of adjusting the rate distortion equation on compression performance. A constant of proportionality, k, is used to modify the Lagrange multiplier used in H.265 (HEVC). Direct optimisation methods are deployed to maximise BD-Rate improvement for a particular clip. This yields a BD-Rate improvement of up to 21% for an individual clip. Furthermore, we use a more realistic corpus of material provided by YouTube. The results show that direct optimisation using BD-Rate as the objective function can lead to further gains in bitrate savings that are not available with previous approaches.
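
The role of k can be illustrated with the usual Lagrangian rate-distortion cost, J = D + λR, in which the encoder's default multiplier λ is replaced by kλ. The sketch below is a toy mode decision under that assumption; the function names, the candidate tuples and the numbers are hypothetical and are not taken from the paper or from any HEVC encoder.

```python
def rd_cost(distortion, rate, lam, k=1.0):
    """Lagrangian cost J = D + (k * lambda) * R; k = 1 reproduces the default multiplier."""
    return distortion + k * lam * rate


def best_mode(candidates, lam, k=1.0):
    """Pick the candidate with the lowest cost.

    candidates: iterable of (name, distortion, rate) tuples (hypothetical values).
    """
    return min(candidates, key=lambda c: rd_cost(c[1], c[2], lam, k))[0]


# Two made-up coding choices: "skip" is cheap but lossy, "intra" is costly but accurate.
modes = [("skip", 100.0, 10.0), ("intra", 60.0, 20.0)]
print(best_mode(modes, lam=2.0, k=1.0))  # intra: 60 + 2*20 = 100 < 100 + 2*10 = 120
print(best_mode(modes, lam=2.0, k=3.0))  # skip: 100 + 6*10 = 160 < 60 + 6*20 = 180
```

Because a larger k penalises rate more heavily, it shifts decisions toward cheaper modes. A per-clip search over k, scored by BD-Rate, is the kind of direct optimisation the abstract describes.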