Evaluating the spatial frequency response (SFR) in natural scenes is crucial for understanding camera system performance and its implications for image quality in applications such as machine learning and automated recognition. The Natural Scene derived Spatial Frequency Response (NS-SFR) was a significant advance because it assesses camera performance directly from captured scenes, removing the dependence on test charts that has traditionally limited SFR measurement. However, existing NS-SFR methods still suffer from restricted angular coverage and susceptibility to noise, both of which undermine measurement accuracy. In this paper, we propose a methodology that enhances NS-SFR in two ways: an adaptive oversampling rate (OSR) and phase shift (PS) broaden the angular coverage, and a newly developed adaptive window technique reduces the impact of noise, yielding more reliable results. Through simulations compared against theoretical modulation transfer function (MTF) values, as well as measurements in natural scenes, we demonstrate that the proposed approach addresses the shortcomings of existing methods and offers a more accurate representation of camera performance in natural scenes.
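
The link between a fixed OSR and restricted angular coverage can be made concrete with a minimal sketch of an ISO 12233-style slanted-edge SFR. It assumes a noise-free synthetic edge, a fixed OSR of 4, per-row centroid edge location, a Hamming window on the line spread function (LSF), and no derivative correction. All function names are hypothetical, and this is not the authors' adaptive OSR/PS or adaptive-window method. The `bin_counts` helper shows the angular problem: when the edge is nearly aligned with the pixel grid, every row samples it at the same phase, so most 1/OSR bins of the oversampled edge spread function (ESF) receive no pixels.

```python
import math
import numpy as np


def synthetic_edge(angle_deg, size=64, sigma=1.0, lo=0.2, hi=0.8):
    """Noise-free near-vertical step edge tilted by angle_deg (from vertical),
    blurred by a Gaussian PSF of std `sigma` pixels and point-sampled."""
    t = math.radians(angle_deg)
    rr, cc = np.mgrid[0:size, 0:size].astype(float)
    c0 = r0 = (size - 1) / 2
    d = (cc - c0) * math.cos(t) - (rr - r0) * math.sin(t)  # signed distance to edge
    erf = np.vectorize(math.erf)
    return lo + (hi - lo) * 0.5 * (1.0 + erf(d / (sigma * math.sqrt(2.0))))


def project(shape, slope, intercept):
    """Perpendicular distance of each pixel centre to the edge x = slope*y + intercept."""
    rr, cc = np.mgrid[0:shape[0], 0:shape[1]]
    return (cc - (slope * rr + intercept)) / math.hypot(1.0, slope)


def bin_index(dist, osr):
    """Zero-based index of the 1/osr-pixel bin each projected pixel falls into."""
    idx = np.floor(dist * osr).astype(int).ravel()
    return idx - idx.min()


def bin_counts(dist, osr):
    """Pixels per bin; zeros mean the edge angle leaves gaps in the oversampled ESF."""
    return np.bincount(bin_index(dist, osr))


def slanted_edge_sfr(img, osr=4):
    """Return (frequencies in cycles/pixel, normalised SFR, number of empty bins)."""
    rows, cols = img.shape
    # 1. Edge position in each row: centroid of the horizontal derivative.
    grad = np.abs(np.diff(img, axis=1))
    x = np.arange(cols - 1) + 0.5
    centroids = (grad * x).sum(axis=1) / grad.sum(axis=1)
    # 2. Least-squares line through the centroids: x = slope*row + intercept.
    slope, intercept = np.polyfit(np.arange(rows), centroids, 1)
    # 3. Project pixels onto the edge normal and average them in 1/osr bins (ESF).
    idx = bin_index(project(img.shape, slope, intercept), osr)
    counts = np.bincount(idx)
    sums = np.bincount(idx, weights=img.ravel())
    filled = np.flatnonzero(counts)
    # Empty bins are filled by linear interpolation (a simple stopgap only).
    esf = np.interp(np.arange(counts.size), filled, sums[filled] / counts[filled])
    # 4. LSF by central difference, centred on its peak and Hamming-windowed.
    lsf = np.gradient(esf)
    lsf = np.roll(lsf, lsf.size // 2 - int(np.argmax(np.abs(lsf))))
    lsf *= np.hamming(lsf.size)
    # 5. SFR: FFT magnitude normalised to 1 at zero frequency.
    spec = np.abs(np.fft.rfft(lsf))
    freqs = np.fft.rfftfreq(lsf.size, d=1.0 / osr)
    return freqs, spec / spec[0], int(np.count_nonzero(counts == 0))
```

For a 5° edge, `slanted_edge_sfr(synthetic_edge(5.0))` should leave no empty bins and return an SFR close to the Gaussian MTF exp(-2π²σ²f²). By contrast, `bin_counts` on a perfectly vertical (0°) edge leaves about three quarters of the bins empty at OSR 4, which is the kind of gap an adaptive OSR and phase shift are meant to avoid.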

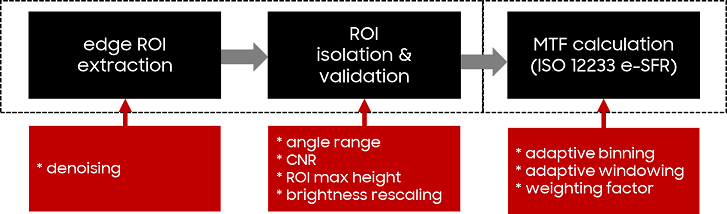

Recent research on digital camera performance evaluation introduced the Natural Scene Spatial Frequency Response (NS-SFR) framework, which was shown to provide a measure comparable to the ISO 12233 edge SFR (e-SFR) but derived outside laboratory conditions. The framework extracts step-edges captured in pictorial natural scenes to evaluate the camera SFR, and it has two parts. The first uses the ISO 12233 slanted-edge algorithm to produce an ‘envelope’ of NS-SFRs; the second estimates the system e-SFR from these NS-SFR data. One drawback of the methodology as proposed has been its computation time. The process was not optimized: it first derived NS-SFRs from all suitable step-edges and only then validated and statistically treated the results to estimate the e-SFR. This paper presents changes to the framework's processes that aim to reduce computation time so that the framework is practical for real-world implementation. The developments include an improved framework structure, a pixel-stretching filter alternative, and the ability to use Graphics Processing Unit (GPU) acceleration. In addition, the methodology was updated to use the latest e-SFR algorithm implementation. The resulting code has been incorporated into a self-executable user interface prototype, available on GitHub. Future goals include making it an open-access, cloud-based solution for scientists, camera evaluation labs and the general public.
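
The abstract does not detail how the framework was restructured for GPU acceleration, so the following is only a hedged illustration of why such acceleration pays off when many step-edges are processed. The per-edge steps after binning (centring the LSF, windowing, FFT) are written as array operations over all edges at once instead of a Python loop per edge. It assumes every LSF has already been resampled to a common length; `sfr_batch` and its `xp` parameter are hypothetical names, and running it on a GPU assumes a NumPy-compatible array library such as CuPy that mirrors the NumPy functions used here.

```python
import numpy as np


def sfr_batch(lsfs, osr=4, xp=np):
    """SFRs for a stack of LSFs (n_edges x n_samples) computed in one pass.

    `xp` is the array module: numpy by default, or (assumption) a
    NumPy-compatible GPU library such as CuPy with `lsfs` already on the GPU.
    """
    n = lsfs.shape[1]
    # Circularly shift every LSF so its peak sits at index n // 2 (a per-row
    # np.roll, done as one gather instead of a Python loop).
    peaks = xp.argmax(xp.abs(lsfs), axis=1)
    cols = (xp.arange(n)[None, :] + peaks[:, None] - n // 2) % n
    centred = xp.take_along_axis(lsfs, cols, axis=1)
    # The same Hamming window, broadcast across all edges.
    windowed = centred * xp.hamming(n)[None, :]
    # One batched FFT along the sample axis; normalise each row at DC.
    spec = xp.abs(xp.fft.rfft(windowed, axis=1))
    freqs = xp.fft.rfftfreq(n, d=1.0 / osr)
    return freqs, spec / spec[:, :1]
```

The batched result matches a per-edge loop of `np.roll`, `np.hamming` and `np.fft.rfft`; the design choice is simply to move that loop into array operations, which is what makes offloading to a GPU worthwhile when thousands of step-edges are extracted from a scene.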