High dynamic range (HDR) scenes are known to be challenging for most cameras. The most common artifacts of poor HDR scene rendition are clipped bright areas and noisy dark regions, which make images look unnatural and unpleasant. This paper introduces a novel methodology for automating the perceptual evaluation of detail rendition in these extreme regions of the histogram for images of natural scenes. The key contributions are 1) the construction of a robust database annotated with Just Objectionable Distance (JOD) scores, incorporating annotator outlier detection, and 2) a multitask convolutional neural network (CNN) model that effectively addresses the diverse contexts and region-of-interest challenges inherent in natural scenes. Our experimental evaluation demonstrates that the model's predictions align strongly with human evaluations. The adaptability of our model makes it a valuable tool for consistent camera performance evaluation, contributing to the continuous evolution of smartphone technologies.
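
The abstract does not state how the JOD scores are derived. As background only, JOD scales are commonly built from pairwise-comparison data under a Thurstone Case V observer model, with the convention that a difference of 1 JOD corresponds to 75% of observers preferring the better image. The minimal Python sketch below assumes that convention and converts between JOD differences and preference probabilities; the function names are illustrative and not taken from the paper.

```python
from statistics import NormalDist

# Standard normal distribution used by the Thurstone Case V observer model.
STD_NORMAL = NormalDist(mu=0.0, sigma=1.0)

# Assumed convention: a 1-JOD difference means 75% preference,
# so differences are scaled by the 0.75 quantile of N(0, 1) (about 0.6745).
JOD_SCALE = STD_NORMAL.inv_cdf(0.75)


def jod_to_preference(delta_jod: float) -> float:
    """Probability that image A is preferred over image B when A scores delta_jod JOD higher."""
    return STD_NORMAL.cdf(delta_jod * JOD_SCALE)


def preference_to_jod(p: float) -> float:
    """JOD difference implied by a preference probability p, with 0 < p < 1."""
    return STD_NORMAL.inv_cdf(p) / JOD_SCALE
```

Under this convention a 1-JOD gap maps to a preference probability of 0.75, and a 90% preference corresponds to roughly 1.9 JOD.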

This study investigates how a specific type of distortion in imaging pipelines, namely read noise, affects the performance of an automatic license plate recognition algorithm. We first evaluated a pretrained three-stage license plate recognition algorithm on undistorted license plate images. Subsequently, we applied 15 different levels of read noise using a well-known imaging pipeline simulation tool and assessed recognition performance on the distorted images. Our analysis reveals that recognition accuracy decreases as the level of read noise in the imaging pipeline increases. However, contrary to expectations, we observed that a small amount of noise can increase vehicle detection accuracy, particularly for the YOLO-based vehicle detection module. The component of the automatic license plate recognition system that is most prone to errors and most affected by read noise is the optical character recognition module. The results highlight the importance of considering imaging pipeline distortions when designing and deploying automatic license plate recognition systems.
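
The abstract does not name the simulation tool or its noise parameters. As an illustration only, read noise is commonly modelled as zero-mean, signal-independent Gaussian noise, expressed in electrons, added to the sensor signal before analogue-to-digital conversion. The Python sketch below assumes a linear input image in [0, 1]; the full-well capacity, bit depth, and the 15 noise levels are hypothetical values, not those used in the study.

```python
import numpy as np


def add_read_noise(image, sigma_e, full_well_e=10_000.0, bit_depth=10, rng=None):
    """Add zero-mean Gaussian read noise to a linear image with values in [0, 1].

    sigma_e: read-noise standard deviation in electrons (hypothetical units)
    full_well_e: electrons corresponding to a pixel value of 1.0 (assumed)
    bit_depth: ADC bit depth used for the final quantization (assumed)
    """
    rng = np.random.default_rng() if rng is None else rng
    electrons = np.asarray(image, dtype=np.float64) * full_well_e
    # Read noise is signal-independent, so the same distribution is added to every pixel.
    electrons = electrons + rng.normal(0.0, sigma_e, size=electrons.shape)
    # Clip to the valid sensor range, then quantize to integer ADC codes.
    max_code = 2**bit_depth - 1
    codes = np.round(np.clip(electrons / full_well_e, 0.0, 1.0) * max_code)
    return codes / max_code


# Hypothetical sweep: 15 read-noise levels from 0 to 70 electrons on a flat grey patch.
read_noise_levels = np.linspace(0.0, 70.0, 15)
clean = np.full((32, 32), 0.5)
noisy_images = [add_read_noise(clean, s, rng=np.random.default_rng(0)) for s in read_noise_levels]
```

In an experiment like the one described, each noisy image would then be passed through the detection, localisation, and OCR stages, and accuracy recorded per noise level.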

Retinex is a theory of colour vision and also a well-known image enhancement algorithm. Retinex algorithms reported in the literature are often classified as path-based or centre-surround. In the path-based approach, an image is processed by calculating (reintegrating along) paths in proximate image regions and averaging over the paths. Centre-surround algorithms convolve an image (in log units) with a large-scale centre-surround-type operator. Both types of Retinex algorithm map a high dynamic range image to a lower-range counterpart suitable for display, and both are also proposed as methods that simultaneously enhance an image for preference. In this paper, we reformulate one of the most common variants of the path-based approach and show that it can be recast as a centre-surround algorithm at multiple scales. Significantly, our new method processes images more quickly and is potentially biologically plausible. To the extent that path-based Retinex produces pleasing images, our method produces equivalent outputs. Experiments validate our method.
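
The abstract does not give the reformulated operator itself. For orientation only, the generic centre-surround family it refers to can be sketched as follows: at each scale the log image is convolved with a centre-minus-surround kernel (each pixel's log value minus a Gaussian-weighted average of its neighbourhood), and the scales are averaged. This is the classic multi-scale centre-surround form, not the authors' path-based equivalent; the Gaussian surround and the scale values are assumptions.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def centre_surround_retinex(image, sigmas=(15.0, 80.0, 250.0), eps=1e-6):
    """Multi-scale centre-surround Retinex on a single-channel linear image.

    At each scale the log image is convolved with (delta - Gaussian), i.e. each
    pixel's log value minus a Gaussian-weighted average of its surround.
    sigmas are surround widths in pixels (hypothetical values).
    """
    log_img = np.log(np.asarray(image, dtype=np.float64) + eps)
    out = np.zeros_like(log_img)
    for sigma in sigmas:
        surround = gaussian_filter(log_img, sigma=sigma, mode="reflect")
        out += log_img - surround
    # Equal-weight average over scales.
    return out / len(sigmas)
```

Because the operator works on log values and the surround weights sum to one, multiplying the input by a constant (a global illuminant change) leaves the output unchanged when `eps` is 0. The output is in log units and still needs a final mapping to display values.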

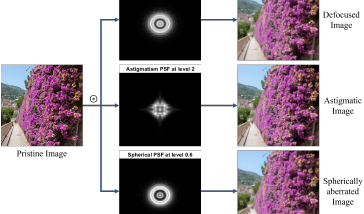

For image quality assessment, diverse databases are vital for the development and evaluation of image quality metrics. Existing databases have played an important role in advancing the understanding of various types of distortion and in evaluating image quality metrics. However, they lack a comprehensive representation of optical aberrations and their impact on image quality. This paper addresses this gap by introducing a novel image quality database that focuses on optical aberrations. We conduct a subjective experiment to capture human perceptual responses to a set of images with optical aberrations. We then test how well selected objective image quality metrics assess these aberrations. This approach not only ensures the relevance of our database to real-world scenarios but also helps to verify the performance of the selected image quality metrics. The database is available for download at https://www.ntnu.edu/colourlab/software.
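
The abstract does not specify how metric performance is measured. A common choice in image quality assessment is to compare objective metric scores against subjective mean opinion scores (MOS) using the Pearson linear correlation coefficient (PLCC, for accuracy) and the Spearman rank-order correlation coefficient (SROCC, for monotonicity). A minimal Python sketch, assuming one metric score and one MOS per distorted image:

```python
import numpy as np
from scipy.stats import pearsonr, spearmanr


def evaluate_metric(metric_scores, mos):
    """Return (PLCC, SROCC) between objective metric scores and subjective MOS."""
    metric_scores = np.asarray(metric_scores, dtype=np.float64)
    mos = np.asarray(mos, dtype=np.float64)
    if metric_scores.shape != mos.shape:
        raise ValueError("metric_scores and mos must have the same length")
    plcc = pearsonr(metric_scores, mos)[0]   # linear agreement
    srocc = spearmanr(metric_scores, mos)[0]  # rank-order (monotonic) agreement
    return float(plcc), float(srocc)
```

Many studies first fit a monotonic (often logistic) mapping from metric scores to MOS before computing PLCC; that step is omitted here. For a metric that ranks images perfectly but nonlinearly, SROCC is 1 while PLCC is below 1.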

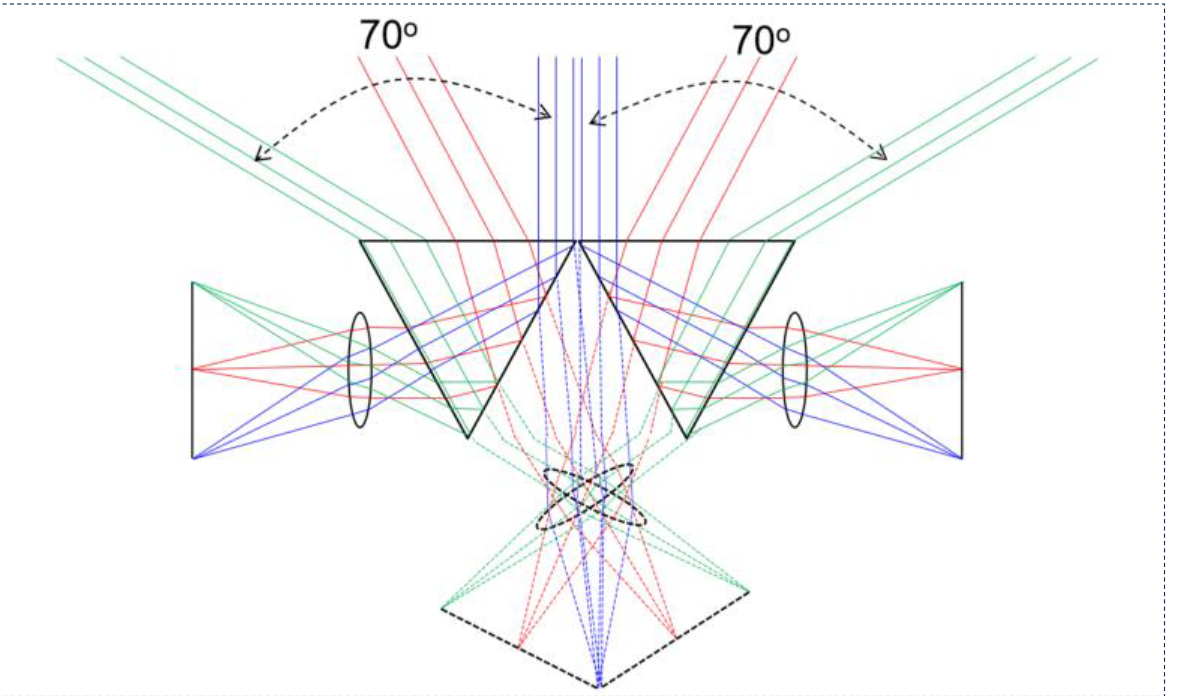

We introduce a cutting-edge true panoramic camera (TPC) designed for mobile smartphone applications. Leveraging prism optics and well-known image processing algorithms, our camera achieves parallax-free, seamless stitching of images captured by dual cameras pointing in two different directions opposite to the normal. The result is an ultra-wide (140° × 53°) panoramic field of view (FOV) without the optical distortions typically associated with ultra-wide-angle lenses. Packed into a compact camera module measuring 22 mm (length) × 11 mm (width) × 9 mm (height) and integrated into a mobile testing platform featuring the Qualcomm Snapdragon® 8 Gen 1 processor, the TPC demonstrates the unprecedented capability to capture panoramic pictures in a single shot and to record panoramic videos.

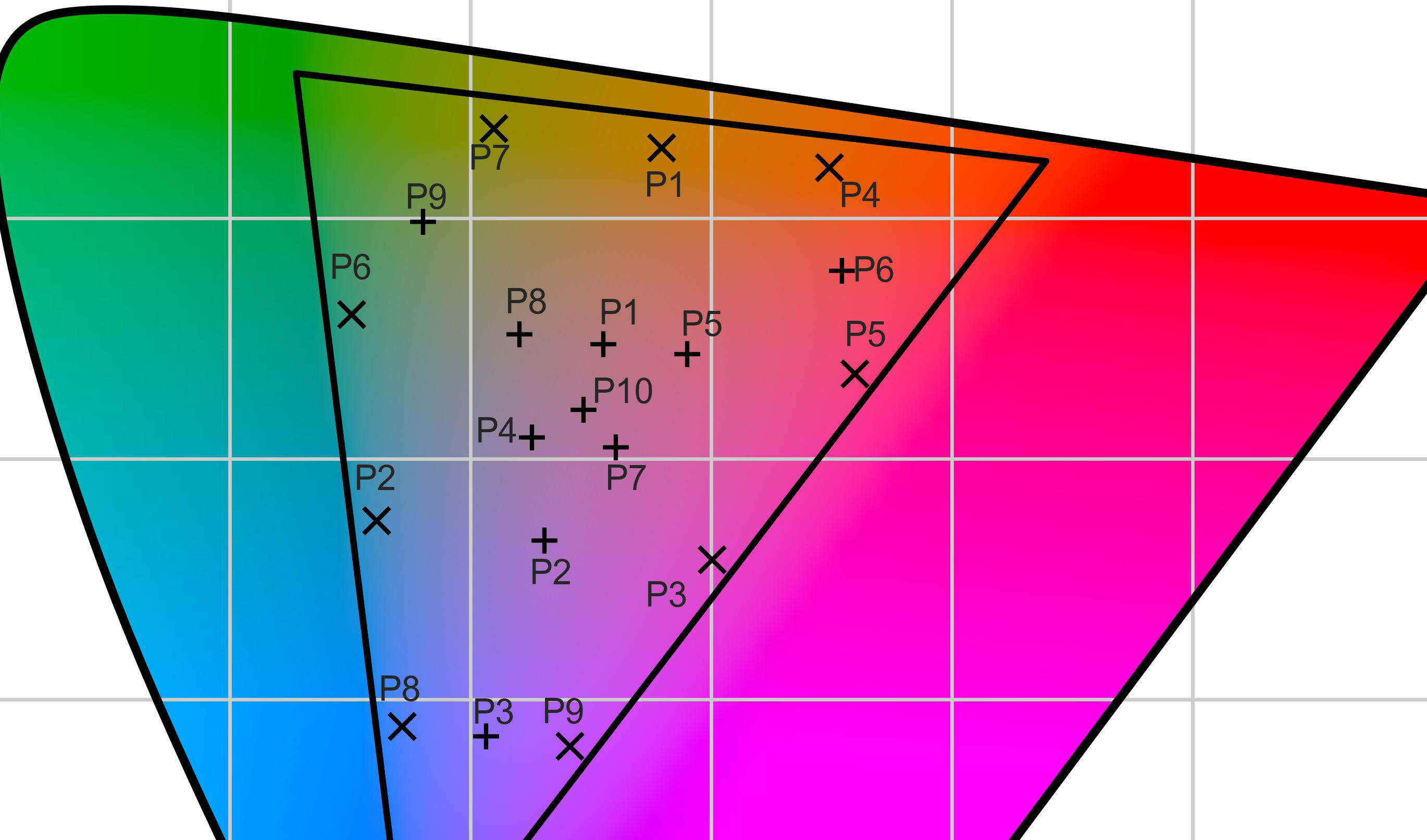

Virtual reality, which is increasingly popular across various scientific research domains, serves as a powerful tool for conducting colour science experiments because it can present naturalistic scenes under controlled conditions. In this paper, we propose a systematic approach for characterising the colorimetric profile of a head-mounted display. First, a commercially available head-mounted display, the Meta Quest 2, was characterised with the aid of a colorimetric luminance camera. Afterwards, we investigated the suitability of four different models (Look-up Table, Polynomial Regression, Artificial Neural Network, and Gain-Gamma-Offset) for predicting the colorimetric features of the head-mounted display.
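
The abstract names the four candidate models without giving their forms. As an illustration of one of them, a second-order polynomial regression from normalised RGB drive values to measured CIE XYZ can be fitted by ordinary least squares. The term set, the normalisation to [0, 1], and the function names below are assumptions, not the paper's configuration.

```python
import numpy as np


def poly_terms(rgb):
    """Expand normalised RGB values (N x 3) into second-order polynomial terms (N x 10)."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[:, 0], rgb[:, 1], rgb[:, 2]
    return np.column_stack(
        [np.ones_like(r), r, g, b, r * g, r * b, g * b, r**2, g**2, b**2]
    )


def fit_polynomial_model(rgb, xyz):
    """Least-squares coefficients M (10 x 3) such that poly_terms(rgb) @ M approximates xyz."""
    xyz = np.asarray(xyz, dtype=np.float64)
    coeffs, *_ = np.linalg.lstsq(poly_terms(rgb), xyz, rcond=None)
    return coeffs


def predict_xyz(coeffs, rgb):
    """Predict XYZ for new RGB drive values with a fitted coefficient matrix."""
    return poly_terms(rgb) @ coeffs
```

In a characterisation of this kind, `rgb` would hold the displayed patch values and `xyz` the corresponding camera measurements, with prediction accuracy assessed on held-out patches, for example with a colour-difference formula such as CIEDE2000.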

When colors are visualized on websites or in apps, color calibration is not feasible for consumer smartphones and tablets: the vast majority of consumers do not have the time, equipment, or expertise to calibrate their displays. For such situations we recently developed MDCIM (Mobile Display Characterization and Illumination Model). Using optics-based image processing, it aims to improve digital color representation as assessed by human observers, taking into account display-specific parameters and local lighting conditions. In previous publications we determined model parameters for four mobile displays: one OLED display (Samsung Galaxy S4) and three LCD displays (iPad Air 2 and the iPad models from 2017 and 2018). Here, we investigate the performance of another OLED display, that of the iPhone XS Max. Using a psychophysical experiment, we show that colors generated by the MDCIM method are perceived as a much better match to physical color samples than colors generated by the default method, which is based on the sRGB space and the color management system implemented by the smartphone manufacturer. With the MDCIM method, the percentage of reasonable-to-good color matches increases from 3.1% to 85.9%, while the percentage of incorrect color matches drops from 83.8% to 3.6%.

Abstractly, a tone curve can be thought of as an increasing function of input brightness which, when applied to an image, produces a rendered output that is ready for display and preferred. However, the shape of the tone curve is not arbitrary. Curves that are too steep or too shallow (which concomitantly result in too much or too little contrast) are not preferred. Thus, tone curve generation algorithms often constrain the shape of the tone curves they generate. Recently, it was argued that tone curves should, as well as being limited in their slopes, have at most one inflexion point. In this paper, we propose that this inflexion-point requirement should be strengthened further. Indeed, the single-inflexion-point constraint still admits curves with sharp changes in slope (which are sometimes the culprits of banding artefacts in images). Thus, we develop a novel optimisation framework that additionally ensures sharp changes in the tone curves are smoothed out (technically, mollified). Our even simpler tone curves are shown to render most real images visually similar to those rendered without the constraints. Experiments validate our method.
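
The abstract does not give the optimisation itself. To illustrate what mollification means for a sampled tone curve, the Python sketch below convolves the curve with a smooth, compactly supported bump kernel. Because the kernel is non-negative and sums to one, an increasing curve stays increasing, while an abrupt slope change is spread over the kernel width. This post-hoc smoothing is a stand-in chosen for illustration, not the authors' constrained optimisation; the kernel half-width and the edge padding are assumptions.

```python
import numpy as np


def bump_kernel(half_width):
    """Discrete mollifier: exp(-1 / (1 - t^2)) sampled strictly inside (-1, 1), summing to 1."""
    t = np.linspace(-1.0, 1.0, 2 * half_width + 3)[1:-1]  # drop the zero-valued endpoints
    k = np.exp(-1.0 / (1.0 - t**2))
    return k / k.sum()


def mollify_curve(curve, half_width=8):
    """Smooth a sampled tone curve; edge padding keeps the output length unchanged."""
    kernel = bump_kernel(half_width)
    padded = np.pad(np.asarray(curve, dtype=np.float64), half_width, mode="edge")
    return np.convolve(padded, kernel, mode="valid")


# A monotone tone curve with a sharp slope change (a kink) at x = 0.5.
x = np.linspace(0.0, 1.0, 256)
kinked = np.where(x < 0.5, 0.4 * x, 0.2 + 1.6 * (x - 0.5))
smooth = mollify_curve(kinked)
```

Comparing `np.abs(np.diff(curve, 2)).max()` for `kinked` and `smooth` shows the reduced slope jump. One side effect of this simple version is that the endpoints are pulled slightly toward interior values, which a full optimisation framework would need to control.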