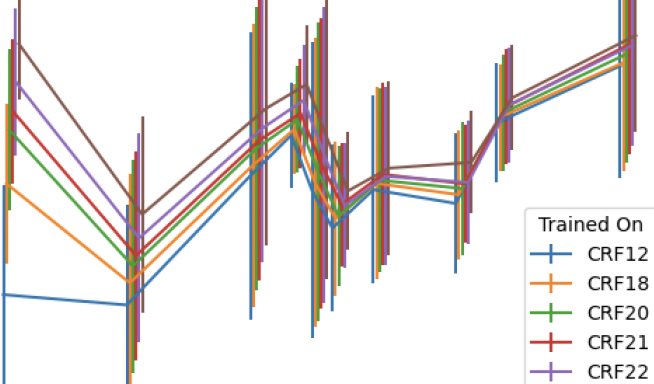

Remote photoplethysmography (rPPG) is a camera-based technique for estimating a subject's heart rate from video. It exploits the difference in light reflection between blood-dense tissue and other tissue by detecting small variations in the color of RGB pixels on skin. While often imperceptible to the human eye, these subtle changes are readily detectable in high-quality video. Working with high-quality video presents challenges because of the storage space required to house it, the computing power needed to analyze it, and the time required to transport it. These problems can potentially be mitigated through compression, but modern compression algorithms are not designed to preserve the small pixel intensity variations within or between frames that rPPG algorithms need. When provided with compressed videos, rPPG algorithms are therefore left with less information and may predict heart rates less accurately. We examine the effects of compression on deep learning rPPG with multiple commonly used compression codecs (H.264, H.265, and VP9). This is done at a variety of rate factors and frame rates to determine the threshold up to which compressed video still yields a valid heart rate. These compression techniques are applied to multiple public rPPG datasets (DDPM and PURE). We find that rPPG trained on lossless data effectively fails when evaluated on data compressed at constant rate factors (CRFs) of 22 and higher for both H.264 and H.265, and at a constant-quality mode CRF above 37 for VP9. We find that training on compressed data yields less accurate results than training on lossless or less compressed data. We did not find any specific benefit to training and testing on data compressed at identical compression levels.
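As an illustration of the kind of codec, rate factor, and frame rate sweep described above, the following is a minimal sketch that builds encoder command lines. It assumes the `ffmpeg` command-line tool with the `libx264`, `libx265`, and `libvpx-vp9` encoders; the abstract does not state which tool was used, and the function name `build_ffmpeg_cmd`, the file names, and the example CRF values are hypothetical.

```python
# Sketch only: assumes ffmpeg is the encoding tool (not stated in the abstract).
# build_ffmpeg_cmd and all file names are hypothetical, for illustration.

ENCODERS = {"h264": "libx264", "h265": "libx265", "vp9": "libvpx-vp9"}


def build_ffmpeg_cmd(src, dst, codec, crf, fps=None):
    """Return an ffmpeg argument list that re-encodes src at a given CRF."""
    if codec not in ENCODERS:
        raise ValueError(f"unsupported codec: {codec}")
    cmd = ["ffmpeg", "-y", "-i", src, "-c:v", ENCODERS[codec], "-crf", str(crf)]
    if codec == "vp9":
        # libvpx-vp9 uses constant-quality mode when the target bitrate is 0.
        cmd += ["-b:v", "0"]
    if fps is not None:
        # Optionally resample the output to a different frame rate.
        cmd += ["-r", str(fps)]
    cmd += ["-an", dst]  # drop audio; only the video stream matters here
    return cmd


if __name__ == "__main__":
    # Print a small sweep; pass each list to subprocess.run to actually encode.
    for codec, ext in [("h264", "mp4"), ("h265", "mp4"), ("vp9", "webm")]:
        for crf in (12, 22, 37):
            out = f"subject01_{codec}_crf{crf}.{ext}"
            print(" ".join(build_ffmpeg_cmd("subject01_lossless.avi", out, codec, crf)))
```

Building the argument list separately from running it keeps the sweep easy to inspect before committing to a long batch of encodes.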

Biometric authentication takes many forms. Among the most researched are fingerprint and facial authentication. Because of the amount of research in these areas, benchmark datasets are readily accessible to new researchers evaluating new systems. A newer, less researched biometric method is lip motion authentication. In these systems, a user produces a lip motion password to authenticate, meaning they must utter the same word or phrase to gain access. Because this method is less researched, there is no large-scale dataset that can be used to compare methods or to determine the actual level of security they provide. We propose an automated dataset collection pipeline that extracts a lip motion authentication dataset from collections of videos. This pipeline will enable the collection of large-scale datasets for this problem, thus advancing the capability of lip motion authentication systems.

As facial authentication systems become an increasingly widespread technology, their subtle inaccuracy for certain subgroups grows in importance. As researchers perform data augmentation to increase subgroup accuracy, it is critical that the data augmentation approaches are understood. We specifically study the impact that racial transformation, used as a data augmentation method, has upon the identity of the individual according to a facial authentication network. This shows whether racial transformation maintains critical aspects of an individual's identity or whether the augmentation creates the equivalent of an entirely new individual for networks to train upon. We present our method for racial transformation, which is based on methods from prior research, display the embedding distance distribution of augmented faces compared with that of non-augmented faces, and explain to what extent racial transformation maintains critical aspects of an individual's identity.
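The embedding distance comparison mentioned above can be sketched as follows. This is a minimal illustration that assumes cosine distance between face embeddings; the abstract does not say which distance metric or network is used, and the function names and toy vectors below are hypothetical.

```python
# Sketch only: cosine distance is an assumed metric, and the embeddings
# below are toy vectors rather than outputs of a real face network.
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return 1.0 - dot / (norm_a * norm_b)


def pairwise_distances(originals, augmented):
    """Distance between each original embedding and its augmented counterpart."""
    return [cosine_distance(o, a) for o, a in zip(originals, augmented)]


if __name__ == "__main__":
    originals = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    augmented = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.8]]
    print(pairwise_distances(originals, augmented))
```

Comparing the resulting distribution with the distances between non-augmented images of the same person, and between different people, indicates whether an augmented face still falls within the same identity.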

DeepFakes are a recent trend in computer vision, posing a threat to the authenticity of digital media. Neural network based approaches are the most prominent means of detecting DeepFakes. Because of their black-box nature, those detectors often lack explanatory power as to why a given decision was made. Furthermore, taking the social, ethical and legal perspective into account (e.g. the European Commission's upcoming Artificial Intelligence Act), black-box decision methods should be avoided and human oversight should be guaranteed. In terms of explainability of AI systems, many approaches rely on post-hoc visualization methods (e.g. by back-propagation) or on the reduction of complexity. In our paper a different approach is used, combining hand-crafted and neural network based components that analyze the same phenomenon to aim for explainability. The semantic phenomenon chosen as an example here is the eye blinking behavior in a genuine or DeepFake video. Furthermore, the impact of video duration on the classification result is evaluated empirically, so that a minimum duration threshold can be set for reasonable DeepFake detection.