Urban governance is vital for managing cities efficiently, promoting sustainable development, and improving residents' quality of life. In urban governance, the San Antonio Research Partnership Portal stands as a groundbreaking initiative that fosters collaboration among diverse city entities and leverages innovative smart applications. This paper focuses on the portal's ability to facilitate strategic alignment among city departments, integrate public feedback, and streamline collaboration with academic institutions. Through technical insights and real-world case studies, the paper underscores the portal’s role in enhancing municipal responsiveness, improving decision-making processes, and demonstrating how smart applications that use artificial intelligence can support effective city management and community engagement.

In the era of data-driven decision-making, cities and communities increasingly seek effective ways to gather insights from public feedback and comments to shape their research and development initiatives. Town hall community meetings give citizens a valuable platform to express their opinions, concerns, and ideas about many aspects of city life. In this study, we explore how effectively different keyword extraction tools and similarity matching algorithms match town hall community comments with city strategic plans and current research opportunities. We use KPMiner, TopicRank, MultipartiteRank, and KeyBERT for keyword extraction, and we evaluate cosine similarity, word embedding similarity, and BERT-based similarity for matching the extracted keywords. By combining these techniques, we aim to bridge the gap between community feedback and research initiatives, enabling data-driven decision-making in urban development. Our findings will provide valuable insights for more inclusive and informed strategies that help ensure citizen opinions and concerns are incorporated into city planning and development efforts.
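The cosine-similarity matching step can be illustrated with a minimal sketch. It assumes that each comment and each plan or research opportunity has already been reduced to a keyword list, for example by one of the extractors named above. It builds exact-match bag-of-keywords vectors and ranks candidate documents by cosine similarity. The keyword lists, document names, and helper names (`keyword_vector`, `rank_matches`) are hypothetical. The word-embedding and BERT-based variants would replace these count vectors with dense embeddings.

```python
from collections import Counter
import math


def keyword_vector(keywords):
    """Bag-of-keywords term-frequency vector (keywords are lowercased)."""
    return Counter(k.lower() for k in keywords)


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty keyword list matches nothing
    return dot / (norm_a * norm_b)


def rank_matches(comment_keywords, documents):
    """Rank documents (name -> keyword list) by similarity to one comment."""
    query = keyword_vector(comment_keywords)
    scores = {
        name: cosine_similarity(query, keyword_vector(kws))
        for name, kws in documents.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical example: one town hall comment against two plan documents.
comment = ["public transit", "bus routes", "sidewalks"]
plans = {
    "Transportation Plan": ["public transit", "bus routes", "bike lanes"],
    "Housing Plan": ["affordable housing", "zoning"],
}
print(rank_matches(comment, plans))
```

Exact string matching treats "bus routes" and "bus lines" as unrelated. This limitation is why embedding-based similarity is worth comparing against plain cosine similarity.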

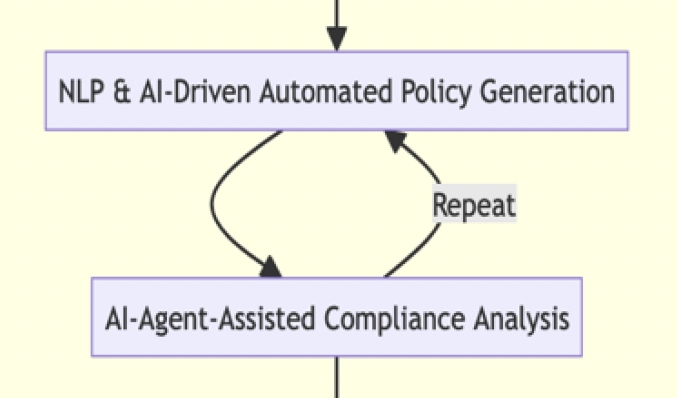

This paper introduces AI-based Cybersecurity Management Consulting (AI-CMC) as a disruptive technology for addressing increasingly complex cybersecurity threats. AI-CMC combines advanced AI techniques with cybersecurity management, offering proactive and adaptive strategies through machine learning, natural language processing, and big data analytics. It enables real-time threat detection, predictive analytics, and intelligent decision-making. The paper explores AI-CMC's data-driven approach, learning models, and collaborative framework, demonstrating its potential to revolutionize cybersecurity. It examines AI-CMC's benefits, challenges, and ethical considerations, emphasizing transparency and bias mitigation. It also discusses a roadmap for transitioning to AI-CMC and the implications for industry standards, policies, and global strategies. Despite potential limitations and vulnerabilities, AI-CMC offers transformative solutions for enhancing threat resilience and safeguarding digital assets. Realizing these benefits calls for collaborative efforts and responsible use to secure the digital future.

The modulation transfer function (MTF) is a fundamental metric for measuring the optical quality of an imaging system. In the automotive industry, it is used to qualify camera systems for ADAS/AD. Every modern ADAS/AD system includes evaluation algorithms for environment perception and decision-making that are based on AI/ML methods and neural networks. The performance of these AI algorithms is measured with established metrics such as Average Precision (AP) or precision-recall curves. In this article, we investigate how robust the link is between the optical quality metric and the AI performance metric. We performed a series of numerical experiments with object detection and instance segmentation algorithms (cars, pedestrians) evaluated on image databases of varying optical quality. These experiments show that strong optical aberrations cause a distinct performance loss. For subtle differences in optical quality, however, such as those arising from production tolerances, the two metrics are not satisfactorily correlated. This calls into question the reliability of current industry practice, in which each produced camera is tested end-of-line (EOL) with the MTF and fixed MTF thresholds are used to qualify the performance of the camera under test.
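The MTF itself can be shown with a minimal sketch. It uses the standard definition of the MTF as the magnitude of the Fourier transform of the line spread function (LSF), normalized to 1 at zero frequency. Optical blur is modeled here as a Gaussian LSF of width `sigma` pixels. That blur model, the window size, and the helper names are assumptions for illustration and are not taken from the article. Bin `k` corresponds to a spatial frequency of `k / n` cycles per pixel.

```python
import cmath
import math


def gaussian_lsf(sigma, n=64):
    """Sampled Gaussian line spread function centered in an n-sample window."""
    center = (n - 1) / 2
    return [math.exp(-0.5 * ((i - center) / sigma) ** 2) for i in range(n)]


def mtf_from_lsf(lsf):
    """MTF as |DFT(LSF)| normalized to 1 at zero frequency (bins 0..n//2)."""
    n = len(lsf)
    spectrum = []
    for k in range(n // 2 + 1):
        s = sum(v * cmath.exp(-2j * math.pi * k * i / n) for i, v in enumerate(lsf))
        spectrum.append(abs(s))
    dc = spectrum[0]
    return [s / dc for s in spectrum]


# A sharp lens (narrow LSF) versus a strongly aberrated one (wide LSF).
sharp = mtf_from_lsf(gaussian_lsf(1.0))
blurry = mtf_from_lsf(gaussian_lsf(3.0))
print(round(sharp[8], 3), round(blurry[8], 3))  # contrast at 8/64 cycles per pixel
```

An EOL test of the kind the article questions would compare a value such as `sharp[8]` against a fixed threshold. The article's point is that small differences in this number do not reliably predict differences in detection AP.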

Video conferencing usage increased dramatically during the pandemic and is expected to remain high with hybrid work. A key aspect of the video experience is background blur or background replacement, which relies on good-quality portrait segmentation in each frame. Software and hardware manufacturers have worked together to use depth sensors to improve this process. Existing solutions incorporate the depth map into post-processing to generate a more natural blurring effect. In this paper, we propose using the depth map to collect background features that improve the segmentation result from the RGB image. Our results show significant improvements over RGB-based networks, and our method runs faster than model-based background feature collection methods.
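The dependence of background blur on segmentation quality can be seen in a minimal sketch of the final compositing step. The segmentation network, depth-guided or not, outputs a per-pixel person mask. The frame is then blended so that person pixels are kept and background pixels are replaced by a blurred copy. This is a generic illustration on a tiny grayscale image, not the paper's depth-guided method. The box blur and the function names are assumptions.

```python
def box_blur(image, radius):
    """Box blur of a 2D grayscale image (list of rows); at the borders only
    pixels inside the image are averaged."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += image[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def blur_background(image, person_mask, radius=2):
    """Alpha-composite: mask=1 keeps the original pixel (person),
    mask=0 takes the blurred pixel (background)."""
    blurred = box_blur(image, radius)
    return [
        [m * p + (1.0 - m) * b for p, b, m in zip(row, brow, mrow)]
        for row, brow, mrow in zip(image, blurred, person_mask)
    ]
```

Any pixel the mask mislabels is either wrongly blurred or wrongly kept sharp. This shows up as halos around the person, which is why better segmentation directly improves the visible effect.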

We present the results of our image analysis of portrait art from the Roman Empire's Julio-Claudian dynastic period. Our novel approach processes pictures of ancient statues, cameos, altar friezes, bas-reliefs, frescoes, and coins with modern mobile apps, such as Reface and FaceApp, to improve identification of the historical subjects depicted. In particular, we have found that the Reface app has a limited but useful capability to restore the approximate appearance of statues' damaged noses. We confirm many traditional identifications, propose a few corrected identifications for items held in museums and private collections around the world, and discuss the advantages and limitations of our approach. For example, Reface may make aquiline noses appear wider or shorter than they should be; this deficiency can be partially corrected when multiple views are available. We demonstrate that our approach can be extended to analyze portraiture from other cultures and historical periods. The article is intended for a broad readership interested in how modern AI-based solutions for mobile imaging merge with the humanities to improve our understanding of modern civilization's ancient past and to increase appreciation of our diverse cultural heritage.

Image captioning generates text that describes the scenes in input images. It has been developed for high-quality images taken in clear weather. In bad weather, however, such as heavy rain, snow, and dense fog, poor visibility caused by rain streaks, rain accumulation, and snowflakes seriously degrades image quality. This hinders the extraction of useful visual features and reduces image captioning performance. To address this practical issue, this study introduces a new encoder for captioning heavy rain images. The central idea is to transform the features extracted from heavy rain input images into semantic visual features associated with words and sentence context. To achieve this, a target encoder is first trained in an encoder-decoder framework to associate visual features with semantic words. Next, an initial reconstruction subnetwork (IRS) based on a heavy rain model makes the objects in a heavy rain image visible. The IRS is then combined with a second subnetwork, the semantic visual feature matching subnetwork (SVFMS), which matches the output features of the IRS with the semantic visual features of the pretrained target encoder. The proposed encoder is based on joint learning of the IRS and SVFMS. It is trained end to end and then connected to the pretrained decoder for image captioning. Experiments demonstrate that the proposed encoder can generate semantic visual features associated with words even from heavy rain images, thereby increasing the accuracy of the generated captions.
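The abstract does not state which heavy rain model the IRS is based on. One widely used formulation in the heavy rain restoration literature composes a clean background `B` with rain streaks `R`, attenuated by a transmission map `T`, plus veiling atmospheric light `A`: `O = T * (B + R) + (1 - T) * A`. The minimal per-pixel sketch below assumes this formulation. It shows how an image is synthesized under the model and how `B` is recovered once `T`, `R`, and `A` are known. In a learned reconstruction network, these quantities would be estimated from the image rather than given. The `t_min` clamp and the function names are hypothetical.

```python
def synthesize_heavy_rain(background, streaks, transmission, atmospheric_light):
    """Assumed heavy rain model, per pixel: O = T * (B + R) + (1 - T) * A."""
    return [
        t * (b + r) + (1.0 - t) * atmospheric_light
        for b, r, t in zip(background, streaks, transmission)
    ]


def invert_heavy_rain(observed, streaks, transmission, atmospheric_light, t_min=0.1):
    """Recover B = (O - (1 - T) * A) / T - R, clamping T from below so that
    dense-fog pixels (T near 0) do not amplify noise without bound."""
    recovered = []
    for o, r, t in zip(observed, streaks, transmission):
        t = max(t, t_min)
        recovered.append((o - (1.0 - t) * atmospheric_light) / t - r)
    return recovered


# Hypothetical three-pixel example with known model parameters.
clean = [0.2, 0.5, 0.8]
rain = [0.0, 0.3, 0.1]
trans = [0.9, 0.6, 0.4]
observed = synthesize_heavy_rain(clean, rain, trans, 0.9)
print(invert_heavy_rain(observed, rain, trans, 0.9))
```

Even with perfect inversion, low-transmission pixels retain little signal. This may explain why the abstract pairs reconstruction with feature matching instead of relying on reconstruction alone.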

With evolving artificial intelligence technology, chatbots have lately become smarter and faster. Chatbots are typically available around the clock, providing continuous support and services. A chatbot, or conversational agent, is a program that communicates with humans in natural language. Developing an intelligent chatbot has remained a challenge since the onset of artificial intelligence. Chatbot functionality ranges from short business-oriented conversations to longer healthcare-intervention conversations. However, the primary role of a chatbot is to understand human utterances so that it can respond appropriately. To that end, popular cloud service providers increasingly offer Natural Language Understanding (NLU) engines. These NLU services identify entities and intents in the user utterances they receive as input. To support integrating such understanding into a chatbot, this paper presents a study of the major existing NLU platforms. We then present a case-study chatbot integrated with the Google DialogFlow and IBM Watson NLU services and discuss their intent recognition performance.
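Intent recognition performance of the kind discussed above can be scored with a minimal sketch. It compares the intent each NLU service returns for a test utterance against the expected intent and reports overall accuracy plus per-intent precision, recall, and F1. The intent labels are hypothetical. The sketch makes no DialogFlow or Watson API calls: it assumes the predicted labels have already been collected from those services. The paper's own evaluation may use different metrics.

```python
from collections import Counter


def intent_metrics(expected, predicted):
    """Accuracy plus per-intent (precision, recall, F1) from paired labels."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for gold, pred in zip(expected, predicted):
        if gold == pred:
            tp[gold] += 1
        else:
            fp[pred] += 1  # the service claimed this intent wrongly
            fn[gold] += 1  # the true intent was missed
    accuracy = sum(tp.values()) / len(expected) if expected else 0.0
    per_intent = {}
    for intent in set(expected) | set(predicted):
        p_den = tp[intent] + fp[intent]
        r_den = tp[intent] + fn[intent]
        precision = tp[intent] / p_den if p_den else 0.0
        recall = tp[intent] / r_den if r_den else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_intent[intent] = (precision, recall, f1)
    return accuracy, per_intent


# Hypothetical test utterances labeled with expected vs. returned intents.
expected = ["greet", "book", "book", "cancel"]
predicted = ["greet", "book", "cancel", "cancel"]
print(intent_metrics(expected, predicted))
```

Per-intent scores matter because two services with the same overall accuracy can fail on different intents.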

Applications in human-centered scene analysis often rely on AI processes that provide 3D data of human bodies. These applications are limited by the accuracy and reliability of the detection. Safety applications require an almost perfect detection rate. The presented approach gives a confidence measure for the likelihood that detected human bodies are real persons. It measures how consistent the estimated 3D pose of the body joints is with prior knowledge about physiologically possible spatial sizes and proportions. To this end, a detailed analysis led to the development of an error metric that allows quantitative evaluation of single limbs and, in aggregate, of the complete body. For a given dataset, an error threshold was derived that correctly verifies 97% of persons and can be used to identify false detections, so-called ghosts. Additionally, the 3D data of single joints could be rated successfully. The results can be used for relabeling and retraining the underlying 2D and 3D pose estimators. They also provide a quantitative, comparable verification method that significantly improves the reliable 3D recognition of real persons and thereby broadens the possibilities for high-standard applications of 3D human-centered technologies.
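The idea of scoring a 3D pose against physiological priors can be illustrated with a minimal sketch. It computes each limb's length from its two joints, measures the relative deviation outside a plausible range, and averages the per-limb errors into a body error. A detection whose body error exceeds a threshold is flagged as a likely ghost. The limb ranges, the relative-deviation formula, the joint names, and the 0.1 threshold are all hypothetical and are not the paper's metric or its dataset-derived threshold. A fuller version would also check proportions between limbs.

```python
import math

# Hypothetical plausible limb-length ranges in meters; NOT values from the paper.
LIMB_RANGES = {
    ("shoulder_l", "elbow_l"): (0.22, 0.38),
    ("elbow_l", "wrist_l"): (0.20, 0.33),
    ("hip_l", "knee_l"): (0.35, 0.55),
    ("knee_l", "ankle_l"): (0.33, 0.52),
}


def limb_error(length, lo, hi):
    """Relative deviation outside [lo, hi]; 0.0 when the length is plausible."""
    if length < lo:
        return (lo - length) / lo
    if length > hi:
        return (length - hi) / hi
    return 0.0


def body_error(joints, ranges=LIMB_RANGES):
    """Per-limb errors and their mean for a dict of joint name -> (x, y, z)."""
    errors = {
        limb: limb_error(math.dist(joints[limb[0]], joints[limb[1]]), lo, hi)
        for limb, (lo, hi) in ranges.items()
    }
    return errors, sum(errors.values()) / len(errors)


def is_ghost(joints, threshold=0.1):
    """Flag a detection as a likely ghost when the mean limb error exceeds threshold."""
    return body_error(joints)[1] > threshold


# Hypothetical detections: a plausible person and the same pose scaled 3x.
person = {
    "shoulder_l": (0.0, 1.4, 0.0), "elbow_l": (0.0, 1.1, 0.0),
    "wrist_l": (0.0, 0.85, 0.0), "hip_l": (0.0, 0.9, 0.0),
    "knee_l": (0.0, 0.45, 0.0), "ankle_l": (0.0, 0.05, 0.0),
}
giant = {name: tuple(3 * c for c in xyz) for name, xyz in person.items()}
print(is_ghost(person), is_ghost(giant))
```

Because the per-limb errors are kept separately, the same computation also shows which limbs or joints are implausible. This mirrors the abstract's rating of single limbs alongside the complete body.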