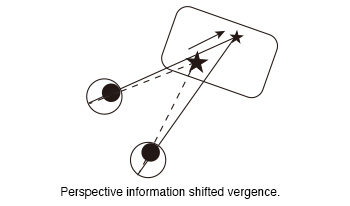

The vergence of subjects was measured while they observed 360-degree images in a virtual reality (VR) goggle. In our previous experiment, we observed a shift in vergence in response to the perspective information presented in 360-degree images when static targets were displayed within them. The aim of this study was to investigate whether a moving target that an observer was gazing at could also guide the observer's vergence. We measured vergence while subjects viewed moving targets in 360-degree images. In the experiment, the subjects were instructed to gaze at a ball displayed in the 360-degree images while wearing the VR goggle. Two different paths were generated for the ball. On the first path, the ball approached the subjects from a distance (Near path). On the second path, the ball moved while remaining at a distance from the subjects (Distant path). Two conditions were set for the moving distance (Short and Long). The moving distance of the left ball in the Long condition was twice that in the Short condition. These two factors were combined to create four conditions (Near Short, Near Long, Distant Short, and Distant Long). In addition, two movement times (5 s and 10 s) were used, but only in the Short conditions; the movement time in the Long conditions was always 10 s. In total, six conditions were created. The results showed that vergence was larger when the ball was close to the subjects than when it was at a distance. That is, the perspective information of the 360-degree images shifted the subjects' vergence. This suggests that the perspective information of the images provided observers with high-quality depth information that guided their vergence toward the target position. Furthermore, this effect was observed not only for static targets but also for moving targets.
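The condition design described above can be made concrete with a short sketch. It enumerates the six conditions (two paths, two moving distances, with the 5 s movement time available only for the short distance) and, as a geometric illustration of why a nearer target implies a larger vergence angle, computes the vergence angle for symmetric fixation. The function names and the default interpupillary distance of 0.063 m are assumptions made for this example; they are not taken from the study.

```python
import math
from itertools import product


def build_conditions():
    """Enumerate (path, distance, movement time in seconds) tuples."""
    conditions = []
    for path, distance in product(("Near", "Distant"), ("Short", "Long")):
        # Short conditions use both movement times; Long conditions always use 10 s.
        durations = (5, 10) if distance == "Short" else (10,)
        for seconds in durations:
            conditions.append((path, distance, seconds))
    return conditions


def vergence_deg(distance_m, ipd_m=0.063):
    """Geometric vergence angle (degrees) for a target straight ahead.

    ipd_m is an assumed interpupillary distance, not a value from the study.
    """
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


if __name__ == "__main__":
    for condition in build_conditions():
        print(condition)
    # A nearer target requires a larger vergence angle than a distant one.
    print(vergence_deg(0.5) > vergence_deg(5.0))
```

The geometric formula only describes the angle needed to fixate a physical target at a given distance; whether perspective cues in a 360-degree image drive vergence in the same direction is the empirical question the experiment addresses.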

360-degree image quality assessment using deep neural networks is usually designed around a multi-channel paradigm that exploits possible viewports. This is mainly due to the high resolution of such images and the unavailability of ground truth labels (subjective quality scores) for individual viewports. The multi-channel model is hence trained to predict the score of the whole 360-degree image. However, this comes at a high complexity cost, as multiple neural networks run in parallel. In this paper, patch-based training is proposed instead. To account for the non-uniform distribution of quality across a scene, a weighted pooling of the patches' scores is applied. The weighting relies on natural scene statistics in addition to perceptual properties related to immersive environments.
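As a rough illustration of weighted pooling, the sketch below combines per-patch quality scores into a single image-level score using a normalized weighted average. The abstract does not specify the weights, so the cosine-of-latitude weighting shown here is only a hypothetical stand-in (it down-weights patches near the poles of an equirectangular image) and is not the natural-scene-statistics and perceptual weighting the paper proposes. The function names are likewise assumptions.

```python
import numpy as np


def pool_patch_scores(scores, weights):
    """Return the weighted average of per-patch scores."""
    scores = np.asarray(scores, dtype=float)
    weights = np.asarray(weights, dtype=float)
    if scores.shape != weights.shape:
        raise ValueError("scores and weights must have the same shape")
    total = weights.sum()
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return float((weights * scores).sum() / total)


def latitude_weights(latitudes_deg):
    """Hypothetical stand-in weights: cosine of each patch's center latitude."""
    return np.cos(np.radians(np.asarray(latitudes_deg, dtype=float)))


if __name__ == "__main__":
    patch_scores = [3.0, 4.0, 2.0]       # made-up predicted patch scores
    patch_latitudes = [0.0, 45.0, 80.0]  # made-up patch center latitudes
    weights = latitude_weights(patch_latitudes)
    print(pool_patch_scores(patch_scores, weights))
```

With uniform weights this reduces to plain average pooling; non-uniform weights let patches judged more perceptually relevant contribute more to the image-level score.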