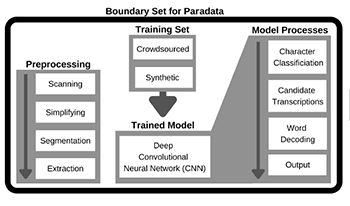

While a familiar term in fields like social science research and digital cultural heritage, ‘paradata’ has not yet been introduced conceptually into the archival realm. In response to an increasing number of experiments with machine learning and artificial intelligence, the InterPARES Trust AI research group proposes defining paradata as ‘information about the procedure(s) and tools used to create and process information resources, along with information about the persons carrying out those procedures.’ Applying this concept in archives can help to ensure that AI-driven systems are designed from the outset to honor the archival ethic, and can aid in the evaluation of off-the-shelf automation solutions. An evaluation of current AI experiments in archives highlights opportunities for paradata-conscious practice.

In recent years, behavioral biometric authentication, which uses habitual behavioral characteristics for personal authentication, has attracted attention as a more secure authentication method, since behavioral traits cannot be mimicked as easily as the fingerprints and faces used in other authentication methods. Among behavioral biometrics, voiceprints have been studied extensively. However, few authentication technologies exploit the habits of hand and finger movements during hand gestures, and conventional hand gesture authentication methods use either color images or depth images alone. In this research, therefore, we propose to extract individual habits from RGB-D images of finger movements and to build a personal authentication system. A 3D CNN, a deep learning-based network, is used to extract the individual habits. An F-measure of 0.97 is achieved when rock-paper-scissors gestures are used as the authentication operation, and an F-measure of 0.97 is also achieved when the disinfection operation is used. These results show the effectiveness of using RGB-D video for personal authentication.
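For readers unfamiliar with the reported metric, the following is a minimal sketch of how an F-measure is computed from classification counts. The function name `f_measure` and the counts in the usage line are hypothetical illustrations, not data or code from the study.

```python
def f_measure(tp: int, fp: int, fn: int, beta: float = 1.0) -> float:
    """Return the F-beta score from true positives, false positives,
    and false negatives. With beta=1 this is the harmonic mean of
    precision and recall (the usual F1 score)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision == 0.0 and recall == 0.0:
        return 0.0  # avoid division by zero when nothing is correct
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical counts, chosen only to illustrate the call:
# 90 genuine users accepted, 10 impostors accepted, 5 genuine users rejected.
score = f_measure(tp=90, fp=10, fn=5)
```

Because the F-measure combines precision and recall, it penalizes both falsely accepted impostors and falsely rejected genuine users, which is why it is a common summary figure for authentication systems.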

Depth sensing technology has become important in a number of consumer, robotics, and automated driving applications. However, the depth maps generated by such technologies today still suffer from limited resolution, sparse measurements, and noise, and require significant post-processing. Depth map data often has higher dynamic range than common 8-bit image data and may be represented as 16-bit values. Deep convolutional neural nets can be used to perform denoising, interpolation and completion of depth maps; however, in practical applications there is a need to enable efficient low-power inference with 8-bit precision. In this paper, we explore methods to process high-dynamic-range depth data using neural net inference engines with 8-bit precision. We propose a simple technique that attempts to retain signal-to-noise ratio in the post-processed data as much as possible and can be applied in combination with most convolutional network models. Our initial results using depth data from a consumer camera device show promise, achieving inference results with 8-bit precision that have similar quality to floating-point processing.
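The abstract does not describe the proposed technique, so the sketch below only illustrates the underlying problem. It compares naive linear rescaling of 16-bit depth values to 8 bits, which merges nearby depths, with one hypothetical alternative: splitting each value into a high byte and a low byte so that two 8-bit planes carry the full 16-bit range. Both functions and the sample values are assumptions made for illustration and are not the authors' method. Plain Python lists are used to keep the example self-contained; an array library would be the usual choice in practice.

```python
def quantize_linear(depth, max_value=65535):
    """Naively rescale 16-bit depth values to the 8-bit range 0..255.
    Nearby depths collapse onto the same 8-bit code."""
    return [[round(v * 255 / max_value) for v in row] for row in depth]


def split_depth16(depth):
    """Split each 16-bit value into (high byte, low byte) planes.
    Each plane fits in 8 bits, and together they are lossless."""
    high = [[v >> 8 for v in row] for row in depth]
    low = [[v & 0xFF for v in row] for row in depth]
    return high, low


def merge_depth16(high, low):
    """Recombine high and low byte planes into 16-bit values."""
    return [
        [(h << 8) | l for h, l in zip(h_row, l_row)]
        for h_row, l_row in zip(high, low)
    ]


# Hypothetical 2x3 depth map with 16-bit values.
depth = [[0, 1000, 1001], [65535, 256, 255]]

coarse = quantize_linear(depth)      # 1000 and 1001 map to the same code
high, low = split_depth16(depth)     # two 8-bit planes
restored = merge_depth16(high, low)  # identical to the original map
```

A byte split preserves the input exactly but does not by itself guarantee that a network's 8-bit intermediate computations retain the same precision, which is the harder problem the abstract addresses.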

Multi-object tracking is an active computer vision problem that has attracted consistent interest due to its wide range of applications in areas such as surveillance, autonomous driving, entertainment, and gaming, to name a few. In the age of deep learning, many computer vision tasks have benefited from convolutional neural networks (CNNs) and have been optimized through rapid development, whereas multi-target tracking remains challenging. A variety of models have drawn on the representational power of deep learning to tackle this problem. This paper examines three CNN-based models that have achieved state-of-the-art performance in multi-object tracking. Each of the three models follows a different paradigm and provides key insight into the development of the field. We examined the models and conducted experiments on all three using the benchmark dataset. The quantitative results of these state-of-the-art models are reported in the standard metrics and provide a basis for future research in the field.
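The abstract does not name the metrics it reports. As an illustration only, the sketch below computes MOTA (Multiple Object Tracking Accuracy), a metric commonly used in multi-object tracking benchmarks, from per-frame error counts. The dictionary keys and the sample counts are hypothetical and are not taken from the paper's experiments.

```python
def mota(frames):
    """Compute MOTA = 1 - (FN + FP + IDSW) / GT, where each count is
    summed over all frames.

    Each frame is a dict with the keys:
      'fn'   missed ground-truth objects (false negatives)
      'fp'   spurious detections (false positives)
      'idsw' identity switches
      'gt'   number of ground-truth objects in the frame
    """
    total_gt = sum(f["gt"] for f in frames)
    if total_gt == 0:
        raise ValueError("MOTA is undefined without ground-truth objects")
    errors = sum(f["fn"] + f["fp"] + f["idsw"] for f in frames)
    return 1.0 - errors / total_gt


# Hypothetical per-frame counts for a two-frame sequence.
frames = [
    {"fn": 1, "fp": 1, "idsw": 0, "gt": 10},
    {"fn": 1, "fp": 0, "idsw": 1, "gt": 10},
]
accuracy = mota(frames)
```

MOTA can be negative when the number of errors exceeds the number of ground-truth objects, so it is usually reported alongside other measures rather than on its own.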

Camera identification is an important topic in the field of digital image forensics. There are three levels of classification: brand, model, and device. Studies in the literature focus mainly on camera model identification and are increasingly based on deep learning (DL). DL-based methods address three main classification tasks: basic (model only), triple (brand, model, and device), and open-set (known and unknown cameras). Unlike in other areas of image processing such as face recognition, most of these methods are evaluated on a single database (Dresden), even though a few others are publicly available. Each available database has its own diversity in terms of camera content and distribution, which makes the use of a single database questionable. Therefore, we conducted extensive tests with different public databases (Dresden, SOCRatES, and Forchheim) that together provide enough features for a viable comparison of DL-based methods for camera model identification. In addition, the different classification tasks (basic, triple, open-set) create a disparity that prevents direct comparison, so we focus only on basic camera model identification. We also use transfer learning (specifically fine-tuning) to perform our comparative study across databases.