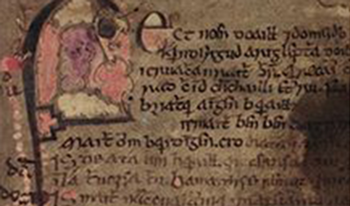

The HYPERDOC database is a publicly available hyperspectral imaging resource for the analysis of historical documents and of mock-ups of inks and pigments. It comprises 1681 hyperspectral datacubes containing millions of reflectance spectra in the VNIR (400–1000 nm) and SWIR (900–1700 nm) spectral ranges. It covers mock-ups made from different ink recipes as well as documents from the 15th to 20th centuries preserved in two archives in Granada, Spain. We will present the data acquisition process and the structure of the database, followed by a live demonstration that guides participants through its use. We will also summarize three applications of the database: document binarization, ink classification using machine learning techniques, and ink aging analysis. The HYPERDOC database facilitates the integration of advanced imaging techniques into document analysis and preservation, contributing to the non-invasive study of historical materials.
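Document binarization separates ink from the support in a single image. As a rough illustration only, and not the HYPERDOC authors' method, the sketch below applies global Otsu thresholding to one reflectance band. It assumes the band is a 2D list of reflectance values in which ink is darker than the paper; the band values in the usage lines are hypothetical.

```python
# Minimal sketch (assumption: not the HYPERDOC method): global Otsu thresholding
# of a single reflectance band, treating darker pixels as ink.


def otsu_threshold(values, bins=256):
    """Return the threshold that maximises between-class variance (Otsu's method)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo  # flat band: nothing to separate
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1

    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0, sum0 = 0, 0.0
    best_bin, best_var = 0, -1.0
    for t in range(bins):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (m0 - m1) ** 2
        if between > best_var:
            best_var, best_bin = between, t
    # Bins 0..best_bin form the dark class; return the upper edge of that range.
    return lo + (best_bin + 1) * width


def binarize_band(band):
    """Map each pixel to 1 (ink) if darker than the Otsu threshold, else 0 (support)."""
    t = otsu_threshold([v for row in band for v in row])
    return [[1 if v < t else 0 for v in row] for row in band]


# Hypothetical 2x4 band: two dark "ink" pixels on a bright support.
band = [[0.85, 0.12, 0.88, 0.90],
        [0.87, 0.10, 0.86, 0.89]]
print(binarize_band(band))
```

A single global threshold is the simplest baseline; uneven illumination or faded ink would usually call for local or multi-band methods.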

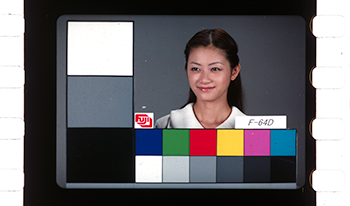

The digitisation of analogue film is critical for cultural heritage preservation, as film deteriorates over time due to environmental factors and analogue projectors are becoming obsolete. Conventional RGB scanning fails to fully capture the spectral complexity of film, making multispectral imaging (MSI) a feasible alternative. However, MSI is constrained by the limited availability of narrow-band LEDs in certain spectral regions and by inherent variability in LED emission. Aiming to minimise colour reproduction errors in film scanning, this study investigates the optimisation of LED spectral band selection and the impact of LED spectral variability. Informed by the optimised bands, several sets of commercially available LEDs were then evaluated in MSI capture simulations; the 7-band and 8-band set-ups achieved good colour accuracy and showed relatively low sensitivity to LED spectral variability. A physical multispectral capture of a film photograph showed good agreement between the simulated and real results.
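A capture simulation of this kind rests on a simple spectral model: each channel response is the wavelength-wise product of LED emission, film transmittance and sensor sensitivity, summed over wavelength. The sketch below illustrates that model under loudly stated assumptions and is not the study's simulation. LEDs are modelled as Gaussians with a given peak and FWHM, the sensor is flat, the film is imaged in transmission with a hypothetical linear transmittance ramp, and all LED parameters are hypothetical. It also shows how a drift in one LED's peak wavelength, one form of LED variability, changes the recorded response.

```python
import math

# Hypothetical model, not the study's simulation: Gaussian LEDs, flat sensor,
# film imaged in transmission, all spectra sampled on a uniform wavelength grid.


def gaussian_led(wavelengths, peak_nm, fwhm_nm):
    """Unit-height Gaussian emission (real LED spectra are often asymmetric)."""
    sigma = fwhm_nm / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    return [math.exp(-0.5 * ((w - peak_nm) / sigma) ** 2) for w in wavelengths]


def channel_response(wavelengths, emission, transmittance, sensor):
    """Riemann-sum integral of emission x transmittance x sensor sensitivity."""
    step = wavelengths[1] - wavelengths[0]
    return step * sum(e * t * s for e, t, s in zip(emission, transmittance, sensor))


def simulate_pixel(wavelengths, leds, transmittance, sensor):
    """One response per LED band, given (peak_nm, fwhm_nm) pairs."""
    return [channel_response(wavelengths, gaussian_led(wavelengths, p, f), transmittance, sensor)
            for p, f in leds]


def peak_shift_effect(wavelengths, peak_nm, fwhm_nm, transmittance, sensor, shift_nm):
    """Relative change in one channel's response when its LED peak drifts by shift_nm."""
    base = channel_response(wavelengths, gaussian_led(wavelengths, peak_nm, fwhm_nm),
                            transmittance, sensor)
    drifted = channel_response(wavelengths, gaussian_led(wavelengths, peak_nm + shift_nm, fwhm_nm),
                               transmittance, sensor)
    return (drifted - base) / base


wl = list(range(380, 781))                         # 1 nm sampling, 380-780 nm
sensor = [1.0] * len(wl)                           # assumption: flat sensitivity
film = [0.2 + 0.6 * (w - 380) / 400 for w in wl]   # hypothetical transmittance ramp
leds = [(450, 20), (530, 30), (630, 20)]           # hypothetical (peak, FWHM) pairs
print(simulate_pixel(wl, leds, film, sensor))
print(peak_shift_effect(wl, 530, 30, film, sensor, 5.0))  # about +1.8 %
```

In a full simulation the per-channel responses would then be mapped to colour coordinates and compared with reference values, and the drift test repeated over realistic ranges of LED variation.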

Hyperspectral imaging has been widely and consistently applied in the field of cultural heritage for material identification. In the specific context of historical document analysis, it is frequently supported and complemented by other analytical techniques. In this study, we propose a straightforward method for material identification that combines adaptive direct identification of pigments, based on a reference library of visible and near-infrared spectral reflectance data, with a KNN classifier applied to an extended spectral range for inks and supports. The method achieved high accuracy, successfully identifying the materials present in both real historical documents and mock-ups created following medieval techniques. Its performance is illustrated on three spectral image fragments extracted from the HYPERDOC project database.
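The two identification steps can be sketched as follows, under stated assumptions. Direct identification is reduced here to picking the library reference with the lowest RMSE; the abstract's adaptive scheme is not specified, so this is a simplified stand-in. The KNN step uses Euclidean distance between spectra sampled at the same wavelengths and a majority vote. All library names and reflectance values are hypothetical.

```python
import math
from collections import Counter


def rmse(s1, s2):
    """Root-mean-square difference between two equally sampled spectra."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(s1, s2)) / len(s1))


def library_match(spectrum, library):
    """Simplified direct identification: the reference with the smallest RMSE."""
    return min(library, key=lambda name: rmse(spectrum, library[name]))


def knn_classify(spectrum, training, k=3):
    """Majority vote among the k training spectra nearest in Euclidean distance.
    Ties go to the label first seen among the sorted neighbours (the closest)."""
    neighbours = sorted(training, key=lambda item: math.dist(spectrum, item[0]))[:k]
    return Counter(label for _, label in neighbours).most_common(1)[0][0]


# Hypothetical pigment library: reflectance at four hypothetical VIS/NIR bands.
pigments = {
    "azurite": [0.35, 0.30, 0.10, 0.15],
    "vermilion": [0.05, 0.06, 0.55, 0.60],
    "lead white": [0.85, 0.88, 0.90, 0.90],
}
# Hypothetical labelled ink spectra over an extended range.
inks = [
    ([0.08, 0.08, 0.09, 0.10], "carbon"),
    ([0.09, 0.08, 0.10, 0.11], "carbon"),
    ([0.10, 0.20, 0.50, 0.80], "iron-gall"),
    ([0.12, 0.25, 0.55, 0.82], "iron-gall"),
    ([0.11, 0.22, 0.52, 0.78], "iron-gall"),
]
print(library_match([0.07, 0.07, 0.50, 0.58], pigments))
print(knn_classify([0.11, 0.21, 0.50, 0.79], inks, k=3))
```

Using a library match for pigments and a trained classifier for inks mirrors the split described above: pigments have distinctive reference spectra, while inks of one class vary more and benefit from several labelled examples.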

Hyperspectral imaging (HSI) has been widely used in conservation studies of various cultural heritage (CH) objects, e.g., paintings, murals, and handwritten historical manuscripts. In this work, HSI is used to study painted historical maps, namely five maps of the Scandinavian region from the Ortelius collection preserved at the National Library of Norway in Oslo. Drawing on knowledge of how colour was applied to these maps, HSI-based pigment identification is performed under several spectral mixing assumptions: pure pigments, subtractive mixing, and additive mixing. The results show that both the pure-pigment and the subtractive mixing models are suitable for pigment identification in the case of watercolour applied on a paper substrate.
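The mixing hypotheses can be compared by fitting each candidate model to a measured spectrum and keeping the lowest-error explanation. This sketch illustrates that idea and is not the paper's procedure. Additive mixing is a concentration-weighted average of reflectances. Subtractive mixing is approximated as a weighted geometric mean, which is equivalent to absorbances (-log R) adding in proportion to concentration. Only two-pigment mixtures on a coarse concentration grid are searched. More rigorous subtractive models, such as Kubelka-Munk theory, are not shown, and the pigment names and spectra are hypothetical.

```python
import math
from itertools import combinations


def rmse(s1, s2):
    """Root-mean-square difference between two equally sampled spectra."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(s1, s2)) / len(s1))


def additive_mix(r1, r2, c):
    """Additive model: weighted average of reflectances (c = fraction of pigment 1)."""
    return [c * a + (1 - c) * b for a, b in zip(r1, r2)]


def subtractive_mix(r1, r2, c):
    """Simplified subtractive model: weighted geometric mean of reflectances."""
    return [a ** c * b ** (1 - c) for a, b in zip(r1, r2)]


def identify(measured, library, mix_model=None, steps=20):
    """Return (rmse, explanation) for the best pure pigment or, if mix_model is
    given, the best two-pigment mixture with c in 1/steps, 2/steps, ..."""
    best = (math.inf, None)
    for name, ref in library.items():             # pure-pigment hypothesis
        err = rmse(measured, ref)
        if err < best[0]:
            best = (err, (name,))
    if mix_model is not None:                      # two-pigment hypotheses
        for n1, n2 in combinations(sorted(library), 2):
            for i in range(1, steps):
                c = i / steps
                err = rmse(measured, mix_model(library[n1], library[n2], c))
                if err < best[0]:
                    best = (err, (n1, n2, c))
    return best


library = {  # hypothetical reflectance spectra at five wavelengths
    "blue": [0.40, 0.35, 0.15, 0.08, 0.10],
    "yellow": [0.10, 0.30, 0.70, 0.75, 0.80],
    "red": [0.08, 0.07, 0.10, 0.60, 0.70],
}
green = subtractive_mix(library["blue"], library["yellow"], 0.5)
print(identify(green, library))                   # best pure-pigment fit only
print(identify(green, library, subtractive_mix))  # recovers blue + yellow, c = 0.5
print(identify(green, library, additive_mix))     # best additive explanation
```

Comparing the residual errors of the three calls is one way to judge which mixing assumption best explains a given region.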

Although spectral imaging has now been used in cultural heritage for more than 20 years, uptake by heritage practitioners remains limited. While some point to cost as the issue, the real concern appears to be communicating effectively with interested users: conservators, curators, scholars and other heritage professionals. Many are unaware of the range of information and data that can be captured from collections and made available, or of how easily the resulting datasets can be explored. Since spectral imaging is essentially the next stage of digitization and of making heritage available in the digital realm, more effort should go into sharing knowledge of spectral capture and processing methodology so that it becomes a more accessible tool. Setting up a new spectral imaging system, communicating and building networks for engagement, and the opportunities and challenges involved will be discussed.

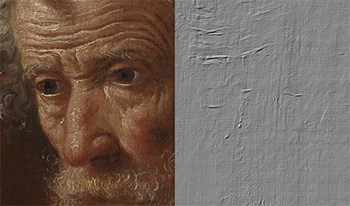

As 3D imaging for cultural heritage continues to evolve, it is important to step back and assess both the objective and the subjective attributes of image quality. The delivery and interchange of 3D content today is reminiscent of the early days of the transition from analog to digital photography, when practitioners struggled to maintain quality for online and print representations. Traditional 2D photographic documentation techniques have matured thanks to decades of collective photographic knowledge and the development of international standards that support global archiving and interchange. Because of this maturity, still photography techniques and existing standards play a key role in shaping 3D standards for delivery, archiving and interchange. This paper outlines specific techniques for leveraging ISO 19264-1 objective image quality analysis to validate 3D color rendition, and methods for translating important aesthetic camera and lighting techniques from physical studio sets to rendered 3D scenes. High-fidelity still reference photography of collection objects, used as a benchmark for assessing the image quality of 3D renders and online representations, has helped and will continue to help bridge the current gaps between 2D and 3D imaging practice. The accessible techniques outlined in this paper have vastly improved the rendition of online 3D objects and will be presented in a companion workshop.
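Objective colour rendition checks of this kind compare the CIELAB values of colour-target patches in a rendered image against reference values, commonly using the CIEDE2000 colour difference. The sketch below implements the standard CIEDE2000 formula and averages it over patches. How patch values are extracted from a 3D render, and which aims and tolerances apply, are outside this sketch and should be taken from ISO 19264-1 itself. The patch values in the second half of the usage lines are hypothetical.

```python
import math


def ciede2000(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """CIEDE2000 colour difference between two CIELAB triples."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2
    # Rescale a* to compensate for non-uniformity near the neutral axis.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2
    g = 0.5 * (1 - math.sqrt(c_bar ** 7 / (c_bar ** 7 + 25 ** 7)))
    a1p, a2p = (1 + g) * a1, (1 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360
    h2p = math.degrees(math.atan2(b2, a2p)) % 360

    # Differences in lightness, chroma and hue.
    dLp = L2 - L1
    dCp = c2p - c1p
    if c1p * c2p == 0:
        dhp = 0.0
    else:
        dhp = h2p - h1p
        if dhp > 180:
            dhp -= 360
        elif dhp < -180:
            dhp += 360
    dHp = 2 * math.sqrt(c1p * c2p) * math.sin(math.radians(dhp / 2))

    # Mean values used by the weighting functions.
    Lbp = (L1 + L2) / 2
    Cbp = (c1p + c2p) / 2
    if c1p * c2p == 0:
        hbp = h1p + h2p
    elif abs(h1p - h2p) <= 180:
        hbp = (h1p + h2p) / 2
    elif h1p + h2p < 360:
        hbp = (h1p + h2p + 360) / 2
    else:
        hbp = (h1p + h2p - 360) / 2

    t = (1 - 0.17 * math.cos(math.radians(hbp - 30))
         + 0.24 * math.cos(math.radians(2 * hbp))
         + 0.32 * math.cos(math.radians(3 * hbp + 6))
         - 0.20 * math.cos(math.radians(4 * hbp - 63)))
    d_theta = 30 * math.exp(-(((hbp - 275) / 25) ** 2))
    r_c = 2 * math.sqrt(Cbp ** 7 / (Cbp ** 7 + 25 ** 7))
    s_l = 1 + 0.015 * (Lbp - 50) ** 2 / math.sqrt(20 + (Lbp - 50) ** 2)
    s_c = 1 + 0.045 * Cbp
    s_h = 1 + 0.015 * Cbp * t
    r_t = -math.sin(math.radians(2 * d_theta)) * r_c

    dl, dc, dh = dLp / (kL * s_l), dCp / (kC * s_c), dHp / (kH * s_h)
    return math.sqrt(dl ** 2 + dc ** 2 + dh ** 2 + r_t * dc * dh)


def mean_delta_e(reference_patches, rendered_patches):
    """Average CIEDE2000 over corresponding patches of a colour target."""
    diffs = [ciede2000(r, m) for r, m in zip(reference_patches, rendered_patches)]
    return sum(diffs) / len(diffs)


# Sanity check with a widely used published CIEDE2000 test pair (expected ~2.0425).
print(round(ciede2000((50.0, 2.6772, -79.7751), (50.0, 0.0, -82.7485)), 4))
reference = [(96.0, -0.5, 1.5), (38.0, 13.5, 14.0)]  # hypothetical patch aims
rendered = [(95.2, -0.3, 2.1), (39.1, 12.8, 15.2)]   # hypothetical render values
print(mean_delta_e(reference, rendered))
```

Reporting per-patch values alongside the mean helps show whether errors concentrate in particular hues or tones, such as the neutral scale.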

Funded by the Helen Hamlyn Trust, ARCHiOx (Analysis and Recording of Cultural Heritage in Oxford) is a collaborative project uniting Oxford University’s Bodleian Libraries and the Factum Foundation. The Selene Photometric Stereo Scanner (PSS), conceived and developed by the Factum Foundation, has been piloted at the Bodleian Library for the last three years. This technology has been used to reveal near-invisible text and artwork in originals from across Oxford University’s collections. Renders created with the Selene PSS have revealed what is difficult or impossible to record through conventional photography, and have allowed the creation of physical facsimiles. This paper demonstrates how Selene recordings have assisted research on cultural heritage originals and natural history specimens.
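The abstract does not describe Selene's processing, but the general principle of photometric stereo can be sketched with the classic Lambertian formulation. A pixel imaged under at least three known light directions l_k has intensity I_k = ρ (n · l_k). The scaled normal g = ρn therefore follows from a least-squares solve and yields both the albedo ρ and the unit surface normal n. This per-pixel sketch assumes distant point lights, a Lambertian surface, and no shadows or specular highlights. The light directions and test surface are hypothetical.

```python
import math


def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]


def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def photometric_stereo_pixel(lights, intensities):
    """Least-squares Lambertian fit: solve (L^T L) g = L^T I, then split g = albedo * normal."""
    ltl = [[sum(l[i] * l[j] for l in lights) for j in range(3)] for i in range(3)]
    lti = [sum(l[i] * v for l, v in zip(lights, intensities)) for i in range(3)]
    g = solve3(ltl, lti)
    albedo = math.sqrt(sum(x * x for x in g))
    normal = [x / albedo for x in g] if albedo > 0 else [0.0, 0.0, 1.0]
    return albedo, normal


# Hypothetical set-up: four lights at 45 degrees elevation around the object.
lights = [normalize(v) for v in ([1, 0, 1], [0, 1, 1], [-1, 0, 1], [0, -1, 1])]
true_normal = normalize([0.2, -0.1, 1.0])  # a slightly tilted surface patch
true_albedo = 0.7
pixel = [true_albedo * sum(n * l for n, l in zip(true_normal, light)) for light in lights]
print(photometric_stereo_pixel(lights, pixel))  # recovers albedo 0.7 and the tilted normal
```

Repeating this per pixel gives a normal map, which can be relit from any direction or integrated into a height map. That is how subtle surface relief, such as blind-embossed or indented text, can become visible.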

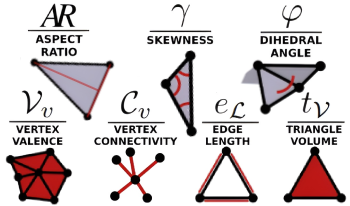

Mass 3D digitization of heritage objects is now strongly encouraged by various institutions, in an effort to measure and document objects for the future, to use them for visualization and dissemination, and to open them up to the analytic tools available for 3D meshes. However, the mesh structure required depends heavily on the application, and the data can vary significantly with the digitizing institution, object characteristics, and acquisition workflow. In this work, we sample 3D data stored in several major open-access databases for 3D heritage data and analyze their content. We examine the sampled mesh structures by computing various graph metrics, check integrity measures, and evaluate the meshes' potential for future use. Finally, we provide an overview of the use cases and interoperability of the meshes based on the results of the mesh structure analysis.
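To make this kind of structural analysis concrete, the sketch below computes a few common graph and integrity measures from an indexed face list. It reports vertex, edge and face counts, the Euler characteristic V - E + F, boundary edges (used by one face), non-manifold edges (shared by more than two faces), degenerate faces, isolated vertices, connected components and mean vertex degree. This particular metric set is an illustrative assumption; the study's actual metrics are not listed in the abstract.

```python
from collections import Counter


def mesh_metrics(num_vertices, faces):
    """Structural metrics for a polygon mesh given as a vertex count plus index lists."""
    edge_uses = Counter()
    for face in faces:
        for i, a in enumerate(face):
            b = face[(i + 1) % len(face)]
            edge_uses[(min(a, b), max(a, b))] += 1  # undirected edge key

    # Union-find over the edge graph to count connected components.
    parent = list(range(num_vertices))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in edge_uses:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[ra] = rb

    used = {v for face in faces for v in face}
    V, E, F = num_vertices, len(edge_uses), len(faces)
    return {
        "vertices": V,
        "edges": E,
        "faces": F,
        "euler_characteristic": V - E + F,
        "boundary_edges": sum(1 for n in edge_uses.values() if n == 1),
        "non_manifold_edges": sum(1 for n in edge_uses.values() if n > 2),
        "degenerate_faces": sum(1 for face in faces if len(set(face)) < len(face)),
        "isolated_vertices": V - len(used),
        "components": len({find(v) for v in used}),  # isolated vertices excluded
        "mean_degree": 2 * E / len(used) if used else 0.0,
    }


# Usage: a closed tetrahedron, then an open triangle plus one unreferenced vertex.
tetra = [(0, 1, 2), (0, 3, 1), (0, 2, 3), (1, 3, 2)]
print(mesh_metrics(4, tetra))
print(mesh_metrics(4, [(0, 1, 2)]))
```

A closed, genus-0 surface such as the tetrahedron gives an Euler characteristic of 2 with no boundary or non-manifold edges. Deviations from this, such as holes, stray vertices or multiple components, are the kind of integrity issues that affect whether a mesh suits analysis, printing or only visualization.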

Photogrammetry has greatly improved the recording, preservation, and accessibility of cultural heritage in archaeology and scientific research. The increasing use of 3D modeling in heritage projects brings significant challenges, particularly in data management, where digital models must be reliable, traceable, and usable. These concerns are often disregarded until they impede access or reuse, affecting the long-term preservation and accessibility of cultural heritage data.